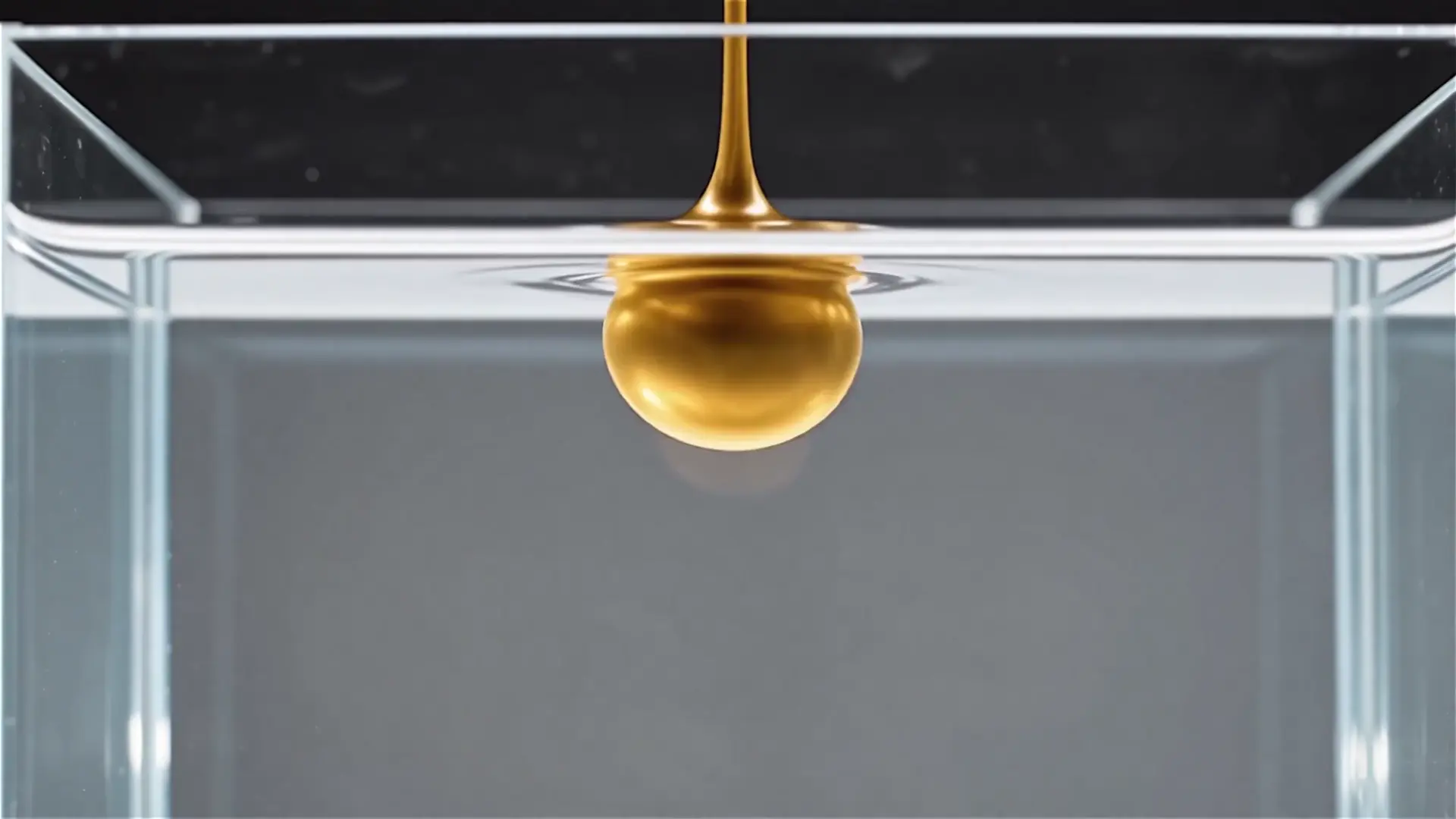

Create fluid, expressive animations with multi-shot storytelling features.

Wan 2.6 reference-to-video on RunComfy is a production-grade AI video-to-video engine. It takes reference videos and a descriptive prompt and generates cinematic videos with consistent motion, subject identity, and audio-visual alignment. It is designed for restyling existing footage, motion-guided storytelling, and reference-accurate video generation.

Output format: MP4, MOV, or WebM at up to 1080p and 24 fps.

| Name | Type | Required | Description |
|---|---|---|---|
| prompt* | string | Yes | Text prompt describing the desired scene, motion, and style (≤2000 characters). |
| audio_url | video_uri | No | Reference video file used to guide motion and content (file size < 15 MB). |
| duration | integer | No | Output video length in seconds (choices: 5, 10). |
| img_url* | image_uri | Yes | First-frame reference image (supported formats: jpg, jpeg, png, bmp, webp; 360–2000 px). |
| resolution | string | No | Output resolution (480P, 720P, 1080P). |
| negative_prompt | string | No | Optional text to suppress unwanted styles or artifacts. |

> Required parameters are marked with *.
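The limits in the parameter table can be checked on the client before a request is sent, which avoids spending a generation on an input the service will reject. The sketch below is a hypothetical helper (`build_params` is not part of any RunComfy SDK); it only encodes the constraints listed in the table, and it assumes the image format can be read from the URL's file extension.

```python
from typing import Optional

# Constraints transcribed from the parameter table.
MAX_PROMPT_CHARS = 2000
DURATION_CHOICES = {5, 10}                      # seconds
RESOLUTION_CHOICES = {"480P", "720P", "1080P"}
IMAGE_EXTENSIONS = {"jpg", "jpeg", "png", "bmp", "webp"}


def build_params(prompt: str, img_url: str, audio_url: Optional[str] = None,
                 duration: Optional[int] = None, resolution: Optional[str] = None,
                 negative_prompt: Optional[str] = None) -> dict:
    """Validate inputs against the documented limits and return a parameter dict.

    Hypothetical client-side pre-check; raises ValueError on the first problem found.
    """
    if not prompt:
        raise ValueError("prompt is required")
    if len(prompt) > MAX_PROMPT_CHARS:
        raise ValueError(f"prompt exceeds {MAX_PROMPT_CHARS} characters")
    if not img_url:
        raise ValueError("img_url is required")
    # Assumption: the format is identified by the extension, ignoring any query string.
    ext = img_url.split("?", 1)[0].rsplit(".", 1)[-1].lower()
    if ext not in IMAGE_EXTENSIONS:
        raise ValueError(f"unsupported image format: {ext!r}")
    if duration is not None and duration not in DURATION_CHOICES:
        raise ValueError("duration must be 5 or 10")
    if resolution is not None and resolution not in RESOLUTION_CHOICES:
        raise ValueError("resolution must be 480P, 720P, or 1080P")

    params = {"prompt": prompt, "img_url": img_url}
    # Include optional fields only when the caller actually set them.
    optional = {"audio_url": audio_url, "duration": duration,
                "resolution": resolution, "negative_prompt": negative_prompt}
    params.update({k: v for k, v in optional.items() if v is not None})
    return params
```

For example, `build_params("A slow dolly shot through a neon-lit street", "https://example.com/first.png", duration=5, resolution="720P")` returns a dict containing only the four fields that were set. The 15 MB limit on the reference video is not checked here, because it depends on the file itself rather than its URL.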

Pricing on RunComfy is usage-based and depends on resolution and video length.

| Resolution | Price (USD per second of output) |
|---|---|
| 720p | $0.05 |
| 1080p | $0.08 |
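Because pricing is per second, the cost of a clip is its duration multiplied by the rate for its resolution. The sketch below assumes the charge is strictly linear, with no minimums or extra fees, and works in whole cents to avoid floating-point rounding. It deliberately raises an error for 480P, since the pricing table lists no rate for it; `estimate_cost_cents` is a hypothetical helper.

```python
# Per-second rates from the pricing table, in US cents.
RATE_CENTS_PER_SECOND = {"720p": 5, "1080p": 8}


def estimate_cost_cents(resolution: str, duration_s: int) -> int:
    """Estimate the cost of one clip in cents: duration x per-second rate."""
    key = resolution.lower()
    if key not in RATE_CENTS_PER_SECOND:
        # No rate is published for this resolution, so none is assumed.
        raise ValueError(f"no published rate for {resolution!r}")
    return RATE_CENTS_PER_SECOND[key] * duration_s


for res, secs in [("720P", 5), ("720P", 10), ("1080P", 10)]:
    cents = estimate_cost_cents(res, secs)
    print(f"{res} x {secs}s -> ${cents / 100:.2f}")
# 720P x 5s -> $0.25
# 720P x 10s -> $0.50
# 1080P x 10s -> $0.80
```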

1) Prepare Reference Inputs

- Select a short reference video that represents the desired motion or subject behavior.

- Choose a clear first-frame image for img_url.

2) Upload to RunComfy

- Upload the reference video and image through the RunComfy Models playground or via the API.

3) Write the Prompt

- Describe what should follow the reference and what should change (style, environment, camera, mood).

4) Optional: Add a Negative Prompt

- Exclude artifacts, unwanted styles, or visual noise if needed.

5) Select Output Settings

- Choose the output resolution (480P, 720P, or 1080P) and duration (5 or 10 seconds).

6) Generate the Video

- Run the model and preview the output directly in the playground.

7) Iterate or Download

- Refine prompts or references and regenerate, or download the final video.
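Steps 1 and 2 are easier to get right when the reference files are checked locally before uploading. The sketch below compares a reference video's size and a first-frame image's dimensions against the limits in the parameter table. It assumes that the 360–2000 px range applies to both width and height, and it reads "< 15 MB" as decimal megabytes, the stricter interpretation. `check_reference_inputs` is a hypothetical helper that takes plain numbers so it does not depend on any particular file or image library.

```python
# Limits from the parameter table. "< 15 MB" is read as decimal megabytes, the
# stricter reading, so a file that passes here also passes a MiB-based limit.
MAX_VIDEO_BYTES = 15_000_000
MIN_SIDE_PX, MAX_SIDE_PX = 360, 2000


def check_reference_inputs(video_size_bytes: int, image_width: int, image_height: int) -> list:
    """Return human-readable problems with the inputs; an empty list means they look OK."""
    problems = []
    if video_size_bytes >= MAX_VIDEO_BYTES:
        problems.append(
            f"reference video is {video_size_bytes} bytes; it must be under {MAX_VIDEO_BYTES}")
    # Assumption: the 360-2000 px range applies to both image width and height.
    for name, side in (("width", image_width), ("height", image_height)):
        if not MIN_SIDE_PX <= side <= MAX_SIDE_PX:
            problems.append(
                f"image {name} of {side}px is outside {MIN_SIDE_PX}-{MAX_SIDE_PX}px")
    return problems
```

In practice, the video size could come from `os.path.getsize("reference.mp4")` (the filename is illustrative), and the image dimensions from an image library such as Pillow's `Image.open(path).size`.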


Wan 2.6 currently outputs up to 1080p resolution at 24 fps and supports multiple aspect ratios, including 16:9, 9:16, and 1:1. It is optimized for short clips, typically 5 to 10 seconds per segment; longer videos are built by chaining multiple shots into a narrative sequence.
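As a rough planning aid, a target runtime can be divided into 5- and 10-second segments, and the frame count follows from the 24 fps output rate (for example, 10 s × 24 fps = 240 frames). The sketch below assumes that you generate each segment separately and chain them yourself; `plan_segments` is a hypothetical helper, not a RunComfy feature. It rounds the target up to a multiple of 5 seconds, because those are the only available durations.

```python
FPS = 24                   # output frame rate stated above
SEGMENT_LENGTHS = (10, 5)  # available durations in seconds, longest first


def plan_segments(total_seconds: int) -> list:
    """Split a target runtime into 10 s and 5 s segments, longest first.

    The target is rounded up to a multiple of 5. Greedy selection always works
    here because 10 is a multiple of 5.
    """
    remaining = -(-total_seconds // 5) * 5  # ceiling to the next multiple of 5
    segments = []
    for length in SEGMENT_LENGTHS:
        while remaining >= length:
            segments.append(length)
            remaining -= length
    return segments


plan = plan_segments(25)
print(plan, sum(plan) * FPS, "frames")  # [10, 10, 5] 600 frames
```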

To move from trial to production, prototype your Wan 2.6 prompts in the RunComfy Models playground, then reuse the same parameters with the RunComfy API, authenticating with the API access key from your dashboard. The Wan 2.6 endpoints mirror playground behavior, so production results should match your web UI tests. Integration examples and rate-limit information are available in the official API documentation.
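The hand-off from playground to API amounts to sending the same parameter set in an authenticated request. The sketch below builds such a request with Python's standard `urllib.request` but does not send it. Several details are placeholders and assumptions, not RunComfy's documented interface: the endpoint URL, the `RUNCOMFY_API_KEY` environment variable name, the bearer-token `Authorization` header, and the JSON body layout. Take the real values from the official API documentation.

```python
import json
import os
import urllib.request

# Placeholder only: replace with the endpoint given in the official API docs.
ENDPOINT = "https://api.example.invalid/wan-2-6/reference-to-video"


def make_request(params: dict, api_key: str) -> urllib.request.Request:
    """Wrap playground-tested parameters in an authenticated JSON POST (not sent)."""
    body = json.dumps(params).encode("utf-8")
    return urllib.request.Request(
        ENDPOINT,
        data=body,
        headers={
            # Assumption: bearer-token auth; confirm the scheme in the API docs.
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Hypothetical environment variable holding the key copied from your dashboard.
key = os.environ.get("RUNCOMFY_API_KEY", "")
req = make_request({"prompt": "A slow dolly shot through a neon-lit street",
                    "img_url": "https://example.com/first.png",
                    "duration": 5, "resolution": "720P"}, key)
```

Keeping the API key in an environment variable, rather than in source code, keeps the same script usable across machines without exposing the key in version control.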

Compared to Wan 2.5, Wan 2.6 offers enhanced motion stability, stronger character consistency, better lip-sync accuracy, and native audio generation where applicable. The overall pipeline with Wan 2.6 is more stable with improved temporal coherence and finer detail handling, resulting in smoother and more realistic visual and multimodal outputs.

Wan 2.6 differentiates itself with integrated audio-visual synchronization, precise lip-sync, and reference-guided motion transfer across tasks. Unlike some competitors that may require separate audio production steps, Wan 2.6 produces synchronized audio and visuals in a single pass, making it a more integrated tool for diverse production environments.

Wan 2.6 supports multilingual inputs and outputs, automatically generating localized audio and lip-synced speech in its supported languages. This capability extends across its multimodal modes, enabling global storytelling from a single interface.

Wan 2.6 includes native audio generation for voiceovers, background music, and sound effects aligned with the generated visuals. Its multimodal architecture synchronizes audio and video in the same generation pass, so no separate manual sound-design step is needed.

Wan 2.6 excels in short-form visual storytelling such as ads, social media clips, explainers, product spots, and training content. Its flexible architecture allows creators to build coherent multi-sequence narratives with consistent characters, motion, and audio across scenes, ideal for marketing, e-learning, and interactive use cases.
