
| Parameter | Type | Default/Range | Description |
|---|---|---|---|
| prompt | string | default: "" | Required. Instruction text describing the generation or the edit to apply. |
| image_urls | array[string] | default: [] | One or more image URLs to use as sources or references for image-to-image edits. |
| image_size | string (enum) | auto, 1024x1024, 1536x1024, 1024x1536 (default: auto) | Target aspect/size. Use auto to let the model choose; specify exact dimensions for square, landscape, or portrait. |
| background | string (enum) | auto, transparent, opaque (default: auto) | Background handling. Transparent enables export-ready assets; opaque keeps a solid background. |
| quality | string (enum) | low, medium, high (default: high) | Rendering quality/performance tradeoff. High emphasizes fidelity. |
| input_fidelity | string (enum) | low, high (default: high) | Degree to preserve content from the first input image; high maintains stronger likeness and layout. |
| output_format | string (enum) | jpeg, png, webp (default: png) | Output file format. Use PNG for transparency, JPEG for smaller size, WEBP for modern compression. |
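The parameter table maps naturally onto a request payload. The Python sketch below fills in the documented defaults and rejects values outside each enum before any request is made. The flat JSON shape and the name build_payload are assumptions for illustration, not RunComfy's documented request format; the check that a transparent background is not paired with JPEG follows from JPEG having no alpha channel.

```python
from typing import Any, Optional

# Allowed values and defaults for the enum parameters, copied from the table.
ENUMS: dict[str, tuple[tuple[str, ...], str]] = {
    "image_size": (("auto", "1024x1024", "1536x1024", "1024x1536"), "auto"),
    "background": (("auto", "transparent", "opaque"), "auto"),
    "quality": (("low", "medium", "high"), "high"),
    "input_fidelity": (("low", "high"), "high"),
    "output_format": (("jpeg", "png", "webp"), "png"),
}


def build_payload(prompt: str, image_urls: Optional[list[str]] = None,
                  **options: str) -> dict[str, Any]:
    """Return a request payload with defaults filled in and enums checked.

    Hypothetical helper: the payload layout is an assumption.
    """
    if not prompt.strip():
        raise ValueError("prompt is required")
    unknown = set(options) - set(ENUMS)
    if unknown:
        raise ValueError(f"unknown parameters: {sorted(unknown)}")
    payload: dict[str, Any] = {"prompt": prompt, "image_urls": list(image_urls or [])}
    for name, (allowed, default) in ENUMS.items():
        value = options.get(name, default)
        if value not in allowed:
            raise ValueError(f"{name} must be one of {allowed}, got {value!r}")
        payload[name] = value
    # JPEG has no alpha channel, so a transparent background cannot survive it.
    if payload["background"] == "transparent" and payload["output_format"] == "jpeg":
        raise ValueError("background='transparent' needs png or webp output")
    return payload
```

For example, build_payload("Replace the sky with a sunset", ["https://example.com/photo.png"], quality="medium") returns a dict whose image_size and background are "auto" and whose output_format is "png", matching the table defaults.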

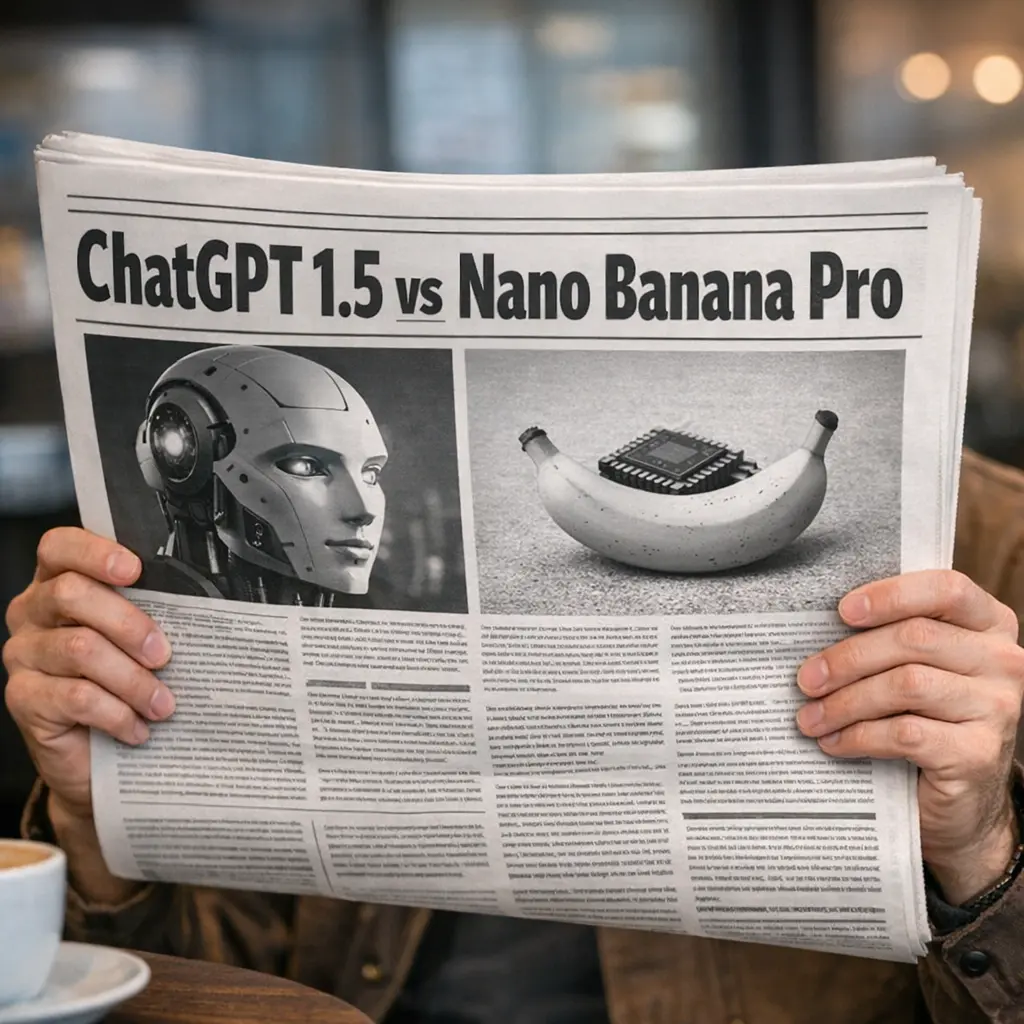

- GPT Image 1.5 focuses on strong instruction-following, fast iteration, and precise image editing (OpenAI claims up to 4× faster generation).

- Nano Banana Pro emphasizes studio-style control, higher output resolution (up to 4K), multi-reference composition (up to 14 images), and optional Search grounding for factual visuals.

Model IDs: GPT Image 1.5 is gpt-image-1.5 (snapshot: gpt-image-1.5-2025-12-16), marketed in ChatGPT as ChatGPT Images; Nano Banana Pro is gemini-3-pro-image-preview.

2.3.1 GPT Image 1.5 (OpenAI)

Limits & Formats

Workflow Characteristics

2.3.2 Nano Banana Pro (Gemini 3 Pro Image)

Limits & Formats

Workflow Characteristics

Common Praise

Common Criticism

Common Praise

Common Criticism



GPT Image 1.5 can create original visuals from text or modify existing images through image-to-image workflows. It excels at preserving fine details, lighting, and texture across multiple edits, and OpenAI claims up to 4× faster generation than GPT Image 1. This makes it well suited to creative professionals who need consistency and realism in iterative edits.

Compared to GPT Image 1, GPT Image 1.5 introduces improved prompt adherence, more realistic lighting and composition, and richer texture handling in image-to-image transformations. It also provides smoother iterative editing and better text fidelity, which helps developers and technical artists retain visual consistency through complex editing workflows.

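GPT Image 1.5 outputs at the fixed sizes listed under image_size: 1024×1024 (square, about 1 MP), 1536×1024 (landscape), or 1024×1536 (portrait), the latter two about 1.6 MP each. Prompt limits are near 1000 tokens. Reference images are passed through image_urls, which accepts one or more URLs, and input_fidelity controls how closely the first image is preserved. Developers who need tightly controlled multi-reference compositing may still prefer to combine references manually before upload or consider alternate workflows.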

GPT Image 1.5 supports square (1024×1024, 1:1), landscape (1536×1024, 3:2), and portrait (1024×1536, 2:3) sizes, or auto to let the model choose. Nonstandard aspect ratios are auto-cropped or padded. PNG and JPEG are accepted as input images for image-to-image editing, and output_format can produce PNG, JPEG, or WEBP.
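To map an arbitrary input image onto one of the fixed image_size values, one option is to pick the size whose aspect ratio is closest to the input's. The sketch below compares ratios in log space so that landscape and portrait mismatches are penalized symmetrically; this heuristic and the helper names closest_size and megapixels are our own assumptions, not the service's documented auto behavior. It also shows the pixel arithmetic behind the size options.

```python
import math

# Fixed sizes from the image_size parameter, as (width, height).
SIZES = {
    "1024x1024": (1024, 1024),  # 1:1
    "1536x1024": (1536, 1024),  # 3:2 landscape
    "1024x1536": (1024, 1536),  # 2:3 portrait
}


def closest_size(width: int, height: int) -> str:
    """Return the supported size whose aspect ratio is nearest to width/height.

    Hypothetical heuristic: distance is measured between log aspect ratios.
    """
    if width <= 0 or height <= 0:
        raise ValueError("dimensions must be positive")
    target = math.log(width / height)
    return min(SIZES, key=lambda k: abs(math.log(SIZES[k][0] / SIZES[k][1]) - target))


def megapixels(size: str) -> float:
    """Pixel count of a supported size, in millions of pixels."""
    w, h = SIZES[size]
    return w * h / 1_000_000
```

For example, closest_size(1920, 1080) returns "1536x1024", since 16:9 is nearer to 3:2 than to 1:1, and megapixels("1536x1024") is 1.572864.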

Once your GPT Image 1.5 prototype works as expected in the RunComfy Playground, you can move it to production through the RunComfy API, which mirrors the playground's parameters, including those for image-to-image calls. You authenticate with your API key, call the ‘generation’ endpoint, and manage USD credits or paid tiers to scale production workloads.
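Moving from the playground to the API usually amounts to sending the same parameters as an authenticated HTTP POST. The sketch below only prepares such a request with Python's standard library. API_URL is a hypothetical placeholder and the Bearer-token header is an assumption, so take the real endpoint URL, auth header, and payload shape from RunComfy's API documentation.

```python
import json
import urllib.request

# Hypothetical placeholder; substitute the real generation endpoint.
API_URL = "https://api.example.com/gpt-image-1.5/generation"


def make_request(payload: dict, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request carrying the API key."""
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={
            "Authorization": f"Bearer {api_key}",  # assumed auth scheme
            "Content-Type": "application/json",
        },
    )
```

Passing the returned object to urllib.request.urlopen would send it; that step is left out so the sketch runs offline, and a production client would also need to handle HTTP errors and retries.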

GPT Image 1.5 stands out for its balanced blend of image quality, speed, and consistency across edits. While rivals like Flux 2 may offer higher resolution, GPT Image 1.5 provides more stable identity preservation, coherent lighting, and semantic prompt accuracy—especially useful in image-to-image editing scenarios for commercial applications.

GPT Image 1.5 improves the legibility of small or dense text elements embedded in generated graphics. When performing image-to-image edits involving logos or signage, the model retains crisp outlines and consistent font rendering, surpassing GPT Image 1 and many competing systems in text fidelity.

In general, you may use GPT Image 1.5 outputs commercially, but always confirm the applicable licensing terms on the official OpenAI platform or RunComfy policy pages. Commercial workflows involving image-to-image editing should verify output rights and data policies, as these may differ depending on API integration modes.

GPT Image 1.5 is designed to preserve facial likeness, textures, and lighting consistency over successive edits, and the input_fidelity parameter (high by default) controls how strongly the first input image's likeness and layout are kept. This helps developers and technical artists perform multi-stage image-to-image transformations, such as character or product retexturing, without introducing visual drift.
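A multi-stage edit of this kind can be expressed as a loop in which each stage's output becomes the next stage's only input image. In the sketch below, edit is a hypothetical callable standing in for whatever client call performs one image-to-image request and returns the result's URL; the payload keys follow the parameter table, but the function itself is an illustration, not a RunComfy API. Keeping every intermediate URL makes it easy to spot the stage where drift first appears.

```python
from typing import Callable

# Hypothetical: takes one request payload, returns the edited image's URL.
EditFn = Callable[[dict], str]


def run_edit_chain(source_url: str, prompts: list[str], edit: EditFn,
                   input_fidelity: str = "high") -> list[str]:
    """Apply prompts in order, feeding each output back in as the next input.

    Returns the output URL of every stage so intermediate versions can be
    inspected for drift.
    """
    current = source_url
    outputs: list[str] = []
    for prompt in prompts:
        payload = {
            "prompt": prompt,
            "image_urls": [current],           # previous result is the only input
            "input_fidelity": input_fidelity,  # "high" keeps likeness and layout
        }
        current = edit(payload)
        outputs.append(current)
    return outputs
```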

Efficient prompting and batching can reduce USD consumption in RunComfy's GPT Image 1.5 API. For image-to-image tasks, reusing masked edits instead of running full regenerations preserves credits and lowers processing costs while keeping control over fine visual adjustments.
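One generic client-side way to avoid paying for the same work twice is to cache results keyed on the exact request. This is not a RunComfy feature: the DedupClient class and its call argument are hypothetical. Because image generation is not deterministic, the cache only suits cases where a repeated request should return the asset already produced, not cases where a fresh variation is wanted.

```python
import json
from typing import Callable


class DedupClient:
    """Wrap a billed request function and skip exact repeat requests."""

    def __init__(self, call: Callable[[dict], str]) -> None:
        self._call = call                 # hypothetical: performs one billed request
        self._cache: dict[str, str] = {}
        self.billed_calls = 0

    def run(self, payload: dict) -> str:
        # sort_keys makes payloads with the same content produce the same key.
        key = json.dumps(payload, sort_keys=True)
        if key not in self._cache:
            self._cache[key] = self._call(payload)
            self.billed_calls += 1
        return self._cache[key]
```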
