1. What is ToonCrafter?

ToonCrafter is an AI technique for generative cartoon interpolation. Given two cartoon images, it uses pre-trained image-to-video diffusion priors to generate the in-between frames, producing a smooth transition from the first image to the second. It generates videos of up to 16 frames at a resolution of 512x320 pixels.

2. How does ToonCrafter work?

ToonCrafter creates smooth animations from static cartoon images using Latent Diffusion Models (LDMs). An LDM encodes images into a compressed latent space, where noise is added and then progressively removed through a denoising process. Running this denoising with the two input images as references produces the intermediate frames between them, resulting in a fluid animation.
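The add-noise/remove-noise loop can be sketched with the standard DDPM/DDIM equations. This is a generic NumPy illustration, not ToonCrafter's code: the linear noise schedule, the latent shape, and the helper names (`make_alpha_bars`, `add_noise`, `guided_eps`, `ddim_step`) are assumptions, and a real model would predict the noise with a neural network instead of being handed the true noise. The `eta` and `cfg_scale` arguments mirror the node inputs of the same names, assuming the node uses DDIM sampling with classifier-free guidance.

```python
import numpy as np

def make_alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative product of (1 - beta_t) for an assumed linear beta schedule."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)

def add_noise(z0, t, alpha_bars, rng):
    """Forward process: z_t = sqrt(a_t) * z0 + sqrt(1 - a_t) * eps."""
    eps = rng.standard_normal(z0.shape)
    a_t = alpha_bars[t]
    return np.sqrt(a_t) * z0 + np.sqrt(1.0 - a_t) * eps, eps

def guided_eps(eps_uncond, eps_cond, cfg_scale):
    """Classifier-free guidance: push the prediction toward the conditional one."""
    return eps_uncond + cfg_scale * (eps_cond - eps_uncond)

def ddim_step(z_t, eps, t, t_prev, alpha_bars, eta, rng):
    """One DDIM update from step t to t_prev (t_prev = -1 means 'fully clean')."""
    a_t = alpha_bars[t]
    a_prev = alpha_bars[t_prev] if t_prev >= 0 else 1.0
    # Estimate of the clean latent implied by the current noise prediction.
    z0_hat = (z_t - np.sqrt(1.0 - a_t) * eps) / np.sqrt(a_t)
    # eta scales the fresh noise injected at this step; eta = 0 is deterministic.
    sigma = eta * np.sqrt((1.0 - a_prev) / (1.0 - a_t)) * np.sqrt(1.0 - a_t / a_prev)
    # The formula is derived for 0 <= eta <= 1; clipping guards larger values.
    direction = np.sqrt(max(1.0 - a_prev - sigma**2, 0.0)) * eps
    noise = sigma * rng.standard_normal(z_t.shape)
    return np.sqrt(a_prev) * z0_hat + direction + noise

# Demo with a hypothetical 4-channel latent for a 512x320 frame (8x downsampling assumed).
rng = np.random.default_rng(0)
alpha_bars = make_alpha_bars()
z0 = rng.standard_normal((4, 40, 64))
z_t, eps = add_noise(z0, 999, alpha_bars, rng)
z_rec = ddim_step(z_t, eps, 999, -1, alpha_bars, eta=0.0, rng=rng)
```

With the true noise and `eta=0.0`, a single step from the noisiest level straight to the end recovers the clean latent, which is a quick sanity check of the algebra; a sampler would instead take `steps` smaller updates using the model's noise predictions.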

A notable feature of ToonCrafter is its Toon Rectification Learning. This process adapts the AI model, originally trained on live-action videos, to understand and generate cartoon animations. By fine-tuning the model with a large dataset of high-quality cartoon videos, ToonCrafter learns the unique motion and stylistic elements of cartoons, such as exaggerated movements and simpler textures.

ToonCrafter also incorporates a Detail Injection and Propagation mechanism built on a dual-reference-based 3D decoder. When decoding the generated latents back into frames, the decoder injects pixel-level details from the two input frames into the new frames, keeping them consistent with the original artwork and reducing visual artifacts.

Additionally, ToonCrafter offers sketch-based controllable generation, allowing animators to provide sketches that guide the creation of intermediate frames. This feature gives artists more control over the animation process, enabling them to specify particular poses or movements and ensuring that the final animation aligns with their vision.

3. How to Use ComfyUI ToonCrafter

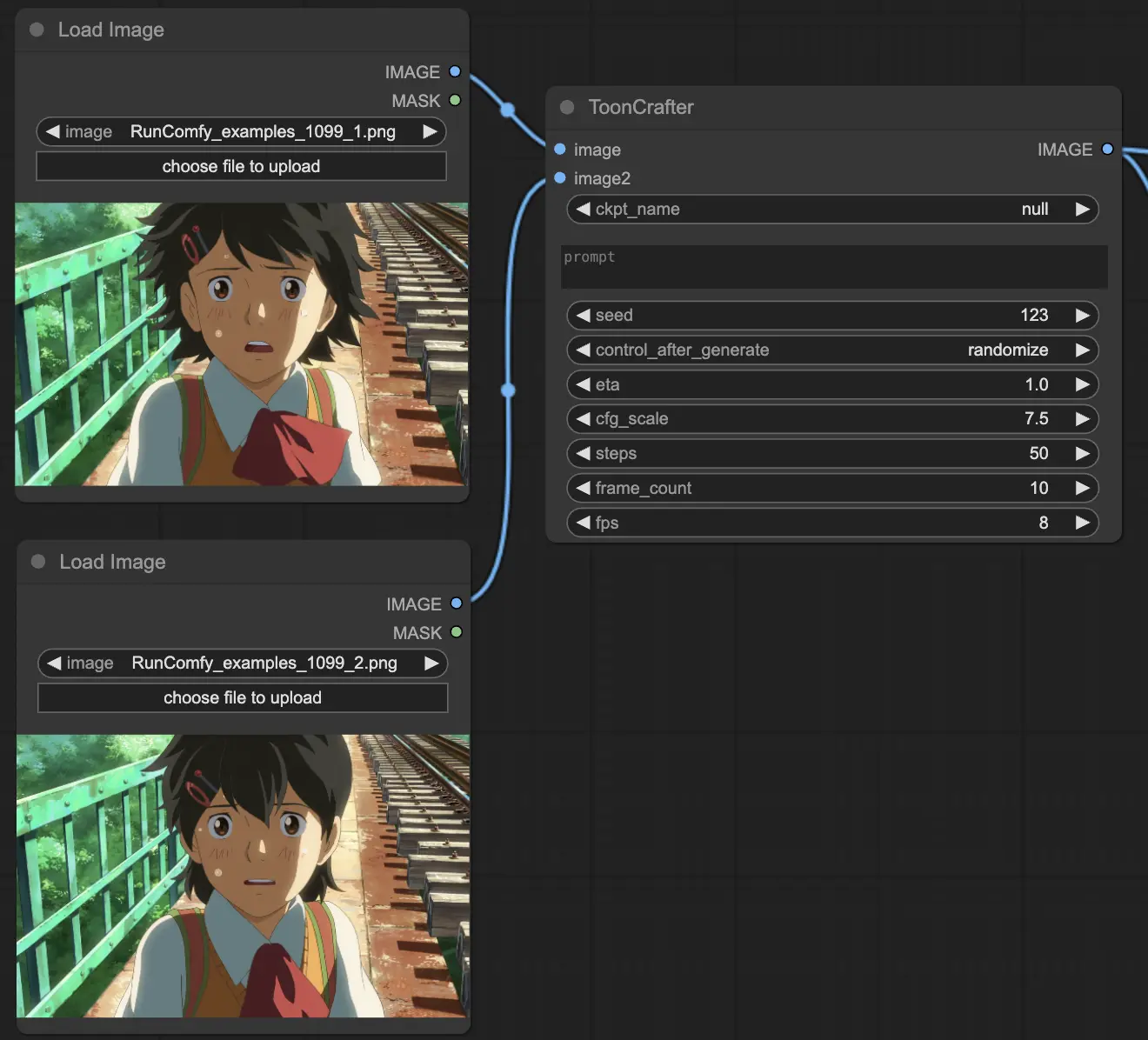

ComfyUI ToonCrafter Node: Input Parameters

The ToonCrafter node requires several input parameters that determine the behavior and output of the interpolation process. Here's a detailed explanation of each parameter:

- image: The first input image (type: IMAGE).

- image2: The second input image (type: IMAGE).

- ckpt_name: The name of the checkpoint to use (type: STRING, options: list of available checkpoints).

- prompt: A textual description to guide the interpolation (type: STRING, supports multiline and dynamic prompts).

- seed: A seed value for random number generation to ensure reproducibility (type: INT, default: 123).

- eta: Controls how much random noise is injected at each sampling step. A diffusion model produces a frame by removing noise over a series of steps; as in DDIM sampling, eta = 0 makes this process deterministic, while larger values add more fresh noise per step and make the results more varied (type: FLOAT, default: 1.0, range: 0.0 to 15.0, step: 0.1).

- cfg_scale: The classifier-free guidance scale (type: FLOAT, default: 7.5, range: 1.0 to 15.0, step: 0.5).

- steps: Number of diffusion steps (type: INT, default: 50, range: 1 to 60, step: 1).

- frame_count: The number of frames to generate (type: INT, default: 10, range: 5 to 30, step: 1).

- fps: Frames per second for the output video (type: INT, default: 8, range: 1 to 60, step: 1).
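The numeric inputs above can be captured in a small schema. The sketch below is hypothetical (`ToonCrafterParams` is not part of the node); it only encodes the defaults and ranges listed here, rejects out-of-range values, and shows how `frame_count` and `fps` together determine the clip length. It does not check the step sizes.

```python
from dataclasses import dataclass
from typing import ClassVar, Dict, Tuple

@dataclass
class ToonCrafterParams:
    """Hypothetical container mirroring the node's documented numeric inputs."""
    seed: int = 123
    eta: float = 1.0
    cfg_scale: float = 7.5
    steps: int = 50
    frame_count: int = 10
    fps: int = 8

    # Inclusive (min, max) ranges as documented for the node.
    RANGES: ClassVar[Dict[str, Tuple[float, float]]] = {
        "eta": (0.0, 15.0),
        "cfg_scale": (1.0, 15.0),
        "steps": (1, 60),
        "frame_count": (5, 30),
        "fps": (1, 60),
    }

    def validate(self) -> None:
        """Raise ValueError if any ranged input falls outside its documented range."""
        for name, (lo, hi) in self.RANGES.items():
            value = getattr(self, name)
            if not lo <= value <= hi:
                raise ValueError(f"{name}={value} is outside [{lo}, {hi}]")

    def duration_seconds(self) -> float:
        """Length of the output clip: number of frames divided by playback rate."""
        return self.frame_count / self.fps
```

With the defaults, 10 frames played at 8 fps give a 1.25-second clip; raising `fps` shortens the clip without adding frames.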

ComfyUI ToonCrafter Node: Output Parameters

The output of the ToonCrafter node is a sequence of interpolated frames, which can be used to create a video. Here's what you can expect:

- IMAGE: The generated frames of the interpolated video. These frames are returned as a tensor and can be further processed or saved as a video file.
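In ComfyUI, an IMAGE is conventionally a float tensor laid out as (frames, height, width, channels) with values in [0, 1]. Assuming the ToonCrafter node follows that convention, the minimal NumPy sketch below converts such output into 8-bit frames ready for a video encoder. `frames_to_uint8` is a hypothetical helper, and the random array only stands in for real node output; a PyTorch tensor would first be converted with `.cpu().numpy()`.

```python
import numpy as np

def frames_to_uint8(frames: np.ndarray) -> np.ndarray:
    """Convert float frames in [0, 1] with layout (N, H, W, C) to uint8."""
    if frames.ndim != 4:
        raise ValueError("expected a (frames, height, width, channels) array")
    # Clip stray values, scale to 0..255, and round before casting.
    return (np.clip(frames, 0.0, 1.0) * 255.0).round().astype(np.uint8)

# Stand-in for node output: 10 RGB frames at 512x320 (width x height).
frames = np.random.default_rng(0).random((10, 320, 512, 3), dtype=np.float32)
video = frames_to_uint8(frames)
```

The resulting frames can then be written out at the chosen `fps` with any video encoder.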