This ComfyUI Img2Vid workflow was created by Titto13, based on the exceptional work of ipiv. The workflow focuses on dynamic image generation and adjustment and consists of AnimateDiff LCM, IPAdapter, QRCode ControlNet, and Custom Mask modules. Each of these modules plays a crucial role in the Img2Vid process and contributes to the quality of the morphing animation.

Core Components of the Img2Vid workflow for morphing animation

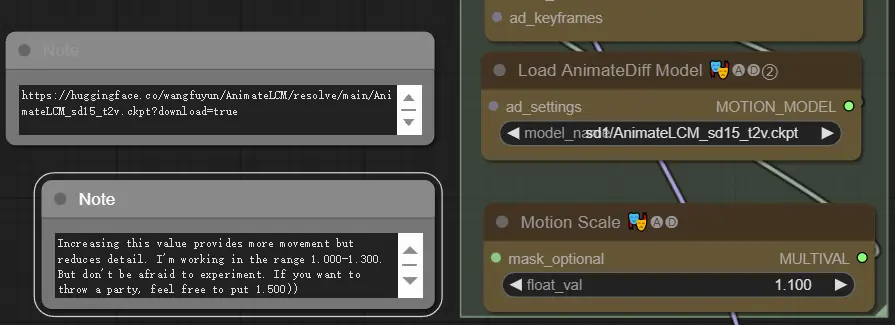

1. AnimateDiff LCM Module:

Integrates the AnimateLCM model into the AnimateDiff setup to accelerate the rendering process. AnimateLCM speeds up video generation by reducing the number of inference steps required and improves result quality through decoupled consistency learning. This allows the use of models that typically do not produce high-quality results, making AnimateLCM an effective tool for creating detailed animations.

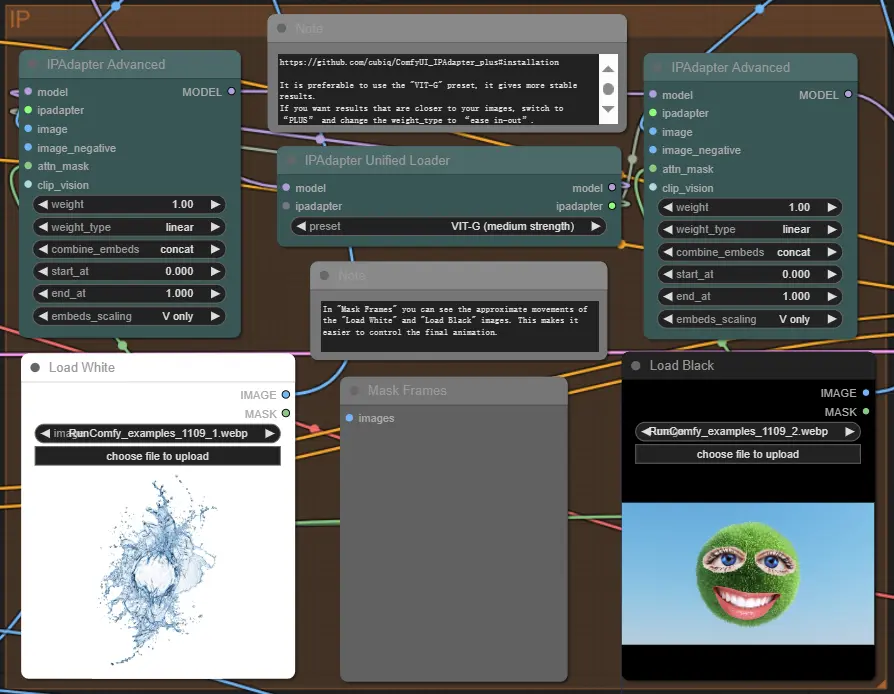

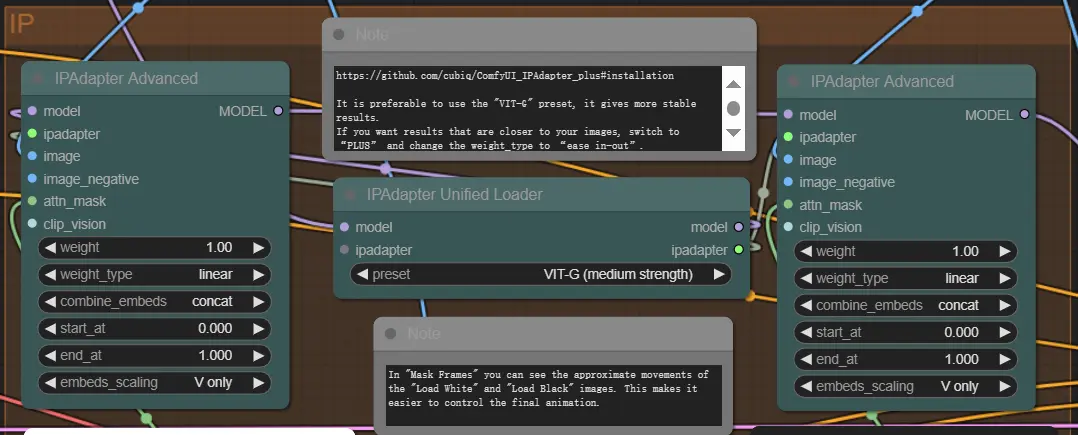

2. IPAdapter Module:

Utilizes the attention mask function of IPAdapter to achieve morphing between reference images. Users can generate dedicated attention masks for each image, ensuring smooth transitions in the final video.

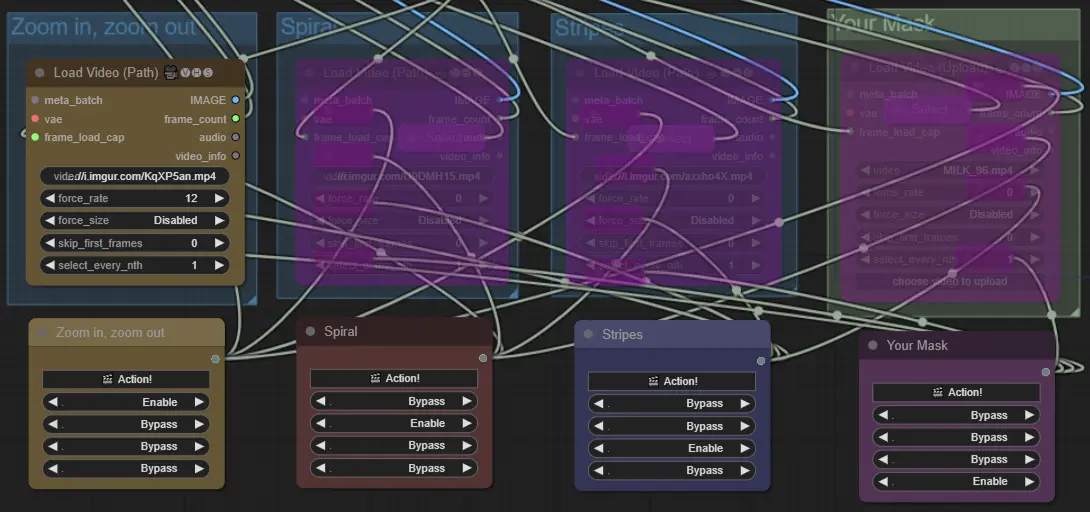

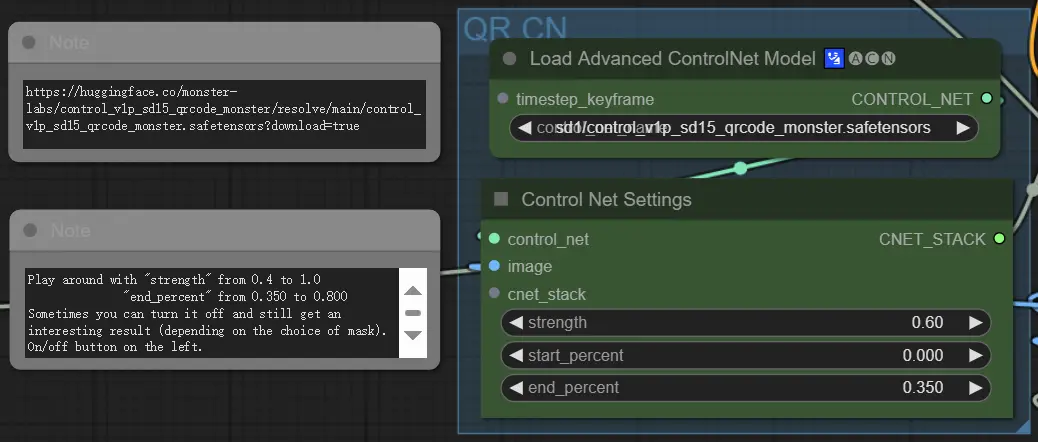

3. QRCode ControlNet Module:

Uses a black-and-white video as the input for the ControlNet QRCode model, guiding the animation flow and enhancing the visual dynamics of the morphing sequence.

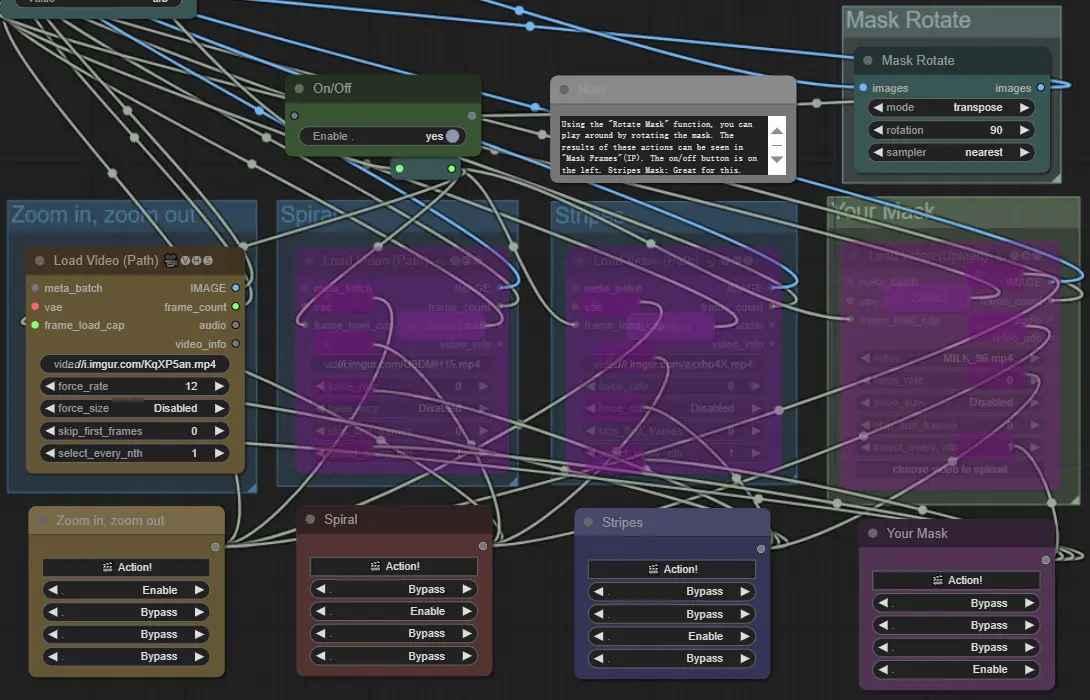

4. Mask Module:

Provides three preset masks and allows users to load custom masks. You can switch between these masks with a single click to achieve various effects.

How to use the Img2Vid workflow to create morphing animations

1. Image Loading and Mask Application

- Image Loading: Load images into the "Load White" and "Load Black" nodes. The workflow includes various image masks that users can select based on their needs.

- Mask Processing: Masks can be selected by clicking "Action!", and custom masks can be uploaded and applied for added flexibility.

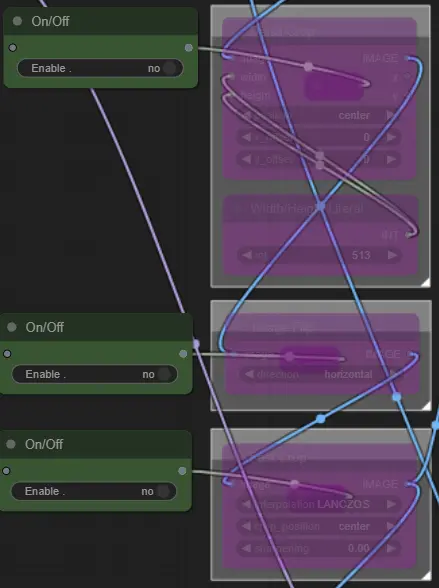

2. Image Adjustment

- Image Rotation, Cropping, and Flipping: Adjust images using the "Rotate Mask" and "Flip Image" functions to achieve the desired effects. The "Fast Crop" function lets users choose between center cropping and adding black borders to make images fit. The "Detail Crop" function enables cropping of specific details from images. (This feature gives you more control over your creations, so enable it if you need it.)
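The difference between the two "Fast Crop" choices comes down to simple arithmetic, sketched below. This is an illustration of the general technique, not the node's actual implementation. The function names and the 512x512 target size are assumptions made for the example.

```python
def center_crop_box(src_w, src_h, dst_w, dst_h):
    """Return (left, top, right, bottom) of the largest centered region
    of the source that has the target aspect ratio."""
    if src_w * dst_h > src_h * dst_w:
        # Source is wider than the target: trim the left and right sides.
        crop_w, crop_h = src_h * dst_w // dst_h, src_h
    else:
        # Source is taller (or equal): trim the top and bottom.
        crop_w, crop_h = src_w, src_w * dst_h // dst_w
    left = (src_w - crop_w) // 2
    top = (src_h - crop_h) // 2
    return (left, top, left + crop_w, top + crop_h)


def letterbox_size(src_w, src_h, dst_w, dst_h):
    """Return (new_w, new_h, pad_x, pad_y): the scaled size that fits inside
    the target, and the black border on each side (rounded down)."""
    if src_w * dst_h > src_h * dst_w:
        # Source is wider: fill the width, add borders above and below.
        new_w, new_h = dst_w, src_h * dst_w // src_w
    else:
        # Source is taller (or equal): fill the height, add side borders.
        new_w, new_h = src_w * dst_h // src_h, dst_h
    return (new_w, new_h, (dst_w - new_w) // 2, (dst_h - new_h) // 2)


if __name__ == "__main__":
    print(center_crop_box(1920, 1080, 512, 512))  # (420, 0, 1500, 1080)
    print(letterbox_size(1920, 1080, 512, 512))   # (512, 288, 0, 112)
```

Center cropping discards the edges of the image but fills the whole frame, while adding black borders keeps the entire image at the cost of unused frame area.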

3. Parameter Adjustment

- AnimateDiff - Motion Scale: Adjusting this parameter changes the animation's fluidity. Increasing the value adds more movement but may reduce detail quality. The recommended range is 1.000-1.300, with experimentation encouraged.

- QRCode ControlNet - Strength and End Percent: These parameters control the animation's intensity and transition effect. Generally, adjust "Strength" between 0.4 and 1.0 and "End Percent" between 0.350 and 0.800.

- Mask - Force Rate: Set to "0" for initial speed or "12" for accelerated and doubled cycles. Adjust this value based on animation length and effect needs.

- IPAdapter - Preset: The "VIT-G" preset is recommended for more stable results. For results closer to the original images, switch to the "PLUS" preset and set "weight_type" to "ease in-out."
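As a quick reference, the numeric ranges recommended above can be collected in a small helper that flags values outside them. This is only a sketch for keeping notes while experimenting. The dictionary keys are hypothetical labels chosen for this example, not actual ComfyUI node field names, and values outside the ranges are not errors, since experimentation is encouraged.

```python
# Recommended ranges taken from the parameter list above.
# The key names are illustrative labels, not ComfyUI field names.
RECOMMENDED_RANGES = {
    "motion_scale": (1.0, 1.3),             # AnimateDiff - Motion Scale
    "controlnet_strength": (0.4, 1.0),      # QRCode ControlNet - Strength
    "controlnet_end_percent": (0.35, 0.8),  # QRCode ControlNet - End Percent
}


def outside_recommended(settings):
    """Return the names of settings that fall outside the recommended range.

    Settings without a known range are ignored.
    """
    flagged = []
    for name, value in settings.items():
        if name not in RECOMMENDED_RANGES:
            continue
        low, high = RECOMMENDED_RANGES[name]
        if not (low <= value <= high):
            flagged.append(name)
    return flagged


if __name__ == "__main__":
    trial = {
        "motion_scale": 1.5,
        "controlnet_strength": 0.6,
        "controlnet_end_percent": 0.5,
    }
    print(outside_recommended(trial))  # ['motion_scale']
```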

For more information and to view the original work, please visit the Civitai page of the author Titto13.