ComfyUI Wan 2.1 Workflow Description

1. What is Wan 2.1?

The ComfyUI Wan 2.1 workflow is a video generation pipeline that uses the Wan 2.1 models to create high-quality videos from text prompts and/or base images. Wan 2.1 supports Text-to-Video (T2V) and Image-to-Video (I2V) generation, producing clips of up to about 5 seconds with natural motion. According to its developers, Wan 2.1 outperforms existing open-source models and several commercial alternatives across multiple benchmarks. The larger Wan 2.1 14B model supports output at up to 720P.

2. Benefits and Capabilities of Wan 2.1

- High-quality output: Generates 480P to 720P videos with realistic motion and high-fidelity textures.

- Hardware accessibility: The lightweight Wan 2.1 1.3B model requires only 8.19 GB of VRAM, making it compatible with most modern consumer GPUs, including the machines provided by RunComfy.

- Versatile generation: Wan 2.1 supports both Text-to-Video (T2V) and Image-to-Video (I2V) workflows.

- Multilingual support: According to the Wan Team, Wan 2.1 is the first video model able to generate both Chinese and English text within videos.

- VAE efficiency: The Wan-VAE encodes and decodes 1080P videos efficiently while preserving temporal consistency.

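- Fast processing: The Wan 2.1 1.3B model delivers quick results while maintaining quality. The Wan Team reports about 4 minutes for a 5-second 480P video on an RTX 4090, without optimizations such as quantization.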

3. How to Use Wan 2.1

3.1 Wan 2.1 Generation Methods

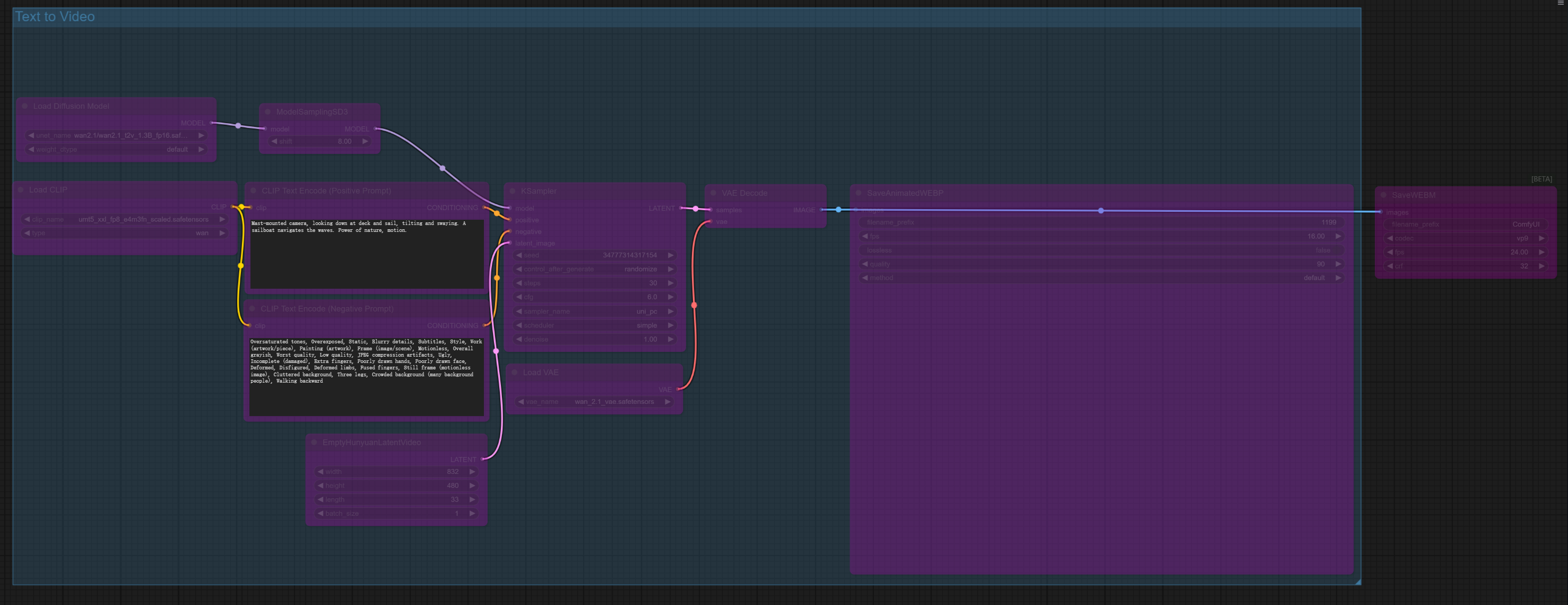

Primary Wan 2.1 Generation Method (disabled by default): Text-to-Video

- Inputs: Text prompt

- Best for: Creating videos from scratch using textual descriptions

- Characteristics:

  - Uses the Wan 2.1 1.3B model for faster generation

  - Creates 33-frame clips at 480P (832x480), which is about 2 seconds of video at Wan 2.1's 16 fps

  - Optimized for smooth motion in short clips
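The arithmetic below can help when planning clip length. It relates frame counts to duration and latent size. It is a sketch under assumptions that this workflow does not state:

- Wan 2.1 outputs at 16 fps.
- The latent has 16 channels.
- The VAE compresses time by 4x and height/width by 8x, so frame counts of the form 4k + 1 (such as 33 or 81) map cleanly onto latent frames.

The function names are illustrative and are not part of ComfyUI.

```python
# Sketch: relate Wan 2.1 frame counts to clip duration and latent size.
# Assumptions (not stated by this workflow): 16 fps output, 16 latent channels,
# 4x temporal and 8x spatial VAE compression, so valid lengths are 4k + 1.

FPS = 16              # assumed Wan 2.1 output frame rate
TEMPORAL_STRIDE = 4   # assumed VAE temporal compression
SPATIAL_STRIDE = 8    # assumed VAE spatial compression


def is_valid_length(frames: int) -> bool:
    """True if the frame count maps cleanly onto latent frames (4k + 1)."""
    return frames >= 1 and (frames - 1) % TEMPORAL_STRIDE == 0


def latent_frames(frames: int) -> int:
    """Number of latent frames for a pixel-space clip of `frames` frames."""
    return (frames - 1) // TEMPORAL_STRIDE + 1


def duration_seconds(frames: int, fps: int = FPS) -> float:
    """Playback length of the clip in seconds."""
    return frames / fps


def latent_shape(width: int, height: int, frames: int,
                 channels: int = 16, batch: int = 1) -> tuple:
    """(batch, channels, latent_frames, latent_height, latent_width)."""
    return (batch, channels, latent_frames(frames),
            height // SPATIAL_STRIDE, width // SPATIAL_STRIDE)


if __name__ == "__main__":
    for n in (33, 81):
        print(n, is_valid_length(n), latent_frames(n), round(duration_seconds(n), 2))
    print(latent_shape(832, 480, 33))
```

Under these assumptions, 33 frames is roughly 2 seconds and 81 frames roughly 5 seconds.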

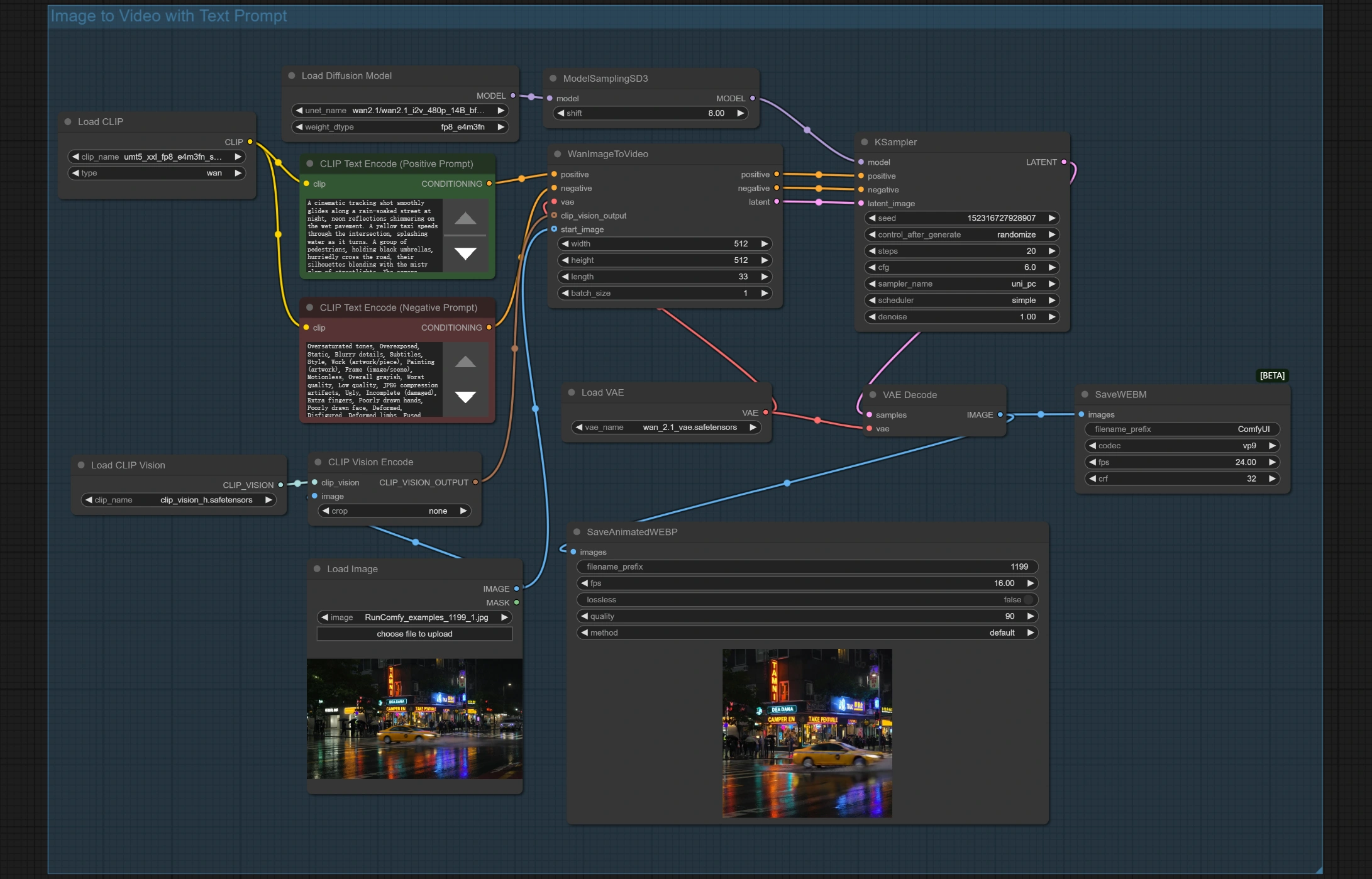

Advanced Wan 2.1 Method (enabled by default): Image-to-Video with Text Prompt

- Inputs: Base image + text prompt

- Best for: Animating still images while guiding motion with a prompt

- Characteristics:

  - Preserves visual elements of the input image

  - Allows text control over motion direction

  - Uses the Wan 2.1 14B model for higher fidelity

  - Creates 33-frame videos at 512x512 resolution
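If the base image's aspect ratio differs from the target resolution, cropping it beforehand lets you decide what stays in frame. The hypothetical helper below computes the largest centered crop that matches the target aspect ratio. It returns the box in the (left, upper, right, lower) order that Pillow's Image.crop expects. It is a pre-processing sketch, not part of this workflow's nodes.

```python
# Hypothetical pre-processing helper (not a ComfyUI node): compute the largest
# centered crop of a source image that matches the target aspect ratio, so a
# later resize to the target resolution does not stretch the subject.


def center_crop_box(src_w: int, src_h: int, dst_w: int, dst_h: int) -> tuple:
    """Return (left, upper, right, lower) for a centered, aspect-matched crop."""
    if src_w * dst_h > src_h * dst_w:
        # Source is wider than the target: trim the left and right edges.
        crop_w = src_h * dst_w // dst_h
        left = (src_w - crop_w) // 2
        return (left, 0, left + crop_w, src_h)
    # Source is taller than (or as wide as) the target: trim top and bottom.
    crop_h = src_w * dst_h // dst_w
    top = (src_h - crop_h) // 2
    return (0, top, src_w, top + crop_h)


if __name__ == "__main__":
    # A 1920x1080 landscape image cropped for the 512x512 I2V resolution.
    print(center_crop_box(1920, 1080, 512, 512))
```

The box can then be passed to an image library's crop call before resizing to 512x512 and loading the result in Load Image.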

Example Workflow:

- In CLIPTextEncode (Positive Prompt / Negative Prompt): Enter your scene description in the positive prompt (e.g., "a fox moving quickly in a beautiful winter landscape with trees and mountains during daytime, tracking camera") and anything you want to avoid in the negative prompt.

- In Load Image: Upload your base image.

- For further refinement (optional):

  - In KSampler: Adjust steps (default: 30) to balance quality against speed.

  - In ModelSamplingSD3: Modify the shift value (default in this workflow: 8). It shifts the noise schedule, and higher values spend more of the sampling steps at high noise levels.

- Click Queue Prompt to start the generation.

- Find your output preview in the SaveAnimatedWEBP node; the file is also saved to the ComfyUI output folder.
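The same steps can also be scripted against a running ComfyUI server. This sketch rests on a few assumptions:

- The workflow has been exported in API format. The menu entry's name differs between ComfyUI versions.
- The server listens on the default local address 127.0.0.1:8188.
- The graph is submitted by posting it to the server's /prompt endpoint.

The helper names are hypothetical, and node IDs depend on your exported file. Because the positive and negative prompts are both CLIPTextEncode nodes, inputs are overridden by node ID rather than by node type.

```python
import copy
import json
import urllib.request

# Assumed default address of a local ComfyUI server; adjust for your setup.
COMFY_URL = "http://127.0.0.1:8188/prompt"


def find_nodes(workflow: dict, class_type: str) -> list:
    """Return the IDs of all nodes with the given class_type, sorted as strings."""
    return sorted(nid for nid, node in workflow.items()
                  if node.get("class_type") == class_type)


def set_node_inputs(workflow: dict, node_id: str, **inputs) -> dict:
    """Return a deep copy of the workflow with one node's inputs overridden."""
    wf = copy.deepcopy(workflow)
    wf[node_id]["inputs"].update(inputs)
    return wf


def build_payload(workflow: dict) -> bytes:
    """Encode the JSON request body: {"prompt": <API-format workflow>}."""
    return json.dumps({"prompt": workflow}).encode("utf-8")


def queue_prompt(workflow: dict, url: str = COMFY_URL) -> dict:
    """POST the workflow to the server and return the parsed JSON response."""
    req = urllib.request.Request(
        url, data=build_payload(workflow),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())


if __name__ == "__main__":
    # Tiny stand-in for an exported API-format workflow (real files have many nodes).
    example = {"3": {"class_type": "KSampler", "inputs": {"steps": 20, "cfg": 6.0}}}
    sampler_id = find_nodes(example, "KSampler")[0]
    updated = set_node_inputs(example, sampler_id, steps=30)
    print(build_payload(updated).decode("utf-8"))
    # With a server running, queue_prompt(updated) would submit the job; the
    # JSON response normally includes a prompt_id.
```

To use it with the real workflow:

1. Load the exported JSON with json.load.
2. Look up the KSampler or CLIPTextEncode node IDs.
3. Override steps or text on the nodes you want to change.
4. Call queue_prompt.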

3.2 Parameter Reference for Wan 2.1

- KSampler:

  - steps: 20-30 (higher values improve quality but increase generation time)

  - cfg: 6.0 (controls prompt adherence strength)

  - scheduler: "simple" (determines the noise scheduling approach)

  - sampler_name: "uni_pc" (recommended sampler for Wan 2.1)

<p align="center"> <img src="https://cdn.runcomfy.net/workflow_assets/1199/readme03.webp" alt="Wan 2.1" width="350"/> </p>

- WanImageToVideo:

  - width/height: 512 (output resolution)

  - length: 33 (frames per video)

  - batch_size: 1 (number of videos per run)

- ModelSamplingSD3:

  - shift: 8 (shifts the sampling noise schedule; higher values spend more of the steps at high noise levels)

- EmptyHunyuanLatentVideo:

  - width/height: 832/480 (T2V output resolution)

  - length: 33 (frames per video)

  - batch_size: 1 (number of videos per run)

<p align="center"> <img src="https://cdn.runcomfy.net/workflow_assets/1199/readme04.webp" alt="Wan 2.1" width="350"/> </p>
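The sketch below illustrates what the ModelSamplingSD3 value does. ComfyUI exposes this value as a shift input. The sketch applies the flow-matching time shift sigma = shift * t / (1 + (shift - 1) * t) to evenly spaced timesteps t running from 1 (pure noise) down to 0. This follows the time-shift formula ComfyUI uses for flow-matching models. Treat the result as an approximation of the schedule, not the exact sigma list that the "simple" scheduler produces.

```python
# Illustration of a flow-matching time shift (assumed to match the formula
# ComfyUI applies via ModelSamplingSD3's shift); not the exact scheduler output.


def shift_sigma(t: float, shift: float) -> float:
    """Map a timestep t in [0, 1] to a shifted noise level.

    shift == 1 leaves t unchanged; larger shifts keep the noise level higher
    for more of the schedule.
    """
    if shift == 1.0:
        return t
    return shift * t / (1 + (shift - 1) * t)


def shifted_schedule(steps: int, shift: float) -> list:
    """Evenly spaced timesteps from 1 down to 0, passed through the shift."""
    return [shift_sigma(1 - i / steps, shift) for i in range(steps + 1)]


if __name__ == "__main__":
    for s in (1.0, 8.0):
        print(s, [round(x, 3) for x in shifted_schedule(4, s)])
```

With 4 steps, a shift of 8 turns the evenly spaced levels 1.0, 0.75, 0.5, 0.25 into roughly 1.0, 0.96, 0.89, 0.73. More of the denoising effort therefore goes to the high-noise stages, where overall structure and motion are decided.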

3.3 Advanced Optimization with Wan 2.1

- Memory Optimization:

  - Use the Wan 2.1 1.3B model for faster generation with lower VRAM requirements.

  - Reduce resolution (e.g., 512x320) for quicker processing.

  - Decrease frame count for shorter and faster renders.

- Quality Optimization:

  - Use the Wan 2.1 14B model for higher-quality output.

  - Increase KSampler steps to 30-40 for more refined results.

  - Use Image-to-Video with a high-quality base image for the best fidelity.
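One rough way to compare the memory and speed options above is to count the tokens the diffusion transformer processes. This sketch rests on three assumptions:

- The VAE compresses 8x spatially and 4x temporally.
- The transformer uses a (1, 2, 2) patch size.
- Self-attention cost grows with the square of the token count.

Real runtimes also depend on model size, hardware and the other layers, so use the numbers only for relative comparisons.

```python
# Rough cost proxy under stated assumptions (8x spatial / 4x temporal VAE,
# (1, 2, 2) transformer patches); for relative comparisons only.


def latent_tokens(width: int, height: int, frames: int,
                  spatial_stride: int = 8, temporal_stride: int = 4,
                  patch: tuple = (1, 2, 2)) -> int:
    """Number of transformer tokens for a clip of the given size."""
    lat_t = (frames - 1) // temporal_stride + 1
    lat_h = height // spatial_stride
    lat_w = width // spatial_stride
    pt, ph, pw = patch
    return (lat_t // pt) * (lat_h // ph) * (lat_w // pw)


def relative_attention_cost(cfg: tuple, baseline: tuple) -> float:
    """Ratio of self-attention work, assuming it scales with tokens squared.

    Both arguments are (width, height, frames) tuples.
    """
    return (latent_tokens(*cfg) / latent_tokens(*baseline)) ** 2


if __name__ == "__main__":
    base = (832, 480, 33)
    for cfg in [(512, 320, 33), (832, 480, 17), (512, 512, 33)]:
        print(cfg, latent_tokens(*cfg), round(relative_attention_cost(cfg, base), 3))
```

Under these assumptions, 832x480 at 33 frames gives 14,040 tokens and 512x320 gives 5,760. Attention work at 512x320 therefore drops to roughly a sixth.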

More Information

For additional details on Wan 2.1, visit the Wan-Video GitHub repository.

Credits

The Wan 2.1 model was developed by the Wan Team, and the ComfyUI integration was created by its respective developers. Full credit goes to these innovators for advancing AI-powered video generation.