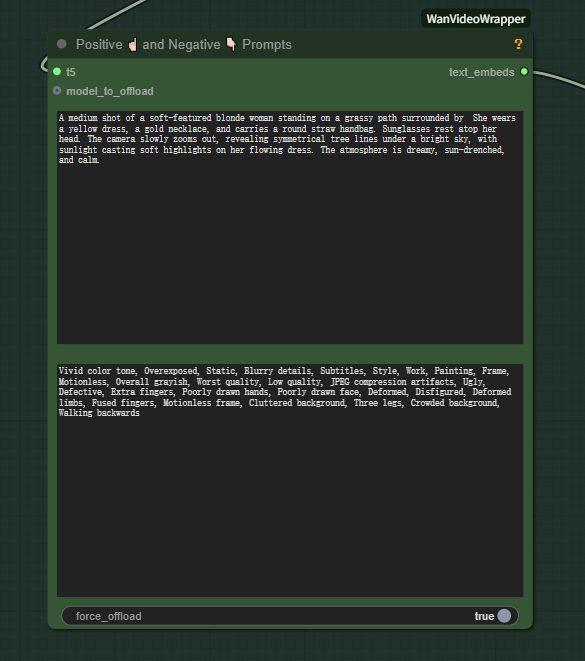

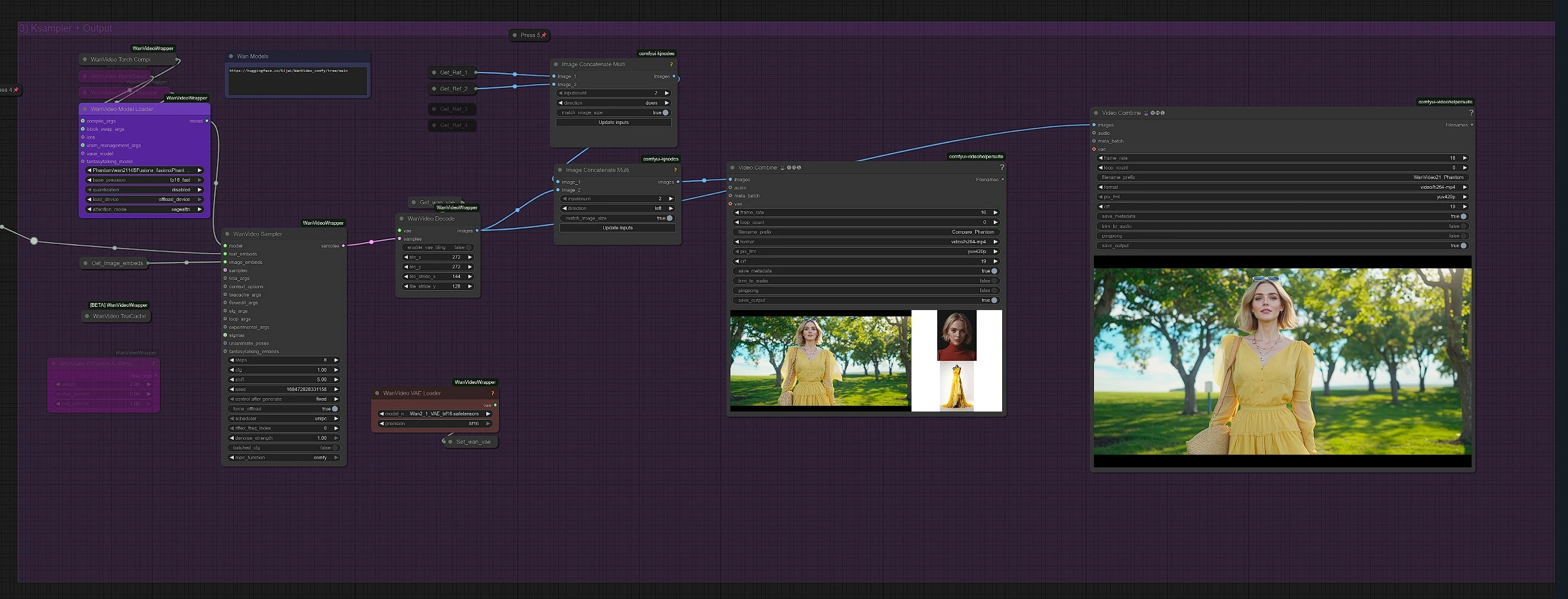

ComfyUI Phantom: Subject to Video

ComfyUI Phantom is a subject-to-video generation model integrated into the ComfyUI workflow environment. It produces high-quality, identity-consistent video from one or more reference images, guided by descriptive text prompts, all within the familiar ComfyUI interface.

Built upon advanced text-to-video and image-to-video architectures, ComfyUI Phantom specializes in generating human-centric motion while preserving subject identity. Through a unified joint text-image injection approach, it aligns the text and image inputs so that outputs stay expressive and frame-consistent and follow the structure and look of the provided references.

Why Use ComfyUI Phantom?

ComfyUI Phantom offers:

- Reference-Based Generation: Input one or more reference images to direct subject appearance in ComfyUI Phantom workflows

- Prompt + Image Control: Blend creative text descriptions with image fidelity using ComfyUI Phantom nodes

- Identity Preservation: ComfyUI Phantom maintains subject consistency across frames

- Multi-Subject Support: Generate videos with multiple subjects from reference inputs using ComfyUI Phantom

- ComfyUI Integration: Seamlessly integrates with existing ComfyUI workflows and custom nodes

- Ideal for Creators: Perfect for VTubers, stylized character creators, and narrative video artists using ComfyUI Phantom

Whether you're animating characters or generating reference-driven AI motion, ComfyUI Phantom gives you a flexible and powerful toolkit for visual storytelling within the ComfyUI ecosystem.

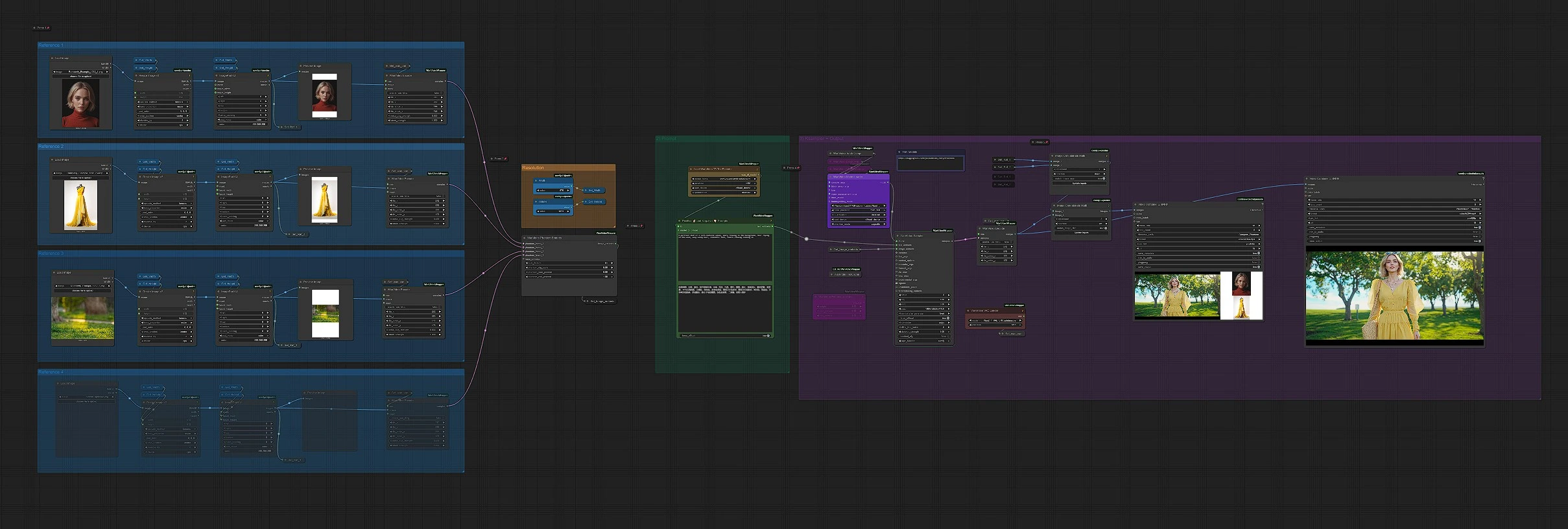

1 - References in ComfyUI Phantom

The first section handles reference uploading for your ComfyUI Phantom setup:

Load your reference images here in the ComfyUI Phantom workflow. You can upload up to 4 reference images, each in its own group. By default, 2 are enabled; you can enable 2 more by unmuting them.

If you enable extra references, also enable them in the Image Concatenate Multi node so that they appear in the comparison video output.

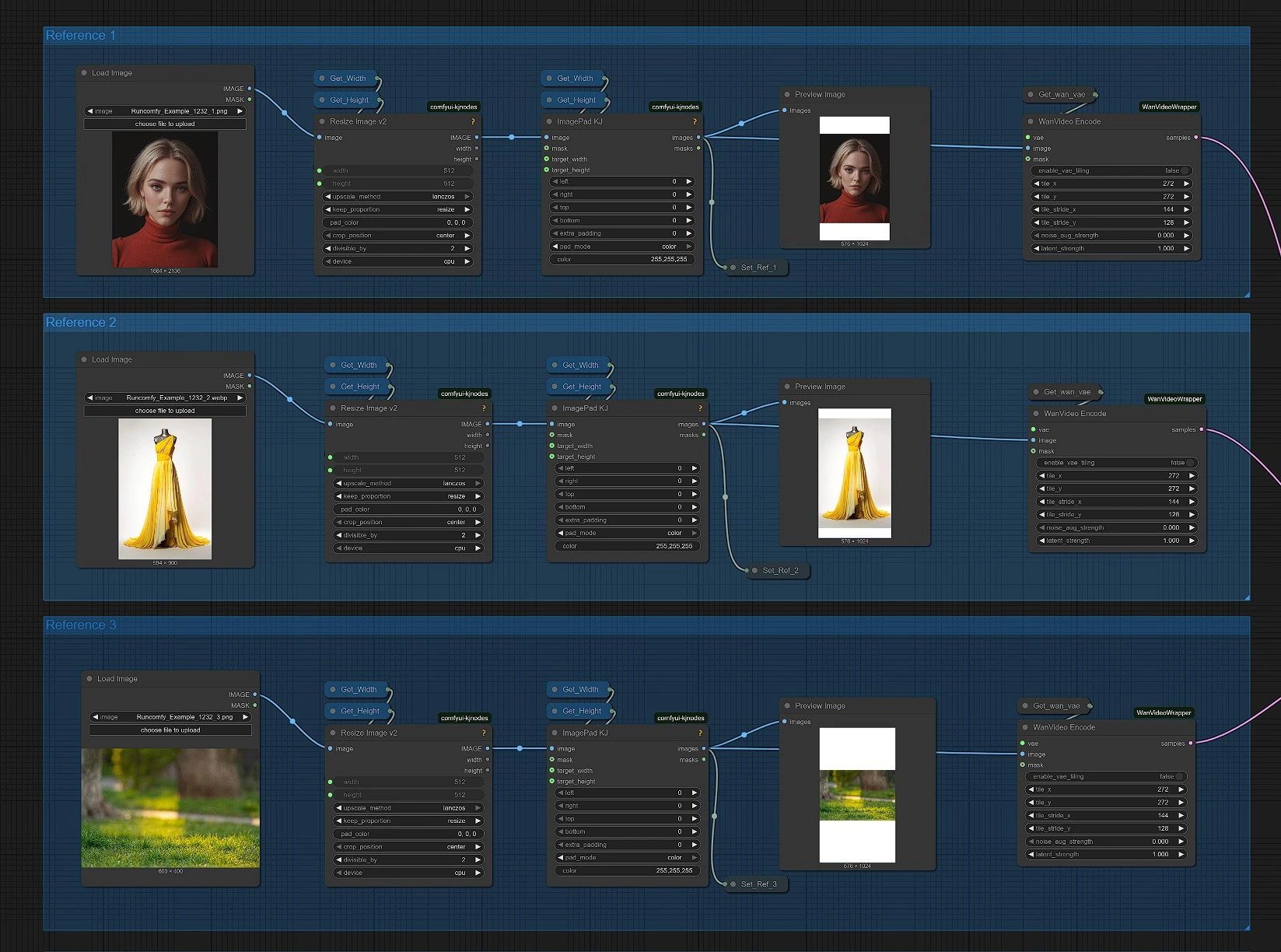

2 - Resolution and Duration Settings for ComfyUI Phantom

Enter a Wan 2.1 compatible resolution and the duration in frames in these ComfyUI Phantom nodes.
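As a rough aid for picking values, the sketch below snaps a requested size and frame count to nearby values. It assumes that Wan 2.1 based workflows expect width and height divisible by 16 and frame counts of the form 4n + 1; both rules are assumptions about the underlying model rather than something this workflow states, and the function names are hypothetical helpers, not ComfyUI nodes.

```python
def snap_dimension(value: int, multiple: int = 16) -> int:
    """Round a width or height to the nearest multiple (ties round up).

    The default multiple of 16 is an assumption about Wan 2.1.
    """
    snapped = (value + multiple // 2) // multiple * multiple
    return max(multiple, snapped)


def snap_frame_count(frames: int) -> int:
    """Round a frame count to the nearest value of the form 4n + 1.

    The 4n + 1 rule is an assumption about Wan 2.1.
    """
    n = (frames - 1 + 2) // 4
    return max(1, 4 * n + 1)


if __name__ == "__main__":
    width, height, frames = 830, 475, 80
    print(snap_dimension(width), snap_dimension(height), snap_frame_count(frames))
```

With these assumptions, a request for 830x475 at 80 frames maps to 832x480 at 81 frames. If the nodes in your workflow validate their inputs differently, follow the nodes.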

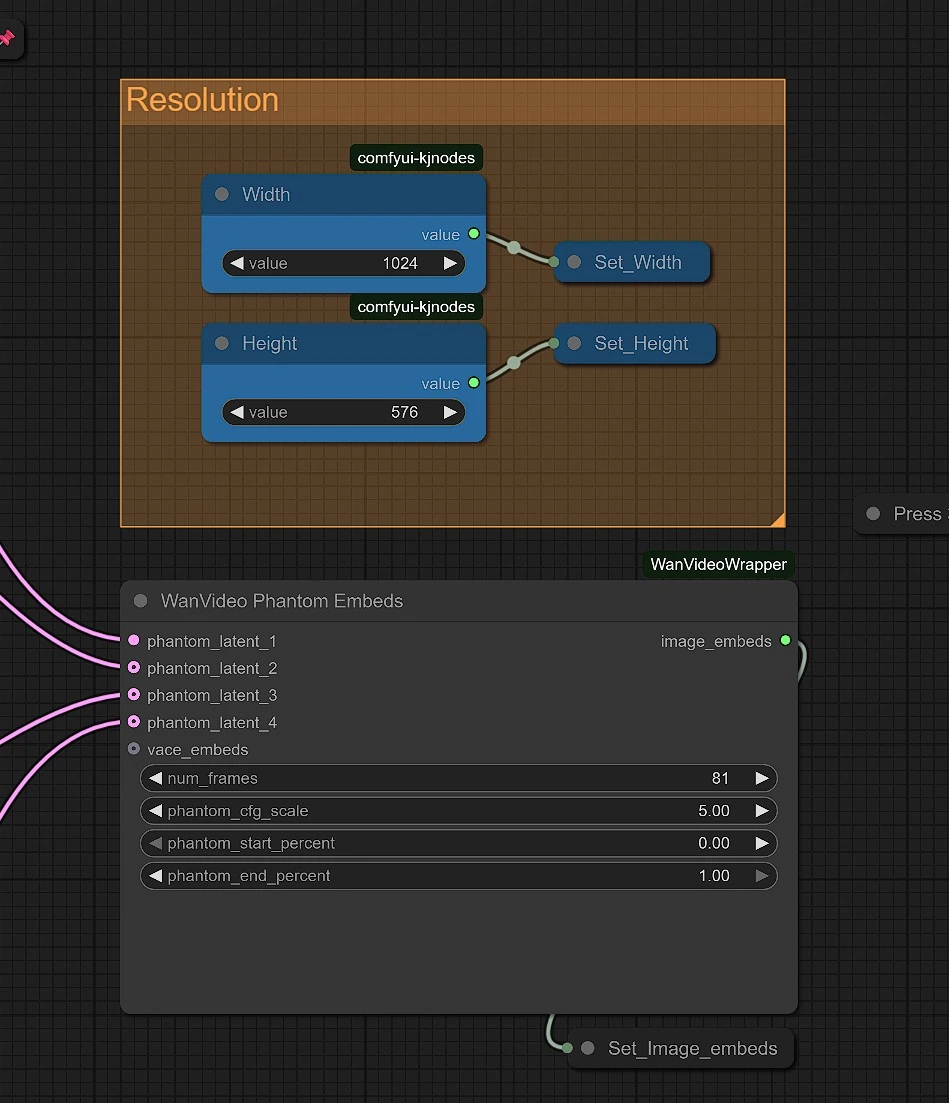

3 - Prompts Configuration in ComfyUI Phantom

Enter your prompts for ComfyUI Phantom video generation:

- Positive Prompt: Describe what you want Phantom to generate; the description should match the content of the uploaded reference images

- Negative Prompt: Describe what Phantom should avoid (e.g., "blurry, low quality, artifacts")

4 - KSampler & Output in ComfyUI Phantom

- Sampler Settings: Choose sampler type (e.g., DPM++, Euler, etc.), steps, and seed for Phantom generation

- Output: The generated video appears in the Phantom output viewer and is saved in the output folder

- Load ComfyUI Phantom Model: In the Phantom Model selector node, you can choose between the 1.3B and the 14B ComfyUI Phantom model

The rendered video is saved in the output folder of your ComfyUI installation.

ComfyUI Phantom Workflow Benefits

ComfyUI Phantom provides several advantages for video generation:

- Node-Based Interface: Leverage ComfyUI's intuitive node system for ComfyUI Phantom workflows

- Workflow Customization: Modify and extend ComfyUI Phantom workflows to suit specific needs

- Parameter Control: Fine-tune ComfyUI Phantom generation with precise parameter adjustments

- Batch Processing: Process multiple reference images efficiently with ComfyUI Phantom

- Community Support: Access shared ComfyUI Phantom workflows and community modifications

Acknowledgement

ComfyUI Phantom is built on top of the Wan 2.1 video generation model using the Wan Video Wrapper node system in ComfyUI. The core nodes and architecture were developed by kijai, enabling reference-based, ID-preserving video synthesis within ComfyUI. This ComfyUI Phantom workflow would not be possible without the foundational work behind Wan 2.1 and the custom ComfyUI tools that power it.

ComfyUI Phantom Model Information

- Source: Original Phantom Repo

- ComfyUI Implementation: https://huggingface.co/Kijai/WanVideo_comfy/tree/main

- Model Used in Workflow: https://civitai.com/models/1651125?modelVersionId=1878555

- Architecture: Multi-Input Reference for ComfyUI Phantom

- Model Location: comfyui/models/diffusion_models

- ComfyUI Compatibility: Fully integrated with ComfyUI workflow system
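To confirm that a downloaded model sits in the model location listed above, a small check like the following may help. It is only a sketch: the `comfyui` root path is a placeholder for your own installation, the function name is hypothetical, and filtering on the `.safetensors` extension is an assumption about how the model file is packaged.

```python
from pathlib import Path


def list_diffusion_models(comfyui_root: Path) -> list[str]:
    """Return sorted model file names found under models/diffusion_models."""
    model_dir = comfyui_root / "models" / "diffusion_models"
    if not model_dir.is_dir():
        return []
    return sorted(
        p.name
        for p in model_dir.iterdir()
        # Assumption: model files use the .safetensors extension.
        if p.is_file() and p.suffix == ".safetensors"
    )


if __name__ == "__main__":
    # "comfyui" is a placeholder for your installation root.
    for name in list_diffusion_models(Path("comfyui")):
        print(name)
```

An empty result means that the folder is missing or holds no matching files, in which case the model selector node is unlikely to list the Phantom model.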