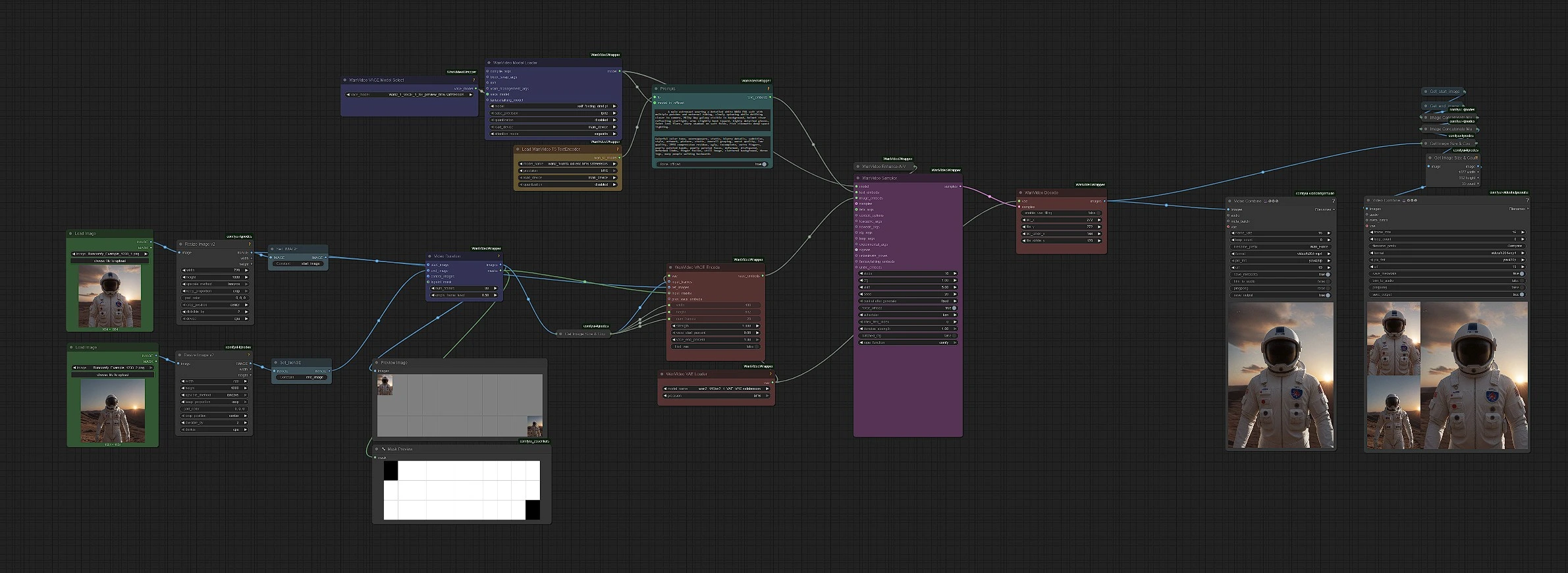

Self Forcing: Autoregressive Keyframe-to-Video Generation

Self Forcing is a keyframe-driven video generation model. It generates the motion between a start keyframe and an end keyframe, guided by a descriptive text prompt, to produce smooth, high-quality video.

Built on an autoregressive video diffusion architecture with KV caching, Self Forcing generates temporally consistent, identity-preserving motion across frames. Conditioning jointly on keyframes and text allows fluid transitions while maintaining subject structure and style throughout the generated video.

Why Use Self Forcing?

Self Forcing offers:

- Keyframe-Based Generation: start and end reference images control appearance and motion

- Prompt + Keyframe Control: creative text descriptions are blended with the reference structure

- Autoregressive Motion: transitions between frames are smooth and temporally consistent

- Identity Preservation: subject fidelity is maintained across generated sequences

- Streamlined Video Creation: well suited to character-driven storytelling, cinematic animation, and concept video synthesis

Whether you're generating animations, cinematic sequences, or identity-consistent AI videos, Self Forcing gives you creative control while keeping motion smooth and realistic.

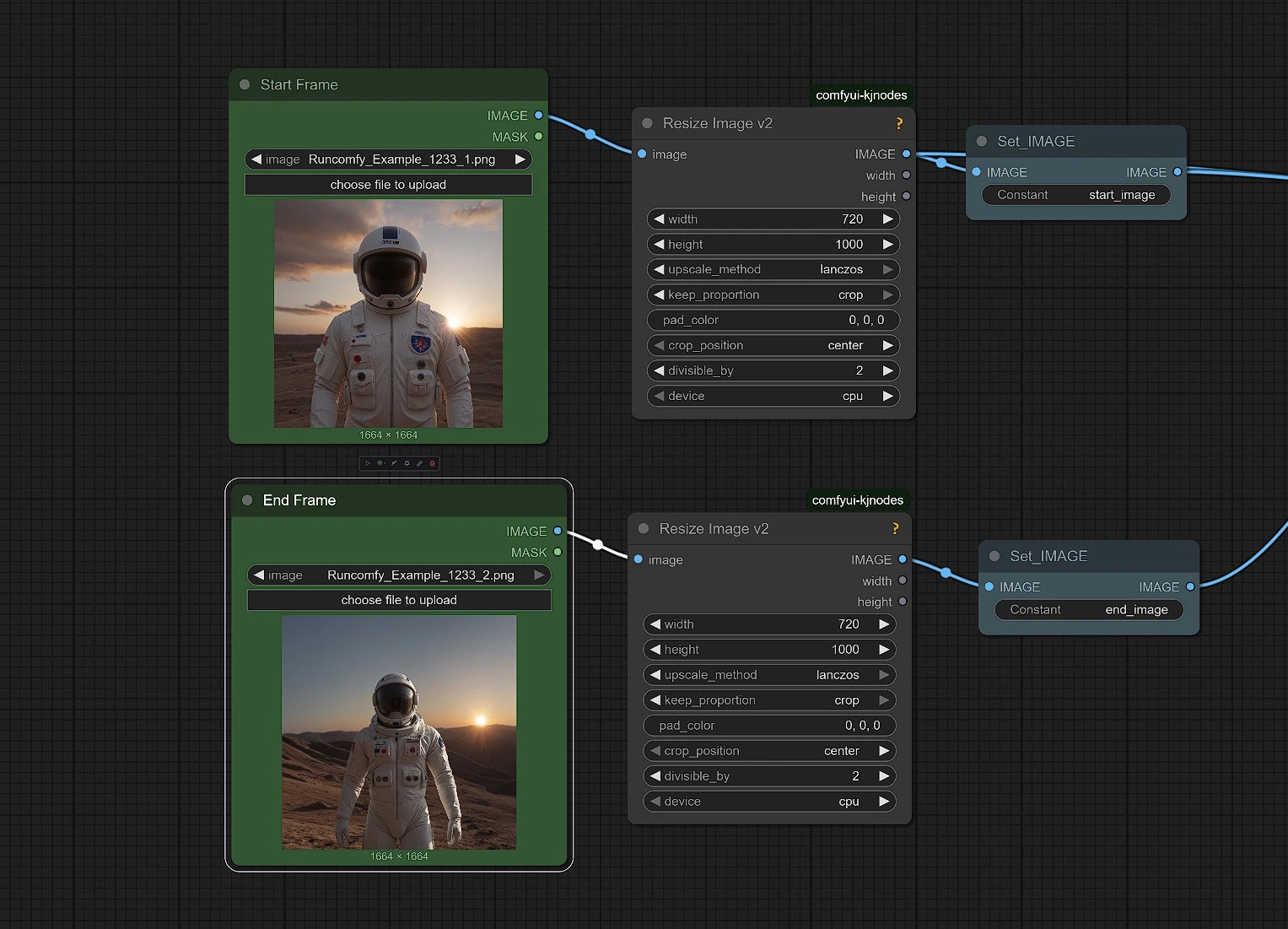

Input Images

In this section, upload your Start Keyframe and End Keyframe images. These two images define how the generated video begins and ends.

- Upload both reference images using the provided Load Image nodes.

- Use the optional Resizing and Cropping nodes to give both images the same aspect ratio and a matching alignment.

- Properly aligned and well-cropped keyframes improve motion consistency throughout the generated sequence.
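If you prefer to prepare keyframes before loading them, the crop region can be worked out by hand. The sketch below computes the largest centered crop box that matches a target aspect ratio. The function name `center_crop_box` and the 832x480 target are assumptions made for illustration; they are not values taken from the workflow.

```python
def center_crop_box(width, height, target_width, target_height):
    """Return (left, top, right, bottom) of the largest centered box
    whose aspect ratio matches target_width:target_height."""
    # Compare aspect ratios by cross-multiplication to avoid float rounding.
    if width * target_height > height * target_width:
        # Image is too wide: keep the full height and trim both sides.
        new_width = height * target_width // target_height
        left = (width - new_width) // 2
        return (left, 0, left + new_width, height)
    # Image is too tall (or already matches): keep the full width.
    new_height = width * target_height // target_width
    top = (height - new_height) // 2
    return (0, top, width, top + new_height)


# Hypothetical example: a 1920x1080 start keyframe cropped toward 832x480.
print(center_crop_box(1920, 1080, 832, 480))
```

Applying the same target ratio to both the start and end keyframe keeps the two images aligned before they reach the Load Image nodes.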

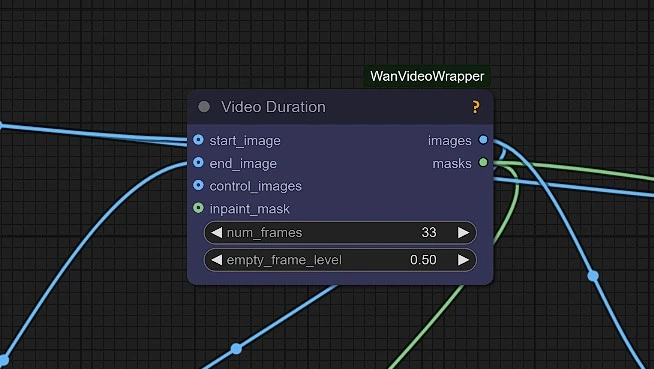

Video Duration

Set the total number of frames the video will contain.

- Higher frame counts allow more gradual, fluid transitions between the keyframes.

- Lower frame counts produce quicker transitions.

- Typical range: 16–48 frames, depending on desired length and motion complexity.
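To relate a frame count to clip length, divide by the playback frame rate. The short sketch below does this for the typical range above. The 16 fps default is an assumption chosen for illustration; check the frame rate set in your own video output node.

```python
def clip_seconds(num_frames, fps=16):
    """Length of a clip in seconds for a given frame count.

    fps=16 is an assumed playback rate, not a value stated by the workflow.
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    return num_frames / fps


for frames in (16, 32, 48):
    print(f"{frames} frames -> {clip_seconds(frames):.1f} s at 16 fps")
```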

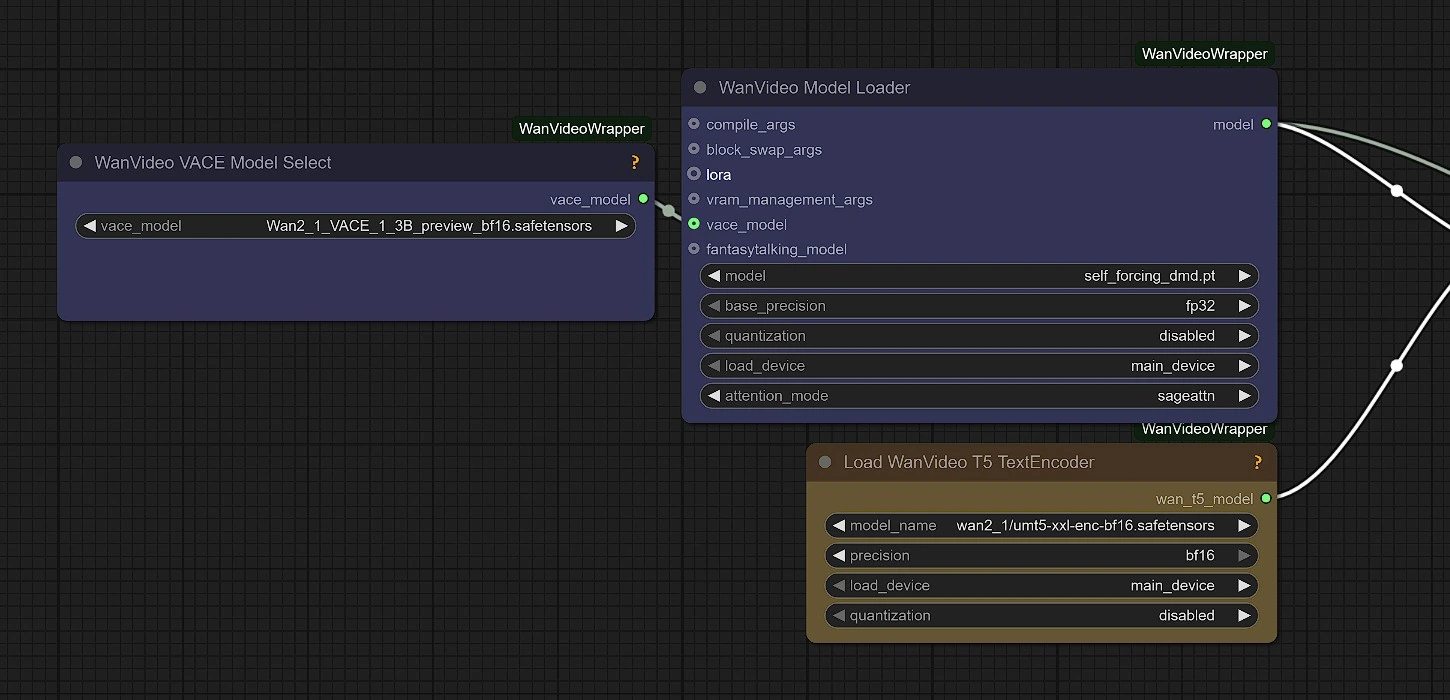

Model

This group loads the Self Forcing autoregressive video diffusion model. The workflow automatically selects the correct model version for you.

- The model is built on autoregressive rollout with KV caching.

- This design supports stable, temporally coherent motion generation.

- It allows real-time inference on high-end GPUs such as the RTX 4090.
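As a rough intuition for autoregressive rollout with KV caching, the toy sketch below produces each new frame by attending over keys and values cached from earlier frames, then compares the result with a version that recomputes everything at every step. It is not the Self Forcing architecture: the `project` and `attend` helpers, the fixed scales, and the two-number "frames" are hypothetical stand-ins chosen only for illustration.

```python
import math


def project(frame, scale):
    # Stand-in for a learned key or value projection (hypothetical fixed scale).
    return [scale * x for x in frame]


def attend(query, keys, values):
    # Softmax attention of a single query over all available keys.
    scores = [sum(q * k for q, k in zip(query, key)) for key in keys]
    peak = max(scores)
    weights = [math.exp(s - peak) for s in scores]
    total = sum(weights)
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) / total
            for i in range(dim)]


def rollout_with_cache(first_frame, num_frames):
    frames = [first_frame]
    key_cache = [project(first_frame, 0.5)]
    value_cache = [project(first_frame, 0.9)]
    while len(frames) < num_frames:
        new_frame = attend(frames[-1], key_cache, value_cache)
        frames.append(new_frame)
        # Only the newest frame is projected; older entries are reused.
        key_cache.append(project(new_frame, 0.5))
        value_cache.append(project(new_frame, 0.9))
    return frames


def rollout_without_cache(first_frame, num_frames):
    frames = [first_frame]
    while len(frames) < num_frames:
        # Every past frame is re-projected at every step.
        keys = [project(f, 0.5) for f in frames]
        values = [project(f, 0.9) for f in frames]
        frames.append(attend(frames[-1], keys, values))
    return frames


cached = rollout_with_cache([1.0, 0.5], 5)
print(cached == rollout_without_cache([1.0, 0.5], 5))
```

Both rollouts yield the same frames; the cached version simply avoids repeating the per-frame projections, which is the kind of saving that makes fast frame-by-frame generation practical.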

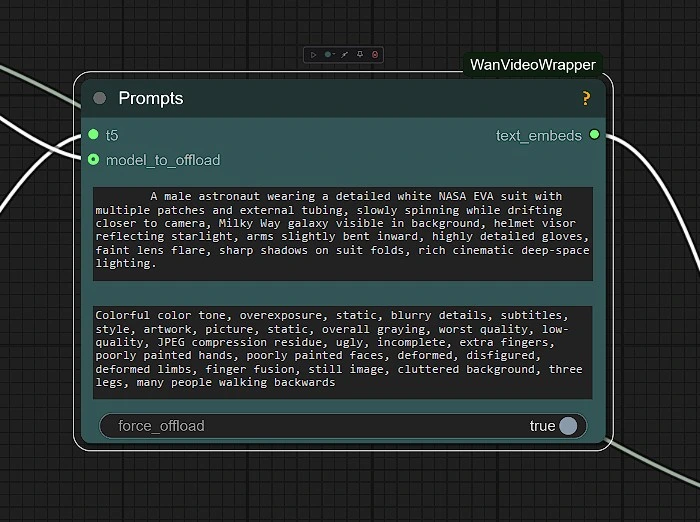

Prompts

In this section, enter the Text Prompt that guides generation.

- Combine prompts with your keyframes to influence the style, background, or motion context.

- Use clear, descriptive language to get the most control over the result.

- Negative prompts can also be used to suppress unwanted elements.

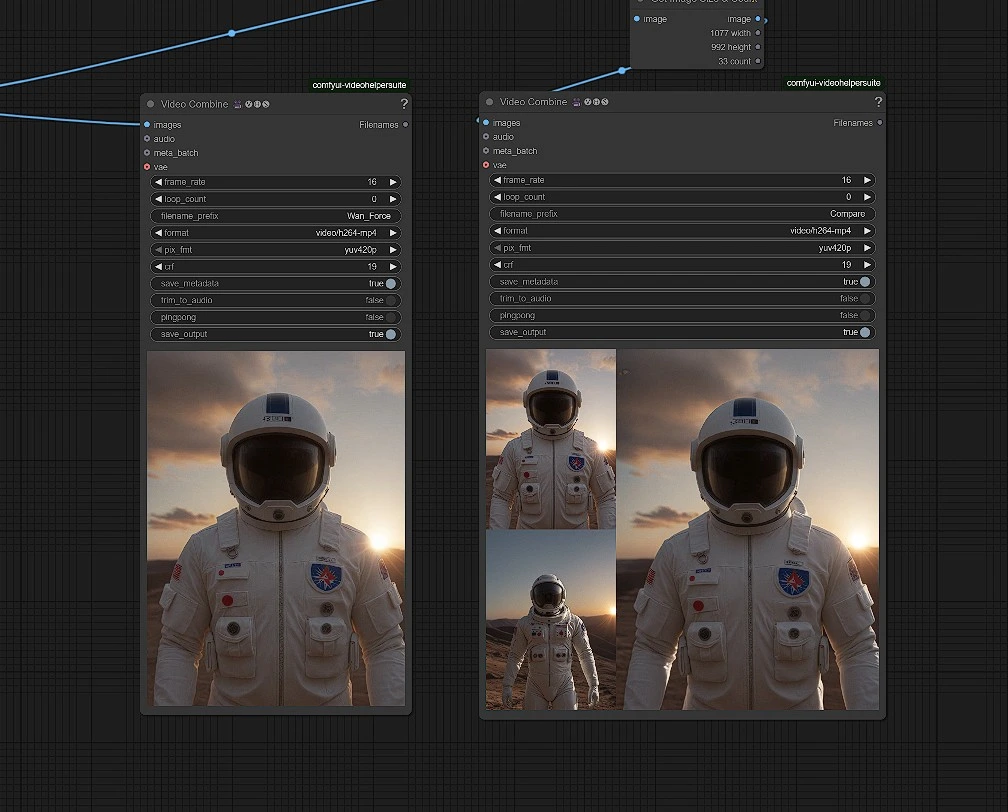

Outputs

Once Self Forcing generation is complete:

- Your Self Forcing video will be saved automatically in the ComfyUI > output folder inside your ComfyUI directory.

- Self Forcing files are stored as video clips (MP4 or image sequences, depending on configuration).
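To pick up the latest results from a script, you can list the newest files in the output folder. The sketch below is one possible approach; the helper name `newest_outputs`, the suffix list, and the example path `ComfyUI/output` are assumptions, so adjust them to match your installation and output configuration.

```python
from pathlib import Path

# Assumed file types: MP4 clips, plus PNG frames for image sequences.
OUTPUT_SUFFIXES = {".mp4", ".png"}


def newest_outputs(output_dir, limit=5):
    """Return up to `limit` output files, most recently modified first."""
    folder = Path(output_dir)
    if not folder.is_dir():
        return []
    files = [
        path for path in folder.rglob("*")
        if path.is_file() and path.suffix.lower() in OUTPUT_SUFFIXES
    ]
    files.sort(key=lambda path: path.stat().st_mtime, reverse=True)
    return files[:limit]


# Hypothetical location; prints nothing if the folder does not exist.
for path in newest_outputs("ComfyUI/output"):
    print(path)
```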

Acknowledgement

This workflow uses the Self Forcing model developed by guandeh. It integrates the Wan Video Wrapper nodes by kijai to enable video generation inside ComfyUI. Full credit goes to both authors for the original model development and integration work.

GitHub Repository: https://github.com/guandeh17/Self-Forcing