Create photo-based, speech-aligned videos with natural motion


Generate high-quality videos from text prompts using Luma Ray 2.

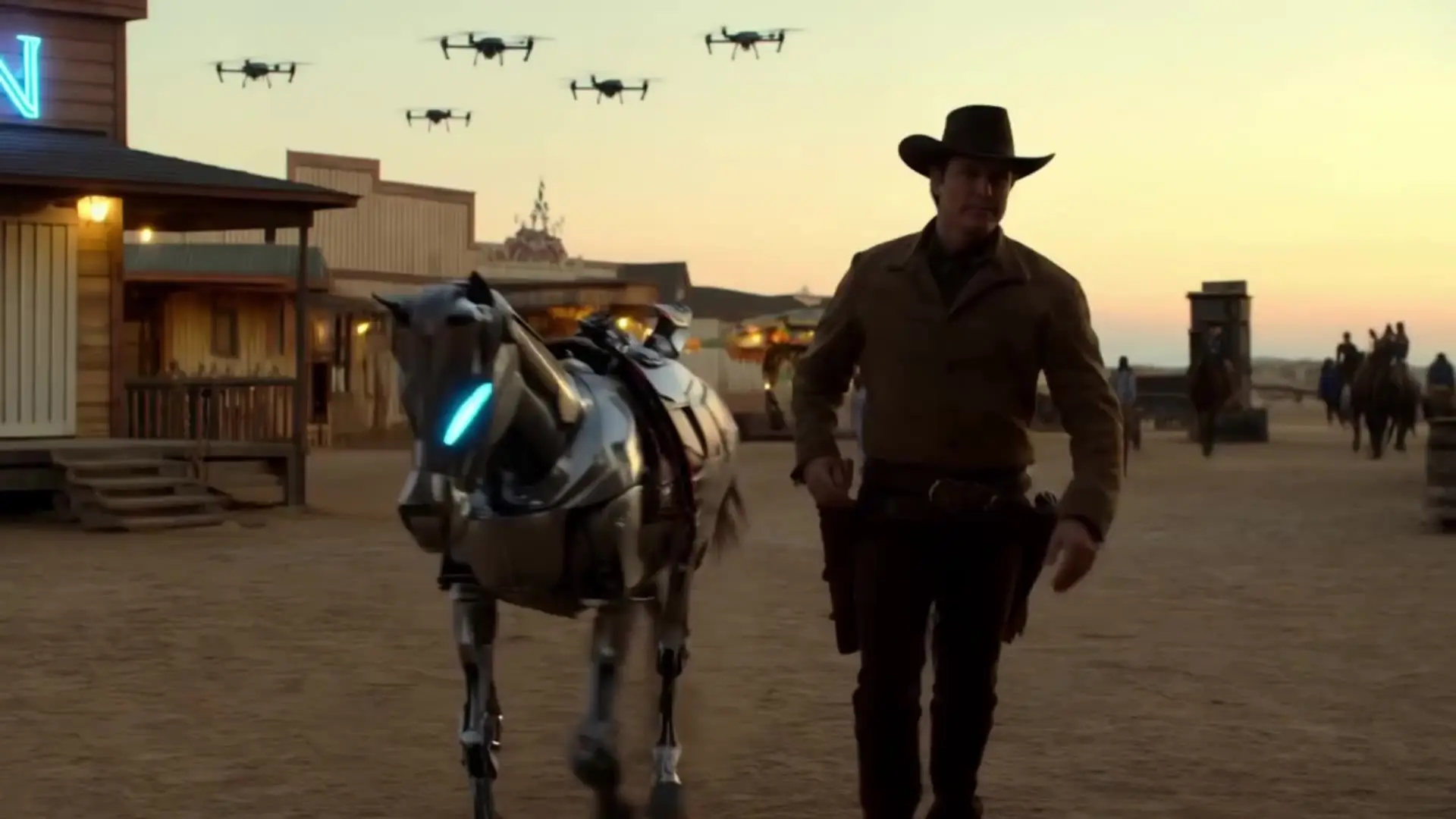

Features smooth scene transitions, natural cuts, and consistent motion.

Generate cinematic shots guided by reference images with unified control and realistic motion.

Generate cinematic videos from text prompts with Seedance 1.0.

Animate images into lifelike videos with smooth motion and visual precision for creators.

One-to-All Animation currently supports video-to-video outputs up to 720p, with optional 580p and 480p modes for faster generation or lower-compute environments. The 14B variant may offer higher output resolutions, while the 1.3B model is typically capped to preserve temporal coherence and consistent identity.

Yes. In One-to-All Animation video-to-video generation, prompts are typically limited to about 512 tokens, and you can upload only one reference image plus one driving video (pose sequence) at a time. For performance and memory reasons, the 1.3B variant does not natively support multiple ControlNet or IP-Adapter-style inputs.
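These limits can be checked on the client before a job is submitted. The sketch below is a hypothetical validator: `AnimationRequest`, `validate`, and the constants are illustrative names, the 512-token figure comes from the text above, treating one reference image and one driving video as required is an assumption, and the whitespace word count is only a rough stand-in for the model's real tokenizer.

```python
from dataclasses import dataclass, field
from typing import List

# Limits taken from the description above (assumed; confirm on the model page).
MAX_PROMPT_TOKENS = 512
MAX_REFERENCE_IMAGES = 1
MAX_DRIVING_VIDEOS = 1


@dataclass
class AnimationRequest:
    """Hypothetical container for one video-to-video job."""
    prompt: str
    reference_images: List[str] = field(default_factory=list)
    driving_videos: List[str] = field(default_factory=list)


def approx_token_count(text: str) -> int:
    # Whitespace split is only a rough proxy: real tokenizers usually
    # produce more tokens than there are words.
    return len(text.split())


def validate(req: AnimationRequest) -> List[str]:
    """Return human-readable problems; an empty list means the request looks valid."""
    problems = []
    if approx_token_count(req.prompt) > MAX_PROMPT_TOKENS:
        problems.append("prompt likely exceeds ~512 tokens")
    if not req.reference_images:
        problems.append("missing reference image")
    elif len(req.reference_images) > MAX_REFERENCE_IMAGES:
        problems.append("only one reference image is allowed")
    if not req.driving_videos:
        problems.append("missing driving video")
    elif len(req.driving_videos) > MAX_DRIVING_VIDEOS:
        problems.append("only one driving video is allowed")
    return problems


print(validate(AnimationRequest("a dancer spins on stage", ["ref.png"], ["pose.mp4"])))
# []
```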

After evaluating results in the RunComfy Playground, developers can move One-to-All Animation video-to-video pipelines to production through the RunComfy API. The API mirrors the playground parameters, including the prompt, reference image, and driving video fields. Generate an API key on an account with an available USD balance, then call the REST endpoint documented on the RunComfy Developer Portal to automate jobs or integrate them into larger workflows.
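A minimal sketch of assembling such a call with Python's standard library. The endpoint URL is a placeholder, and bearer-token authentication and the JSON field names (`prompt`, `reference_image_url`, `driving_video_url`) are hypothetical; take the real values from the RunComfy Developer Portal. The function only builds the request, so nothing is sent until you pass it to `urlopen` yourself.

```python
import json
import urllib.request

# Placeholder only: copy the real endpoint URL from the RunComfy Developer Portal.
ENDPOINT = "https://example.invalid/one-to-all-animation"


def build_request(api_key: str, prompt: str, reference_url: str,
                  driving_video_url: str) -> urllib.request.Request:
    """Build (but do not send) a POST request mirroring the playground fields.

    The JSON field names and the Bearer auth header are assumptions.
    """
    payload = {
        "prompt": prompt,
        "reference_image_url": reference_url,
        "driving_video_url": driving_video_url,
    }
    return urllib.request.Request(
        ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_request("YOUR_API_KEY", "a dancer spins on stage",
                    "https://example.invalid/ref.png",
                    "https://example.invalid/pose.mp4")
# To submit: urllib.request.urlopen(req), then parse the JSON response body.
```

Keeping request construction separate from sending makes it easy to log or unit-test the exact payload before any balance is spent.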

One-to-All Animation stands out for its alignment-free motion transfer, which allows arbitrary layouts between the reference image and the driving sequence. In video-to-video animation it excels at identity retention and stable long-sequence generation, and it tends to outperform text-driven generators such as Seedance when the source and target poses differ significantly.

The One-to-All Animation model uses hybrid reference fusion attention and an appearance-robust pose decoder to separate identity from motion dynamically. In video-to-video mode, this ensures the character’s key facial and costume details remain coherent, even when the driving video introduces new or complex poses.
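The general idea of fusing reference appearance into every frame can be illustrated with a generic "reference attention" pattern: each frame's tokens attend over their own tokens plus the reference image's tokens. This plain-Python sketch is an assumption-level illustration, not One-to-All Animation's actual hybrid fusion layer; learned projections, multiple heads, and the pose decoder are all omitted.

```python
import math
from typing import List

Vec = List[float]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Vec, b: Vec) -> float:
    return sum(x * y for x, y in zip(a, b))


def attention(queries: List[Vec], keys: List[Vec], values: List[Vec]) -> List[Vec]:
    """Single-head scaled dot-product attention on plain Python lists."""
    d = len(queries[0])
    out = []
    for q in queries:
        weights = softmax([dot(q, k) / math.sqrt(d) for k in keys])
        out.append([sum(w * v[j] for w, v in zip(weights, values))
                    for j in range(len(values[0]))])
    return out


def reference_fused_attention(frame_tokens: List[Vec],
                              reference_tokens: List[Vec]) -> List[Vec]:
    """Frame tokens query the concatenation of frame and reference tokens.

    Appending reference tokens to the keys/values lets appearance information
    flow into every frame while the number of output tokens stays the same.
    Projections W_q, W_k, W_v are treated as identity to keep the sketch short.
    """
    kv = frame_tokens + reference_tokens
    return attention(frame_tokens, kv, kv)


frames = [[1.0, 0.0], [0.0, 1.0]]  # two toy frame tokens (dim 2)
reference = [[1.0, 1.0]]           # one toy reference-image token
print(reference_fused_attention(frames, reference))
```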

One-to-All Animation supports both stylized and photorealistic output; the result follows the style of the reference image. For instance, a stylized 2D character reference in a video-to-video workflow retains its drawn characteristics throughout motion transfer, while a photorealistic reference yields more lifelike results. The model is optimized for cross-style pose replication without misalignment artifacts.

The 1.3B version of One-to-All Animation targets accessibility and speed while maintaining moderate quality for video-to-video tasks. The 14B model supports sharper textures and higher resolutions (up to or beyond 1080p in some deployments), but it requires more compute and memory. For lightweight production pipelines, most developers use the 1.3B variant.
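A rough sense of the memory gap between the two variants comes from weight storage alone: parameter count times bytes per parameter. The arithmetic below assumes fp16/bf16 weights (2 bytes each) and ignores activations, encoders, and runtime overhead, so real peak usage will be higher than these figures.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Memory for model weights alone, in GB (1 GB = 1e9 bytes).

    bytes_per_param=2 corresponds to fp16/bf16; use 4 for fp32.
    """
    return num_params * bytes_per_param / 1e9


for name, params in [("1.3B", 1.3e9), ("14B", 14e9)]:
    print(f"{name}: ~{weight_memory_gb(params):.1f} GB of weights in fp16")
# 1.3B: ~2.6 GB of weights in fp16
# 14B: ~28.0 GB of weights in fp16
```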

Yes, commercial usage of One-to-All Animation video-to-video outputs is generally permitted through licensed deployment on approved platforms like Fal.ai and RunComfy. However, you should review the specific license terms on the model’s official Hugging Face or Fal.ai page to verify rights for derivative content or resale.

The One-to-All Animation model’s alignment-free pipeline tolerates different aspect ratios between the reference image and the driving video. It normalizes poses spatially, which keeps motion aligned with minimal distortion, although extremely wide or tall ratios may slightly reduce compositional fidelity.
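One common way to normalize poses across aspect ratios is to fit the driving skeleton's bounding box into a target box on the reference image with a single uniform scale, then center it. The sketch below assumes 2D keypoints given as `(x, y)` tuples and a hypothetical `target_box`; it illustrates the idea and is not the model's documented procedure.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1)


def bbox(points: List[Point]) -> Box:
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return min(xs), min(ys), max(xs), max(ys)


def normalize_pose(driving_points: List[Point], target_box: Box) -> List[Point]:
    """Map driving-pose keypoints into target_box with one uniform scale.

    A single scale factor keeps the skeleton's proportions intact when the
    two aspect ratios differ; the scaled skeleton is centered in the box.
    """
    sx0, sy0, sx1, sy1 = bbox(driving_points)
    tx0, ty0, tx1, ty1 = target_box
    src_w, src_h = max(sx1 - sx0, 1e-6), max(sy1 - sy0, 1e-6)  # avoid divide-by-zero
    scale = min((tx1 - tx0) / src_w, (ty1 - ty0) / src_h)
    # Offsets that center the scaled skeleton inside the target box.
    off_x = tx0 + ((tx1 - tx0) - src_w * scale) / 2
    off_y = ty0 + ((ty1 - ty0) - src_h * scale) / 2
    return [((x - sx0) * scale + off_x, (y - sy0) * scale + off_y)
            for x, y in driving_points]


skeleton = [(0, 0), (10, 20)]  # toy driving keypoints, taller than wide
print(normalize_pose(skeleton, (0, 0, 100, 100)))
# [(25.0, 0.0), (75.0, 100.0)]
```

Because the scale is uniform, a tall skeleton placed in a wide frame leaves empty margins at the sides, which is one way extreme aspect ratios can cost some compositional fidelity.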

One-to-All Animation introduces a token replacement mechanism that stabilizes long video-to-video sequences by progressively updating temporal tokens rather than re-encoding each frame independently. The result is less flicker and smoother transitions across complex motion arcs, with character details retained.
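The exact replacement scheme is not described here, but a common pattern for long sequences is overlapping-window generation: the leading slots of each new window are replaced by the last tokens already produced, so generation continues from existing context. The sketch below assumes a hypothetical `generate_window(context, n_new)` model call and uses floats as stand-ins for latent tokens.

```python
from typing import Callable, List

Token = float  # stand-in for a latent video token


def generate_long_sequence(
    total_frames: int,
    window: int,
    overlap: int,
    generate_window: Callable[[List[Token], int], List[Token]],
) -> List[Token]:
    """Produce `total_frames` tokens in overlapping windows.

    For every window after the first, the leading `overlap` slots are replaced
    by the last `overlap` tokens already generated, so each window continues
    from existing context instead of starting from scratch.
    """
    if not 0 <= overlap < window:
        raise ValueError("need 0 <= overlap < window")
    output: List[Token] = []
    while len(output) < total_frames:
        # Carried-over context: the tail of what has been generated so far.
        context = output[-overlap:] if output and overlap else []
        n_new = min(window - len(context), total_frames - len(output))
        new_tokens = generate_window(context, n_new)
        if len(new_tokens) != n_new:
            raise ValueError("generate_window returned the wrong number of tokens")
        output.extend(new_tokens)
    return output


# Toy stand-in for the model: continue counting from the last context token.
def toy_model(context: List[Token], n_new: int) -> List[Token]:
    start = context[-1] if context else 0.0
    return [start + i + 1 for i in range(n_new)]


print(generate_long_sequence(10, window=4, overlap=2, generate_window=toy_model))
# [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 10.0]
```

With `overlap=0` every window starts without context, so the toy output restarts at 1.0 each window; that jump is the discontinuity that carrying tokens forward is meant to avoid.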