1. What is the ComfyUI Uni3C Workflow?

The Uni3C workflow integrates the advanced Uni3C (Unifying Precisely 3D-Enhanced Camera and Human Motion Controls) model into the ComfyUI environment. Developed by DAMO Academy (Alibaba Group), Uni3C addresses the fundamental challenge of controllable video generation by unifying camera trajectory control and human motion control within a single 3D-enhanced framework.

Built on the Wan2.1 video diffusion transformer foundation model, Uni3C introduces PCDController, a plug-and-play control module that uses point clouds unprojected from monocular depth estimation. This gives Uni3C precise camera control while preserving the generative capabilities of large-scale video diffusion models. Uni3C also employs SMPL-X character models and global 3D world guidance to keep both environmental scenes and human characters spatially consistent across the generated video.
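
To make "unprojected point clouds" concrete, here is a minimal pure-Python sketch of lifting a depth map into 3D with a standard pinhole camera model and reprojecting it after the camera moves. It illustrates why a point cloud is a useful geometric prior: once pixels have 3D positions, any new camera pose yields geometrically consistent parallax. The tiny depth map, the intrinsics (fx, fy, cx, cy), and the function names are illustrative assumptions; Uni3C's actual depth estimator and point-cloud renderer are not shown.

```python
def unproject(depth, fx, fy, cx, cy):
    """Lift each pixel (u, v) with depth z to a camera-space 3D point (pinhole model)."""
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points

def project(point, fx, fy, cx, cy):
    """Project a camera-space 3D point back to pixel coordinates."""
    x, y, z = point
    return (fx * x / z + cx, fy * y / z + cy)

def move_camera(points, tx, ty, tz):
    """Re-express points relative to a camera translated by (tx, ty, tz), with no rotation."""
    return [(x - tx, y - ty, z - tz) for x, y, z in points]

# Hypothetical 2x2 depth map (metres) and intrinsics, for illustration only.
depth = [[2.0, 2.0],
         [4.0, 4.0]]
fx = fy = 100.0
cx = cy = 0.5

cloud = unproject(depth, fx, fy, cx, cy)
moved = move_camera(cloud, 0.0, 0.0, 1.0)  # dolly the camera 1 m forward
for before, after in zip(cloud, moved):
    # Nearer points (depth 2) shift more in the image than farther ones (depth 4): parallax.
    print(project(before, fx, fy, cx, cy), "->", project(after, fx, fy, cx, cy))
```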

2. Benefits of Uni3C

- Unified 3D-Enhanced Framework: Uni3C simultaneously processes camera trajectories and human motion in a coherent 3D world space.

- PCDController Architecture: Uni3C's lightweight 0.95B-parameter controller uses unprojected point clouds from monocular depth estimation and works without modifying the 14B-parameter base model.

- SMPL-X Integration: Uni3C represents human characters with SMPL-X, a parametric 3D model of the body, hands, and face.

- Geometric Prior Utilization: Uni3C's point-cloud-based 3D geometric understanding provides robust camera control from a single image.

- Global 3D World Guidance: Uni3C uses rigid transformations to align the environment point clouds with the SMPL-X characters in a shared 3D world space, keeping scenes and characters spatially consistent in the generated video.
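
The rigid alignment in the last point can be pictured as a rotation R plus a translation t that places body points in the scene's world frame. The sketch below applies such a transform to a few points and checks that pairwise distances are preserved, which is exactly what makes it "rigid". The vertices, the 90-degree rotation, and the 2 m offset are hypothetical stand-ins, not values from Uni3C or the real SMPL-X mesh.

```python
import math

def rotation_y(angle_rad):
    """3x3 rotation matrix about the vertical (y) axis."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, 0.0, s],
            [0.0, 1.0, 0.0],
            [-s, 0.0, c]]

def apply_rigid(points, R, t):
    """Map each point p to R @ p + t: rotate, then translate."""
    out = []
    for p in points:
        rotated = [sum(R[i][j] * p[j] for j in range(3)) for i in range(3)]
        out.append(tuple(rotated[i] + t[i] for i in range(3)))
    return out

# Hypothetical stand-ins for a few SMPL-X vertices in the body's local frame (metres).
body = [(0.0, 0.0, 0.0), (0.0, 1.7, 0.0), (0.3, 1.4, 0.1)]

# Hypothetical alignment: turn the body 90 degrees and place it 2 m along the camera's z axis.
R = rotation_y(math.pi / 2)
t = (0.0, 0.0, 2.0)
world = apply_rigid(body, R, t)

# Rigid transforms preserve pairwise distances, so the body keeps its shape inside the scene.
for i in range(len(body)):
    for j in range(i + 1, len(body)):
        print(i, j, round(math.dist(body[i], body[j]), 6), round(math.dist(world[i], world[j]), 6))
```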

3. How to Use the Uni3C Workflow

Uni3C works through video reference extraction: it analyzes a reference video to capture both its camera movement and its human motion, then applies those patterns when generating a new video from your input image. This gives precise control without adjusting parameters by hand.

3.1 Method 1: Video-Referenced Camera Control

Best for:

Extracting camera movements from reference videos and applying them to new scenes using Uni3C.

Setup Process:

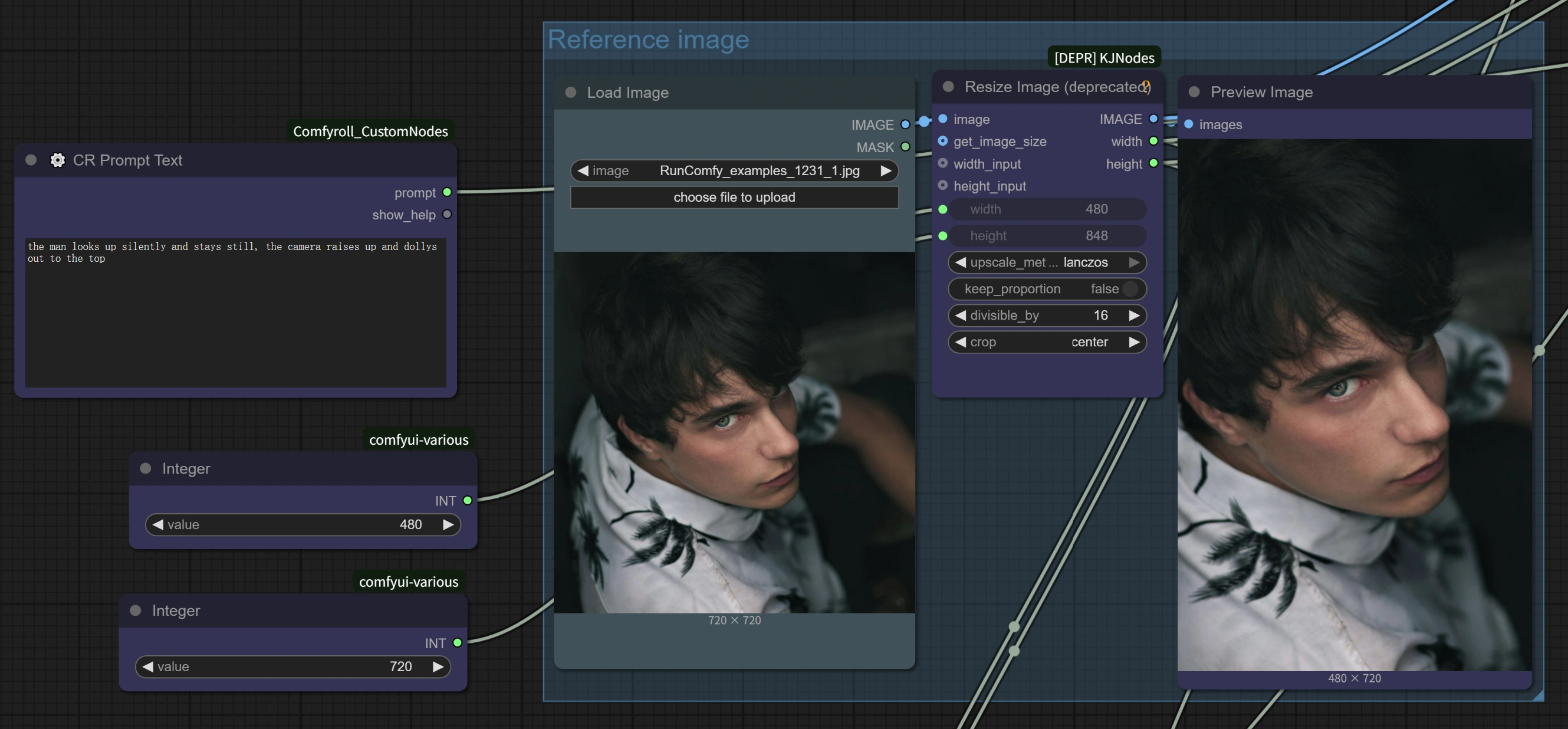

- Load Reference Video: Upload any video with interesting camera movement in the Load Video (Upload) node.

  - Good Examples: Videos with the camera zooming in on a subject, walking footage with natural head movement, cinematic pans, and orbital shots.

  - Key Point: Any video with clear camera motion works, from phone recordings to professional cinematography.

- Load Target Image: Upload your base image in the Load Image node. Any style works: realistic, anime, artwork, or AI-generated.

- Write Your Prompt: Describe the scene you want in the text prompt area.

- Configure Settings: The workflow ships with optimized sampler parameters that give roughly a 4x speed improvement (see 3.3 Optimized Performance Settings).

- Generate: Run the workflow to transfer the reference video's camera movement to your scene with Uni3C.
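
If you prefer to run these steps from a script instead of the UI, one option is to export the workflow in ComfyUI's API format, patch the inputs, and queue it through the ComfyUI server's `/prompt` endpoint. The sketch below only builds the request and does not send it. The node class names (`VHS_LoadVideo`, `LoadImage`, `WanVideoTextEncode`, `WanVideoSampler`), their input keys, the file names, and the default server address `http://127.0.0.1:8188` are assumptions; check them against your own exported JSON.

```python
import json
import urllib.request

def build_overrides(video_file, image_file, prompt):
    """Assumed class_type -> {input_name: value} overrides; verify against your API-format export."""
    return {
        "VHS_LoadVideo": {"video": video_file},
        "LoadImage": {"image": image_file},
        "WanVideoTextEncode": {"positive_prompt": prompt},
        "WanVideoSampler": {"steps": 10, "cfg": 1.0, "shift": 5.0},
    }

def patch_workflow(workflow, overrides):
    """Return a copy of an API-format workflow with matching node inputs overwritten."""
    patched = json.loads(json.dumps(workflow))  # deep copy via a JSON round trip
    for node in patched.values():
        for key, value in overrides.get(node.get("class_type"), {}).items():
            node.setdefault("inputs", {})[key] = value
    return patched

def make_request(workflow, server="http://127.0.0.1:8188"):
    """Build (but do not send) a POST request that queues the workflow on a ComfyUI server."""
    body = json.dumps({"prompt": workflow}).encode("utf-8")
    return urllib.request.Request(
        server + "/prompt",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )

# Usage, assuming workflow_api.json was exported from the Uni3C workflow:
# with open("workflow_api.json") as f:
#     wf = json.load(f)
# req = make_request(patch_workflow(wf, build_overrides("ref.mp4", "target.png", "a woman walking")))
# urllib.request.urlopen(req)
```

Patching by `class_type` rather than by node ID keeps the script working if node IDs change between exports, at the cost of overwriting every node of that type.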

Advanced Tips:

- Motion Direction Matching: Results are best when the reference video's movement direction matches the composition of your target scene.

- Prompt Coordination: Write prompts that complement the style of camera movement for more coherent results.

- Reference Quality: Choose reference videos with stable, clear camera movements for the best results.

3.2 Method 2: Human Motion Transfer

Best for:

Transferring human motions from reference videos to different characters using Uni3C.

Setup Process:

- Reference Video with Human Motion: Upload a video containing the human movements you want to transfer.

- Target Character Image: Load an image of the character you want to animate.

- Motion Control via Prompts:

  - Preserve Original Motion: Use general descriptions such as "a woman walking" to keep the reference motion.

  - Modify Actions: Be specific to change movements. For example, "a woman combing her hair" alters the hand gestures while preserving the overall motion flow.

Key Advantages:

- No Skeleton Required: Unlike traditional motion-capture pipelines, you do not need to extract a skeleton or rig the character; Uni3C takes the motion directly from the reference video.

- Detail Preservation: Uni3C maintains accessories, hairstyles, and clothing details during motion transfer.

- Simultaneous Control: Both camera movement and human motion are transferred together from the same reference video using Uni3C.

Performance Optimization Architecture: Part of Uni3C's efficiency is architectural. PCDController uses a reduced hidden size (1024 instead of 5120), zero-initialized linear projection layers, and injects camera-control features only into the first 20 layers of the base model. On top of that, the workflow's sampler settings reduce the number of flow-matching sampling steps (10 instead of 20+) and adjust the CFG guidance scale, which together give up to a 4x speed improvement while maintaining generation quality.
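
Two of these design choices are easy to check with a little arithmetic. A square linear layer's weight count grows with the square of its width, so shrinking the hidden size from 5120 to 1024 cuts each such layer by about 25x. A zero-initialized projection adds exactly nothing at initialization, so the base model's output is unchanged until the controller learns something useful. The pure-Python sketch below shows both; the toy layer sizes and the `inject` helper are simplified assumptions, not Uni3C's actual module code.

```python
# Hidden sizes quoted above: 5120 (base) vs. 1024 (PCDController).
BASE_HIDDEN = 5120
CTRL_HIDDEN = 1024

def square_linear_params(width):
    """Weights plus biases of a width x width linear layer."""
    return width * width + width

ratio = square_linear_params(BASE_HIDDEN) / square_linear_params(CTRL_HIDDEN)
print(f"per-layer parameter ratio: {ratio:.1f}x")

def zero_init_projection(in_dim, out_dim):
    """Linear projection (weights, bias) whose entries all start at zero."""
    return [[0.0] * in_dim for _ in range(out_dim)], [0.0] * out_dim

def linear(weights, bias, x):
    """Compute weights @ x + bias with plain lists."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]

def inject(hidden, control, weights, bias, layer_index, max_layer=20):
    """Add projected control features to a base-model hidden state, only in layers 0..max_layer-1."""
    if layer_index >= max_layer:
        return hidden
    delta = linear(weights, bias, control)
    return [h + d for h, d in zip(hidden, delta)]

# Toy sizes (control width 4 -> base width 8) stand in for 1024 -> 5120.
W, b = zero_init_projection(4, 8)
hidden = [0.5] * 8
control = [1.0, -2.0, 3.0, 0.25]
print(inject(hidden, control, W, b, layer_index=0) == hidden)   # zero init: base output unchanged
print(inject(hidden, control, W, b, layer_index=30) == hidden)  # past the first 20 layers: no injection
```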

3.3 Optimized Performance Settings

4x Speed Boost Configuration: Building on Uni3C's built-in optimizations, the following settings run up to about 4x faster than the original settings:

WanVideo Sampler Node Settings:

- Steps: 10 (reduced from the default of 20+)

- CFG: 1.0-1.5 (balances speed and quality)

- Shift: 5.0-7.0 (the author recommends 7 for best results and 5 for the fastest preset)

- Scheduler: UniPC (the scheduler these settings are tuned for)
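
Shift reshapes the noise schedule rather than the amount of work per step. Wan2.1's flow-matching schedulers apply the time shift sigma' = shift * sigma / (1 + (shift - 1) * sigma); assuming the WanVideo Sampler uses this standard formula, the sketch below prints the 10-step schedule for a few shift values. A higher shift pushes every intermediate noise level upward, so more of the step budget is spent at high noise, where coarse structure is generally settled.

```python
def shifted_sigmas(steps, shift):
    """Evenly spaced noise levels from 1 toward 0, warped by the flow-matching time shift."""
    base = [1.0 - i / steps for i in range(steps)]  # 1.0, 0.9, ..., 0.1 for 10 steps
    return [shift * s / (1.0 + (shift - 1.0) * s) for s in base]

for shift in (1.0, 5.0, 7.0):
    sigmas = shifted_sigmas(10, shift)
    high_noise = sum(s > 0.5 for s in sigmas)
    print(f"shift={shift}: {high_noise}/10 steps above sigma 0.5 ->", [round(s, 3) for s in sigmas])
```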

Key Performance Features:

- Borrowed Optimizations: The speed settings reuse optimizations originally designed for text-to-video generation, which also prove effective for image-to-video generation.

- Smart Parameter Reduction: Because image-to-video generation starts from existing visual content, it needs fewer denoising steps than text-to-video generation.

- Optimized Processing: With these settings, a 70-frame video completes in about 4-5 minutes, compared with about 27 minutes under the original settings.

Quality vs Speed Options:

- Maximum Speed: Steps=10, CFG=1.0, Shift=5 → ~4 minutes for 70 frames with Uni3C

- Balanced: Steps=10, CFG=1.5, Shift=7 → ~5 minutes for 70 frames with Uni3C

- Higher Quality: Steps=15, CFG=2.0, Shift=7 → ~8-10 minutes for 70 frames with Uni3C
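
One rough way to see where the speedup comes from is to count transformer forward passes. With classifier-free guidance a sampler normally runs a conditional and an unconditional pass per step and combines them as uncond + cfg * (cond - uncond); at CFG = 1.0 that expression reduces to the conditional prediction alone, so the unconditional pass can be skipped. Assuming the WanVideo Sampler does this, the sketch below counts passes per preset. The 20-step, CFG 5.0 baseline is a hypothetical stand-in for the "original settings", and the count ignores VAE encoding, decoding, and other fixed overhead, so it will not match the wall-clock times above exactly.

```python
def cfg_combine(uncond, cond, cfg):
    """Classifier-free guidance: move from the unconditional toward the conditional prediction."""
    return [u + cfg * (c - u) for u, c in zip(uncond, cond)]

def forward_passes(steps, cfg):
    """Transformer passes per video, assuming the unconditional pass is skipped when cfg == 1.0."""
    passes_per_step = 1 if cfg == 1.0 else 2
    return steps * passes_per_step

presets = {
    "Maximum Speed": (10, 1.0),
    "Balanced": (10, 1.5),
    "Higher Quality": (15, 2.0),
    "Hypothetical baseline": (20, 5.0),  # assumed original settings: 20 steps, CFG above 1
}

for name, (steps, cfg) in presets.items():
    print(f"{name}: {forward_passes(steps, cfg)} passes")

# At cfg == 1.0 the guided prediction equals the conditional one, so the extra pass adds nothing.
print(cfg_combine([0.2, -0.4], [1.0, 0.3], 1.0))
```

Under these assumptions, the Maximum Speed preset needs a quarter of the passes of the hypothetical baseline, which is consistent with the quoted 4x figure; real timings also depend on hardware, resolution, and frame count.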

3.4 Workflow Components Understanding

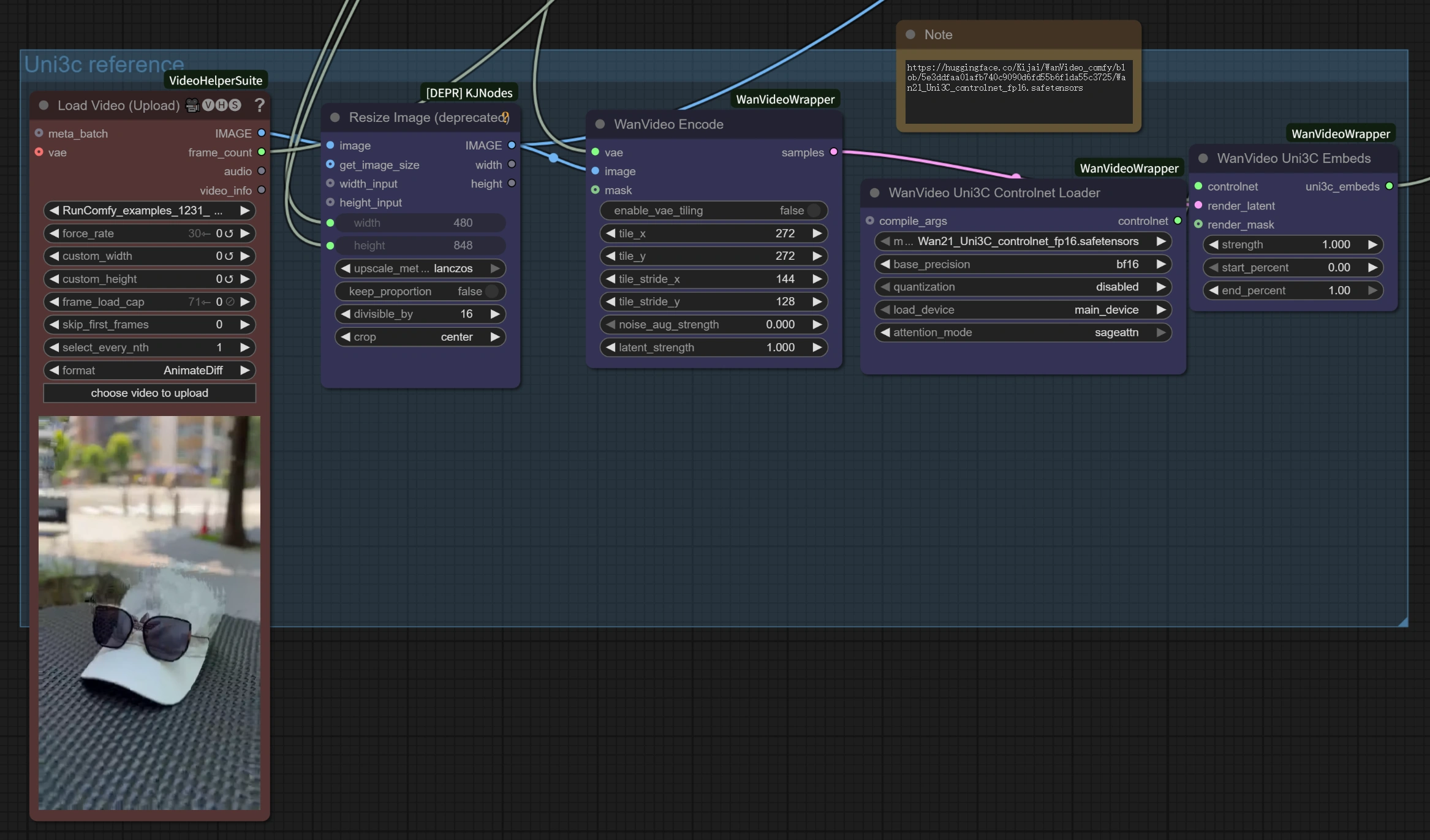

Reference Video Processing Section:

- Load Video (Upload): Accepts MP4, AVI, and other standard video formats as the motion reference.

- WanVideo Encode: Processes the reference video to extract the camera trajectory and motion patterns that guide Uni3C.

- Uni3C ControlNet Loader: Loads the specialized Uni3C control model used for motion understanding.

Image-to-Video Generation Section:

- Load Image: The target image that Uni3C animates with the reference motion.

- WanVideo ImageToVideo Encode: Converts your static image into the format used for video generation.

- WanVideo Sampler: The core generation engine, configured with the speed-optimized settings described in 3.3.

Output Processing:

- WanVideo Decode: Decodes the generated latent video back into viewable frames.

- Video Combine: Assembles the decoded frames into the final video file with the proper frame rate and encoding.
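
The three sections above form a small dependency graph in which each node runs once its inputs are ready. The sketch below encodes a simplified version of that wiring with Python's standard `graphlib` module and derives one valid execution order. The edges are an assumption based on the component descriptions (the real workflow may include extra helper nodes such as model loaders and text encoders), and the node names serve only as labels.

```python
from graphlib import TopologicalSorter

# node -> set of nodes whose outputs it consumes (simplified, assumed wiring)
dependencies = {
    "WanVideo Encode": {"Load Video (Upload)"},
    "WanVideo ImageToVideo Encode": {"Load Image"},
    "WanVideo Sampler": {"WanVideo Encode", "Uni3C ControlNet Loader", "WanVideo ImageToVideo Encode"},
    "WanVideo Decode": {"WanVideo Sampler"},
    "Video Combine": {"WanVideo Decode"},
}

order = list(TopologicalSorter(dependencies).static_order())
for step, node in enumerate(order, start=1):
    print(step, node)
```

Several orders are valid (the two encode branches are independent), which is why ComfyUI can prepare the reference-video and target-image inputs in either order before sampling.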

4. Advanced Tips and Best Practices

Choosing Reference Materials for Uni3C

- For Motion Transfer: Select videos with clear, visible movements in which the person stays mostly in frame.

- For Camera Control: Any video with an interesting perspective or the camera movement you want will work.

- Best Results: Results are best when the reference motion direction matches your intended output direction.

Prompt Engineering Best Practices for Uni3C

- "Don't Disturb" Principle: For pure motion transfer without changing the character, use simple, general prompts.

- Specific Action Changes: Be detailed when you want to change what the character is doing.

- Character Consistency: Focus prompts on the character's appearance so it stays consistent throughout the video.

Quality Optimization for Uni3C

- Motion Consistency: Avoid reference videos with sudden changes in motion; smoother references give smoother results.

- Frame Stability: Make sure reference materials have consistent lighting and framing.

5. Acknowledgments

This workflow is powered by Uni3C, developed by DAMO Academy (Alibaba Group), Fudan University, and Hupan Lab. The ComfyUI integration is based on the excellent work by kijai (ComfyUI-WanVideoWrapper), with additional optimizations and workflow design to make this powerful Uni3C technology accessible to creators worldwide.