RunComfy's Cloud is not limited to FLUX.1-dev ControlNet for Reallusion AI Render. You can swap checkpoints, ControlNets, LoRAs, VAEs, and related nodes to run additional Reallusion workflows and models listed below.

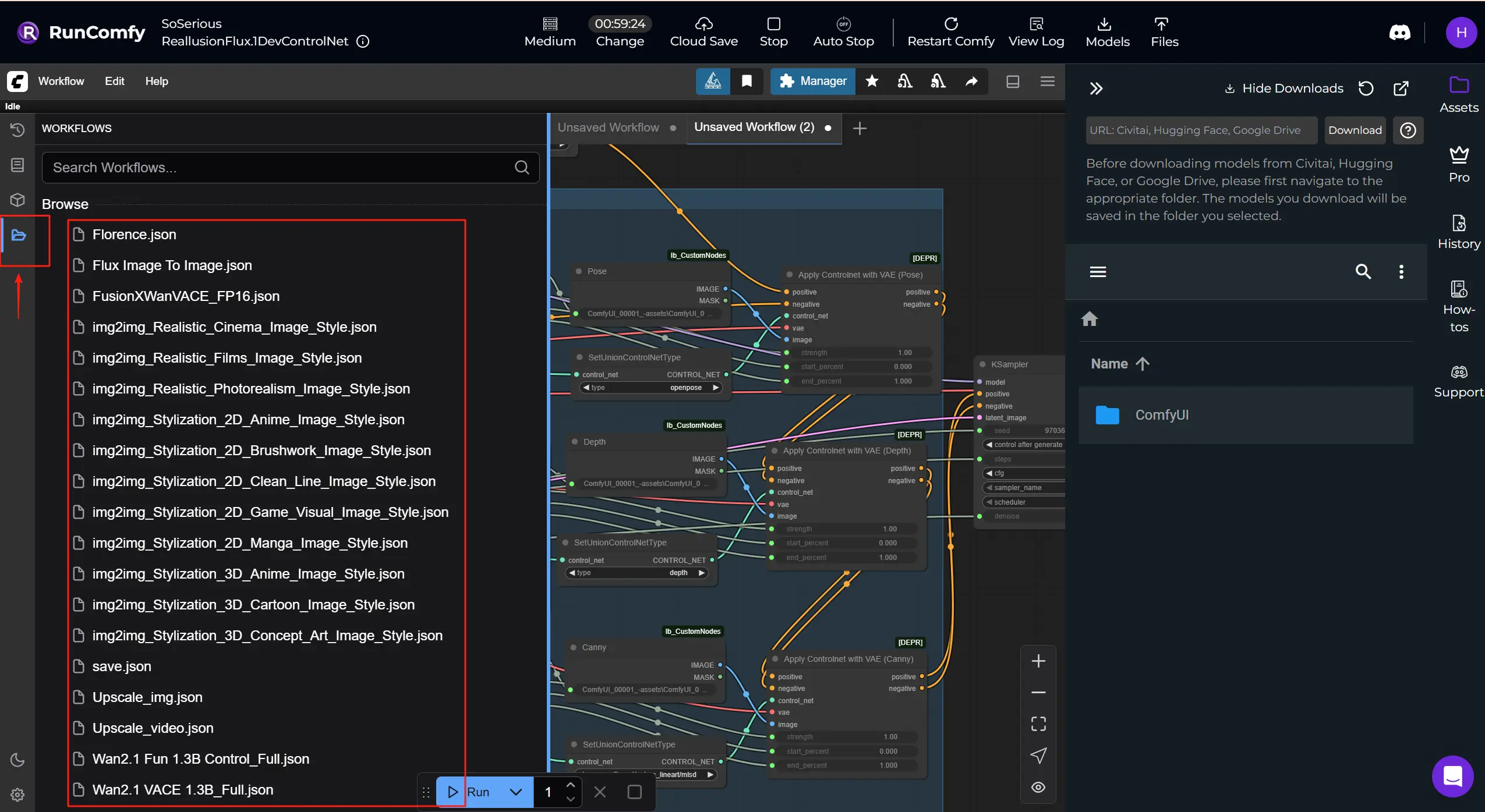

Get More Reallusion ComfyUI Workflows: Download additional workflow templates and run them in RunComfy. Click the "Workflows" button to browse them.

Reallusion is a developer of real-time 2D/3D character creation and animation software, best known for iClone and Character Creator, used across film/TV, games, archviz, digital twins, and AI simulation.

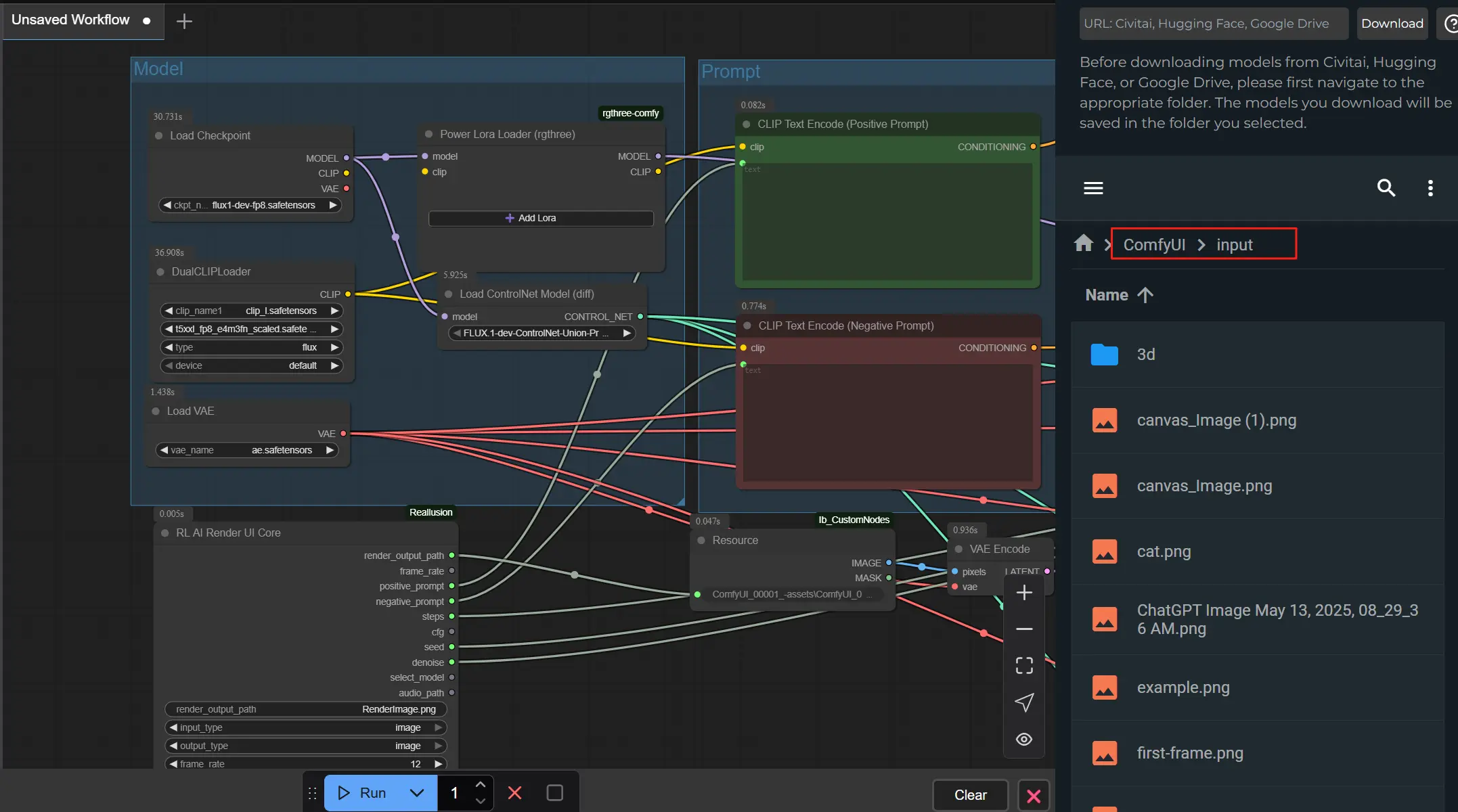

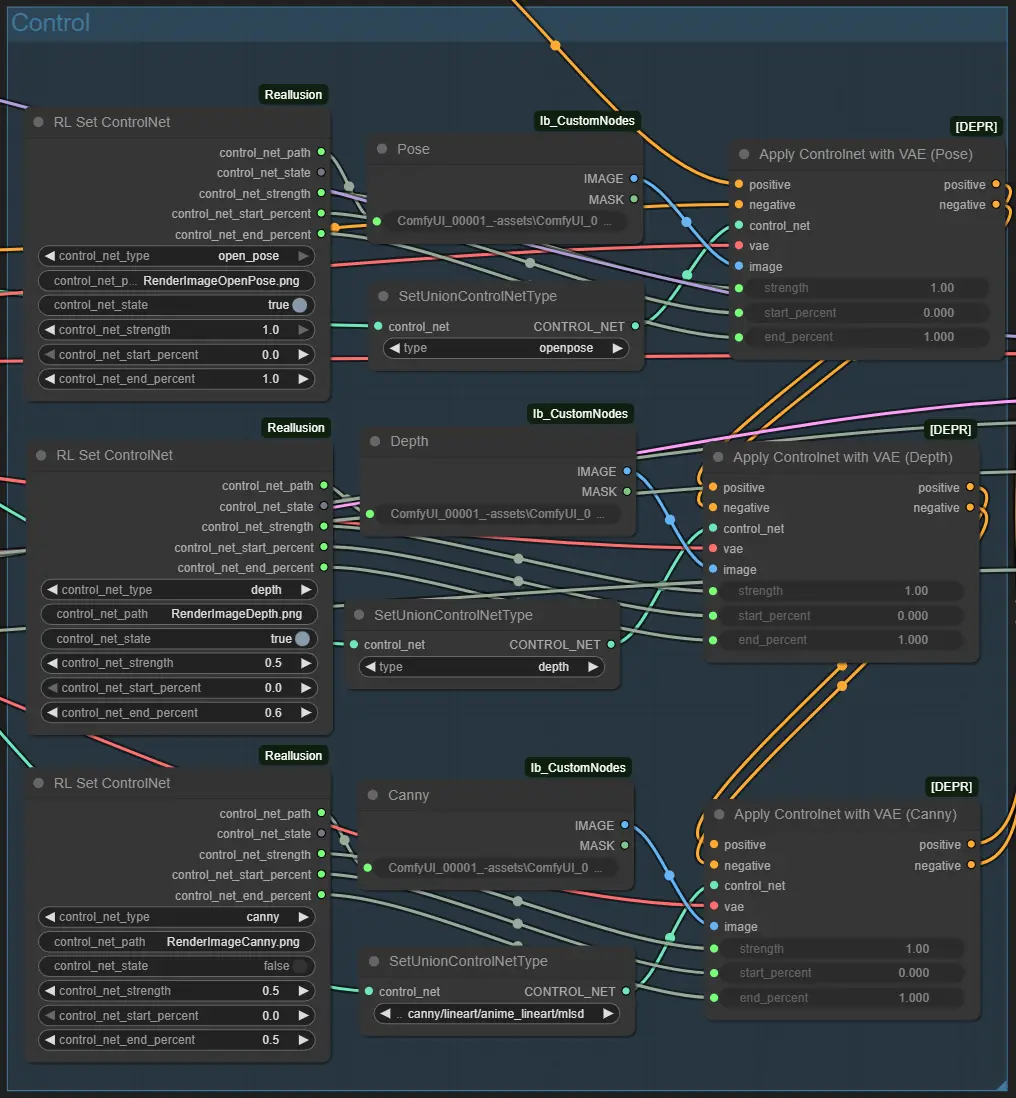

Reallusion AI Render is a seamless bridge between 3D animation software and AI-powered content generation workflows. Think of it as an AI render assistant that listens to layout, pose, camera, or lighting data directly from iClone or Character Creator, then uses that context to automatically craft richly detailed images or videos inside ComfyUI. This integration brings together Reallusion’s real-time 3D creation tools with ComfyUI’s flexible, node-based AI processing architecture, making image- and video-based storytelling both artist-driven and tightly guided by 3D data. Reallusion AI Render supports multimodal inputs like depth maps, normal maps, edge detection (Canny), 3D pose data, and style images via IPAdapter. And thanks to its custom Core, ControlNet, Additional Image, and Upscale nodes, it lets animators and developers render consistent, stylized, high-quality outputs entirely under plugin control, without needing to navigate ComfyUI manually.

By interpreting structured instructions and combining them with internal parameter presets, Reallusion AI Render transforms prompt-based generation into a precise, replicable production process. It's tailor-made for creators in film, games, or commercial storytelling who need consistent characters, fine-grained style control, and frame-accurate AI-assisted sequence rendering.

Direct Plugin Integration: Reallusion AI Render works natively with iClone and Character Creator via a dedicated plugin, enabling real-time feedback and control without leaving your production tools.

3D-Guided ControlNet: Seamlessly map depth, pose, normals, and edge data straight from your 3D scene into ComfyUI with Reallusion AI Render’s ControlNet nodes, achieving cinematic consistency and shot-level control.

Multi-Image Styling Support: The Reallusion AI Render workflow includes Additional Image nodes that support flexible style blending and reference-based direction, making it easy to reuse looks or perform advanced IPAdapter-based rendering.

Smart Upscale Workflow: A dedicated UpscaleData node lets creators define output resolution within the Reallusion AI Render plugin, ensuring final renders match project specifications without guessing.

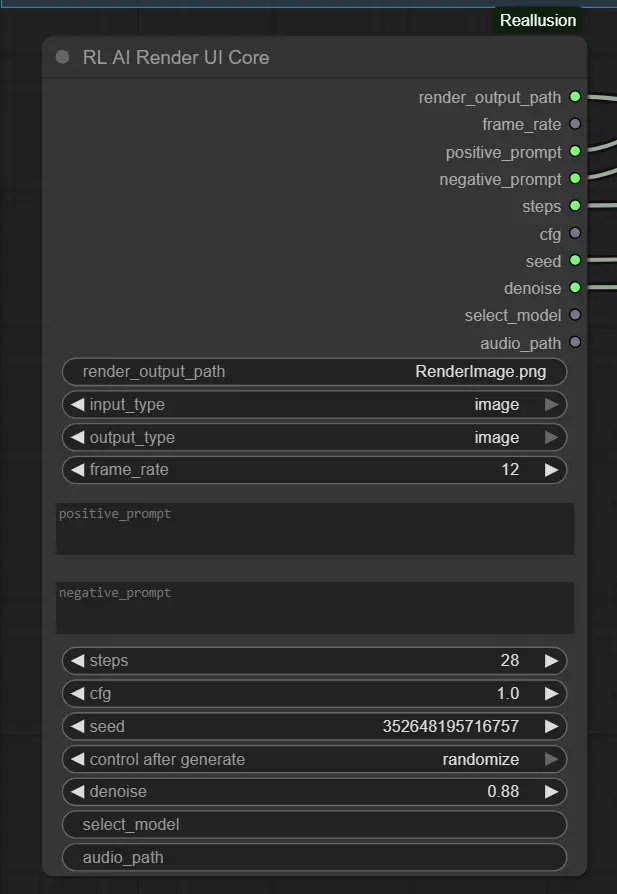

Full Workflow Automation: Unlike generic ComfyUI workflows, Reallusion AI Render is designed for automation. Parameters such as model, sampling steps, CFG, and audio sync are passed programmatically, ready for batch rendering or custom presets.

Production-Ready Character Consistency: Paired with LoRA training and IP creation tools, Reallusion AI Render preserves facial integrity and visual fidelity across video sequences, making it ideal for AI-powered storytelling.

Reallusion AI Render Basic Workflow

1. Get Your Image Ready

Export any image from Reallusion iClone or Character Creator using their AI Render Plugin. Make sure the image is saved using the default plugin path structure. This ensures all guidance/control images (e.g., pose, depth) are automatically generated and linked correctly.

Upload the images to the ComfyUI input folder (ComfyUI -> input). Make sure the file names match the render_output_path specified in the node.

2. Set Prompts and Style

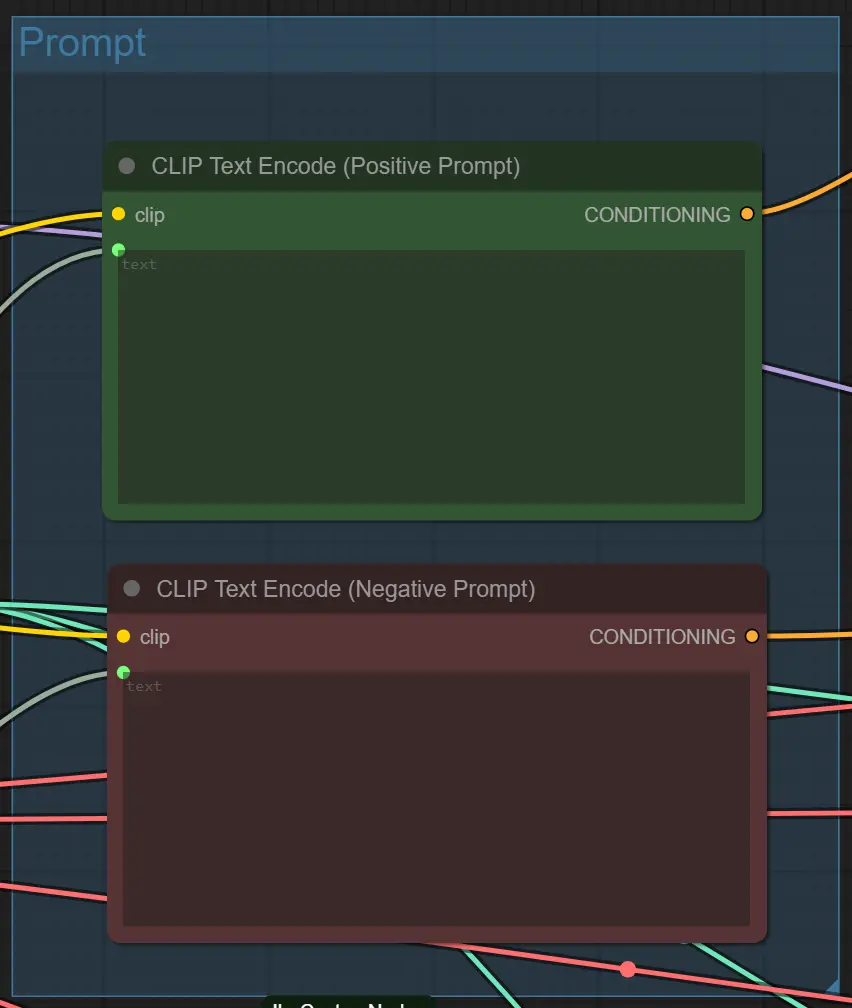

Write a clear positive prompt (what you want) and a negative prompt (what to avoid) inside the iClone or Character Creator AI Render plugin UI. The workflow will use these to guide the output’s look and detail.

3. Connect the Machine and Use AI Render - ⭐ IMPORTANT

To begin, launch the AI Render plugin inside iClone or Character Creator, and connect ComfyUI Cloud to AI Render by copying the ID from the RunComfy URL and pasting it into the plugin. Once connected, the plugin will automatically send your previewed images or videos from the ComfyUI pipeline.

This setup ensures that AI Render communicates seamlessly with your machine, letting you preview, adjust, and refine results in real time. By keeping the connection stable, your pose, depth, and style guidance will stay accurate across every render.

👉 Watch the full step-by-step video guide here: YouTube Tutorial

Nodes Essential Settings

1. Prompt & Quality Settings – RL AI Render UI Core Node

This node collects the main parameters from the AI Render Plugin.

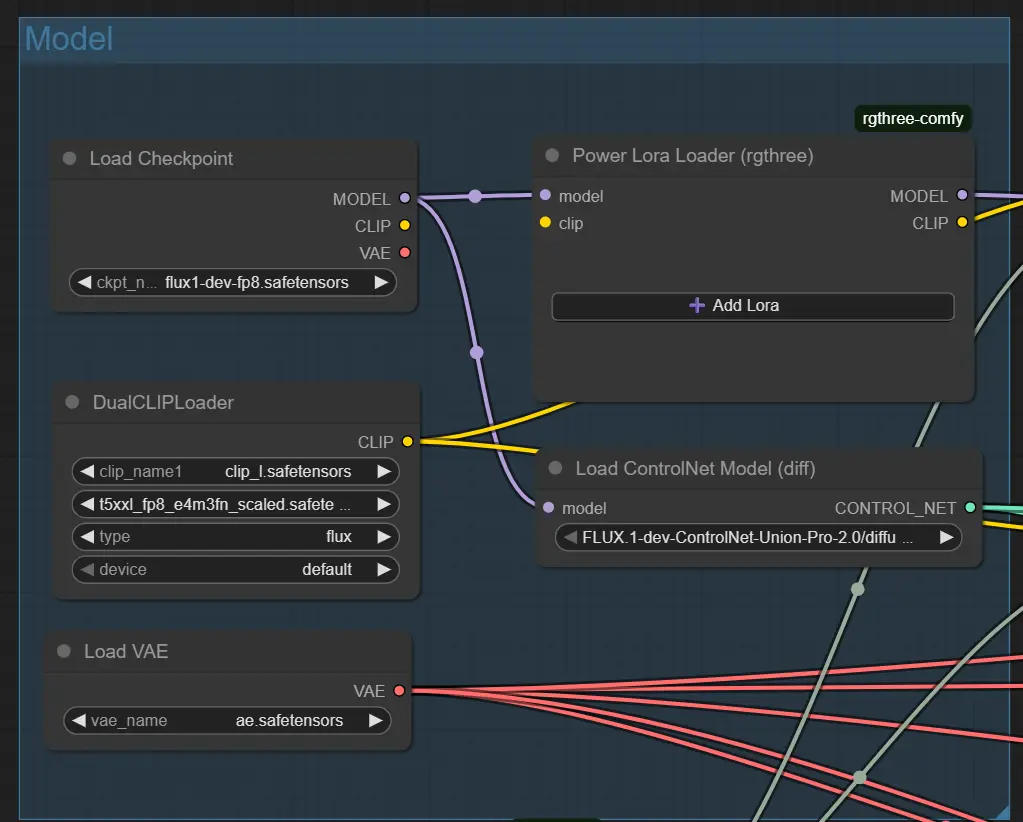

2. Base Model Settings – Load Checkpoint Node

This loads your chosen model (e.g., Flux 1 Dev ControlNet).

ckpt_name: Must match the model expected by your style (default: flux1-dev-fp8.safetensors).

Use models that support IPAdapter or ControlNet for best results.
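Before queuing a render, it can help to confirm that the file named in ckpt_name is actually present. The sketch below is a minimal check, assuming a standard local ComfyUI layout where the Load Checkpoint node reads from models/checkpoints; the `find_checkpoint` helper and the `comfy_root` argument are hypothetical, and a hosted RunComfy machine may organize its model folders differently.

```python
from pathlib import Path


def find_checkpoint(comfy_root, ckpt_name="flux1-dev-fp8.safetensors"):
    """Return the checkpoint path if it exists under models/checkpoints, else None.

    Assumes the default ComfyUI folder layout; adjust the path for other setups.
    """
    candidate = Path(comfy_root) / "models" / "checkpoints" / ckpt_name
    return candidate if candidate.is_file() else None
```

If the function returns None, either the file is missing or the name in ckpt_name does not match the file on disk exactly, including the extension.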

3. Structure Guidance – ControlNet Nodes (Pose, Depth, Canny)

Each ControlNet node loads a specific input file (e.g., a pose image) and is only active if that control is enabled.

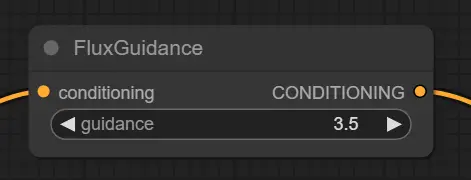

4. Prompt Conditioning – Flux Guidance Node

Adjusts how the prompt shapes the image generation.

5. Text Prompt Encoding – CLIP Text Encode Nodes

Generates vector guidance from your prompts.

Advanced Tips for Nodes

Prompt Clarity

Avoid overly complex or vague prompts. Use direct styles like cinematic lighting, anime, or cyberpunk alley. Be specific for more controlled results.

Balance Denoise and Structure

If ControlNet is enabled (e.g., Depth + Pose), a high denoise like 0.88 may disrupt structure. Try 0.6–0.75 when preserving layout is a priority.
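The denoise tip can be expressed as a small guard. This is only an illustrative sketch: `cap_denoise` is a hypothetical helper, not part of the plugin or of ComfyUI, and it simply caps the value at the upper end of the suggested 0.6–0.75 range whenever ControlNet is active.

```python
def cap_denoise(requested, controlnet_enabled, ceiling=0.75):
    """Cap denoise at `ceiling` when ControlNet is active, so structure is preserved.

    Values already at or below the ceiling are returned unchanged.
    """
    if not 0.0 <= requested <= 1.0:
        raise ValueError("denoise must be between 0 and 1")
    if controlnet_enabled:
        return min(requested, ceiling)
    return requested


print(cap_denoise(0.88, controlnet_enabled=True))   # 0.75
print(cap_denoise(0.88, controlnet_enabled=False))  # 0.88
```

Lower values are left alone on purpose, since the tip only warns about high denoise disrupting structure.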

Match Inputs with ControlNet

Only enable a ControlNet if a matching guidance image exists (e.g., RenderImageDepth.png for Depth). A mismatch can cause failed prompts or empty results.
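The matching rule above can be checked before a render is queued. The following is a minimal sketch, assuming all guidance images sit in one folder. Only RenderImageDepth.png comes from this guide; the pose and Canny file names are hypothetical placeholders, as is the `enabled_controlnets` helper, so substitute the names your plugin export actually produces.

```python
from pathlib import Path

# Guidance file expected for each ControlNet type.
# Only the Depth name appears in this guide; the other two are assumed placeholders.
GUIDANCE_FILES = {
    "depth": "RenderImageDepth.png",
    "pose": "RenderImagePose.png",    # assumed name
    "canny": "RenderImageCanny.png",  # assumed name
}


def enabled_controlnets(input_dir, requested):
    """Return the requested ControlNet types whose guidance image exists in input_dir."""
    folder = Path(input_dir)
    usable = []
    for name in requested:
        filename = GUIDANCE_FILES.get(name)
        if filename is not None and (folder / filename).is_file():
            usable.append(name)
    return usable
```

Any type that was requested but is missing from the returned list should be left disabled in the workflow until its guidance image is exported.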

This workflow integrates the Flux-FP8 model developed by Kijai with performance optimization techniques outlined by the Reallusion Team in their official AI rendering workflow guide. Special recognition to Kijai for their contribution to model development and to Reallusion for sharing valuable insights that enhance AI rendering efficiency in ComfyUI workflows.

Explore technical resources and documentation related to Reallusion AI Render.
