What is MimicMotion

MimicMotion is a controllable video generation framework developed by researchers at Tencent and Shanghai Jiao Tong University. It can generate high-quality videos of arbitrary length following any provided motion guidance. Compared to previous methods, MimicMotion produces videos with richer detail and better temporal smoothness, and it can generate long sequences.

How MimicMotion Works

MimicMotion takes a reference image and pose guidance as inputs. It then generates a video that matches the reference image's appearance while following the provided motion sequence.

A few key innovations enable MimicMotion's strong performance:

- Confidence-aware pose guidance: By incorporating pose confidence information, MimicMotion achieves better temporal smoothness and is more robust to noisy training data. This helps it generalize well.

- Regional loss amplification: Focusing the loss more heavily on high-confidence pose regions, especially the hands, significantly reduces image distortion in the generated videos.

- Progressive latent fusion: To generate smooth, long videos efficiently, MimicMotion generates video segments with overlapping frames and progressively fuses their latent representations. This allows generating videos of arbitrary length with controlled computational cost.
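The progressive latent fusion idea can be illustrated with a small cross-fade over overlapping frames. This is a minimal sketch, not MimicMotion's implementation: the function name `fuse_segments`, the linear blend weights, and the use of flat lists of floats as stand-ins for latent tensors are all assumptions made for illustration.

```python
def fuse_segments(segments, overlap):
    """Cross-fade consecutive segments that share `overlap` frames.

    Each segment is a list of frames; each frame is a flat list of floats
    standing in for a latent tensor (an illustrative simplification).
    """
    if overlap < 1:
        raise ValueError("overlap must be at least 1 frame")
    fused = [list(frame) for frame in segments[0]]
    for seg in segments[1:]:
        head = fused[:-overlap]  # frames that are not shared with the next segment
        blended = []
        for i in range(overlap):
            # Weight rises across the overlap, so the earlier segment dominates
            # at the start of the shared frames and the later one at the end.
            w = (i + 1) / (overlap + 1)
            old, new = fused[len(head) + i], seg[i]
            blended.append([(1 - w) * o + w * n for o, n in zip(old, new)])
        fused = head + blended + [list(f) for f in seg[overlap:]]
    return fused


# Two 6-frame segments sharing 2 frames produce 6 + 6 - 2 = 10 frames.
first = [[0.0, 0.0] for _ in range(6)]
second = [[1.0, 1.0] for _ in range(6)]
video = fuse_segments([first, second], overlap=2)
print(len(video))
```

Because each new segment only adds `len(segment) - overlap` frames, the per-segment cost stays fixed while the total length grows, which is the property the bullet above describes.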

Rather than training from scratch, MimicMotion starts from a video generation model pre-trained on large video datasets and fine-tunes it for the motion-mimicking task. As a result, the training pipeline does not require massive amounts of specialized data.
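To make regional loss amplification more concrete, here is a rough sketch of a loss in which positions covered by confidently detected keypoints count more than the rest. The names `confident_mask` and `amplified_mse`, the threshold of 0.5, and the amplification factor of 10 are hypothetical values chosen for illustration, not figures taken from MimicMotion.

```python
def confident_mask(confidences, threshold=0.5):
    """Mark positions whose pose confidence exceeds a (hypothetical) threshold."""
    return [1 if c > threshold else 0 for c in confidences]


def amplified_mse(pred, target, region_mask, amplification=10.0):
    """Squared error averaged over all positions, with masked positions
    weighted `amplification` times more than unmasked ones."""
    total = 0.0
    for p, t, m in zip(pred, target, region_mask):
        weight = amplification if m else 1.0
        total += weight * (p - t) ** 2
    return total / len(pred)


# Second position has a confident keypoint, so its error counts 10x.
mask = confident_mask([0.2, 0.9])
loss = amplified_mse([1.0, 1.0], [0.0, 0.0], mask)
print(mask, loss)
```

In this toy case the unweighted error would be 1.0, while the amplified version is 5.5, so errors in the confident region dominate the training signal.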

How to Use ComfyUI MimicMotion (ComfyUI-MimicMotionWrapper)

After testing different MimicMotion nodes available in ComfyUI, we recommend using kijai's ComfyUI-MimicMotionWrapper for the best results.

Step 1: Preparing Your Input for MimicMotion

To start animating with ComfyUI MimicMotion, you'll need two key ingredients:

- A reference image: This image supplies the appearance of the subject in your animation. Choose an image that clearly depicts the subject you want to animate.

- Pose images: These are the images that define the motion sequence. Each pose image should show the desired position or pose of your subject at a specific point in the animation. You can create these pose images manually or use pose estimation tools to extract poses from a video.

🌟Ensure that your reference image and pose images have the same resolution and aspect ratio for optimal results.🌟
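A quick pre-flight check along these lines can catch size mismatches before you queue a workflow. This is a small sketch using plain `(width, height)` tuples; the helper names and the example resolutions are illustrative assumptions, and in practice you would read the sizes from your image files.

```python
def same_aspect_ratio(size_a, size_b):
    """Compare aspect ratios by cross-multiplying to avoid float rounding."""
    return size_a[0] * size_b[1] == size_b[0] * size_a[1]


def mismatched_frames(ref_size, pose_sizes):
    """Return indices of pose frames whose (width, height) differs from the reference."""
    return [i for i, size in enumerate(pose_sizes) if tuple(size) != tuple(ref_size)]


ref = (576, 1024)
poses = [(576, 1024), (720, 1280), (640, 640)]

print(mismatched_frames(ref, poses))
print([same_aspect_ratio(ref, p) for p in poses])
```

A frame that differs in resolution but shares the aspect ratio (the second one here) can simply be resized, while one with a different aspect ratio (the third) needs cropping or padding first.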

Step 2: Loading the MimicMotion Model

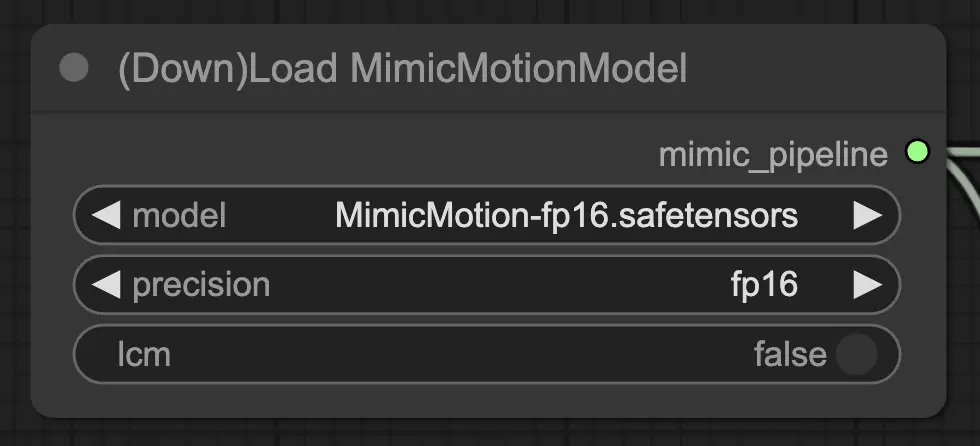

ComfyUI MimicMotion requires the MimicMotion model to function properly. In RunComfy, the model is already preloaded for your convenience. To configure the "DownloadAndLoadMimicMotionModel" node, follow these steps:

- Set the "model" parameter to "MimicMotion-fp16.safetensors" (or the appropriate model file name, if different).

- Select the desired precision (fp32, fp16, or bf16) based on your GPU capabilities. This choice can impact performance and compatibility.

- Leave the "lcm" parameter set to False, unless you specifically want to use the LCM (Latent Consistency Model) variant of the model.

Once you have configured the node settings, connect the output of the "DownloadAndLoadMimicMotionModel" node to the input of the next node in your workflow so that the subsequent steps use the loaded model.
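If you drive ComfyUI through its API-format workflow JSON rather than the graph editor, the loader settings above might look roughly like the following. This is a sketch under assumptions: the input key `precision` and the node id `"1"` are guesses for illustration, so check the exported workflow from your own installation for the exact names.

```python
import json

# One node in ComfyUI's API-style workflow format: an id mapped to a
# class_type plus its inputs. The "precision" key name is an assumption.
loader_node = {
    "class_type": "DownloadAndLoadMimicMotionModel",
    "inputs": {
        "model": "MimicMotion-fp16.safetensors",
        "precision": "fp16",
        "lcm": False,
    },
}

workflow = {"1": loader_node}
print(json.dumps(workflow, indent=2))
```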

Step 3: Configuring the MimicMotion Sampler

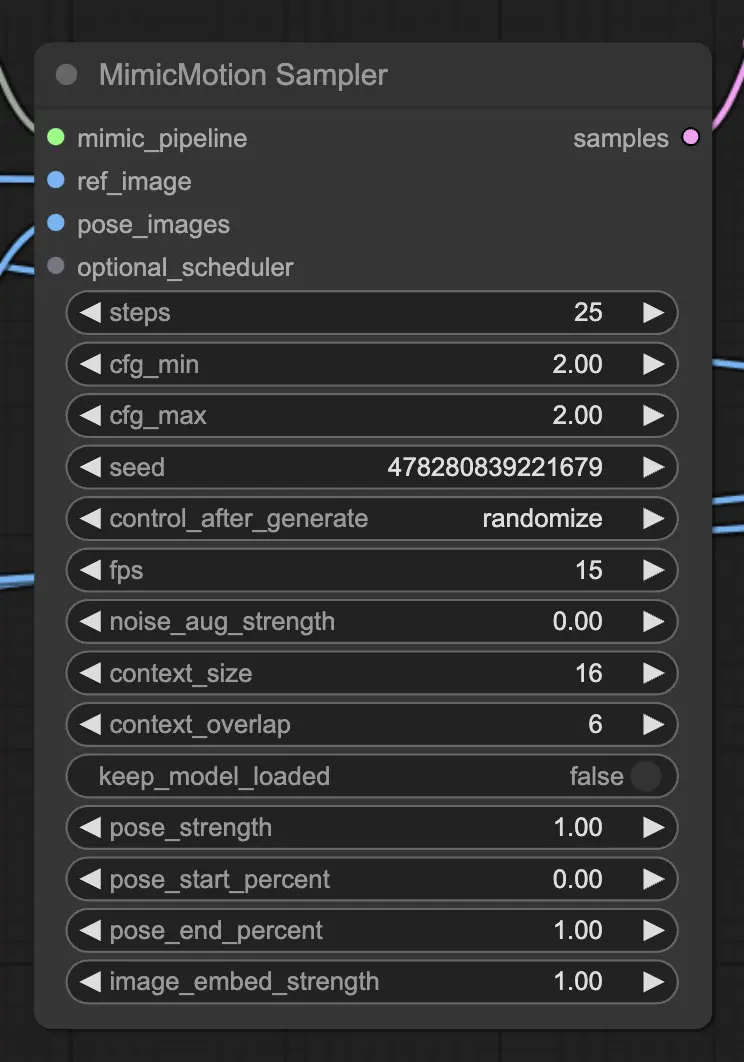

The "MimicMotionSampler" node is responsible for generating the animated frames based on your input. Here's how to set it up:

- Add the "MimicMotionSampler" node and connect it to the output of the "DownloadAndLoadMimicMotionModel" node.

- Set the "ref_image" parameter to your reference image and the "pose_images" parameter to your sequence of pose images.

- Adjust the sampling settings according to your preferences:

  - "steps" determines the number of diffusion steps (more steps usually give cleaner, more detailed results but take longer to process).

  - "cfg_min" and "cfg_max" control the strength of the conditional guidance (higher values adhere more closely to the pose images).

  - "seed" sets the random seed for reproducibility.

  - "fps" specifies the frames per second of the generated animation.

- Fine-tune additional parameters like "noise_aug_strength", "context_size", and "context_overlap" to experiment with different styles and temporal coherence.
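Two of these settings are easier to reason about with numbers. The sketch below assumes that a min/max guidance pair is interpolated linearly across frames, as Stable Video Diffusion pipelines commonly do, and that "context_size" and "context_overlap" describe a simple sliding window over the frame sequence. Both readings, and the helper names, are assumptions; the wrapper's actual scheduling may differ.

```python
def guidance_per_frame(cfg_min, cfg_max, num_frames):
    """Linearly ramp the guidance scale from cfg_min to cfg_max (assumed behavior)."""
    if num_frames == 1:
        return [cfg_min]
    step = (cfg_max - cfg_min) / (num_frames - 1)
    return [cfg_min + i * step for i in range(num_frames)]


def context_windows(num_frames, context_size, context_overlap):
    """Split frames into (start, end) windows that share `context_overlap` frames."""
    if num_frames < 1:
        raise ValueError("num_frames must be positive")
    if not 0 <= context_overlap < context_size:
        raise ValueError("context_overlap must be smaller than context_size")
    stride = context_size - context_overlap
    windows, start = [], 0
    while True:
        end = min(start + context_size, num_frames)
        windows.append((start, end))
        if end == num_frames:
            break
        start += stride
    return windows


print(guidance_per_frame(2.0, 3.0, 5))
print(context_windows(40, context_size=16, context_overlap=6))
```

Under these assumptions, a larger overlap means more windows for the same number of frames, trading extra computation for smoother transitions between windows.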

Step 4: Decoding the Latent Samples

The "MimicMotionSampler" node outputs latent space representations of the animated frames. To convert these latents into actual images, you need to use the "MimicMotionDecode" node:

- Add the "MimicMotionDecode" node and connect it to the output of the "MimicMotionSampler" node.

- Set the "decode_chunk_size" parameter to control the number of frames decoded simultaneously (higher values may consume more GPU memory).

The output of the "MimicMotionDecode" node will be the final animated frames in image format.
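The effect of "decode_chunk_size" can be pictured as a loop that decodes a few latent frames at a time instead of all at once. The sketch below uses a stand-in decoder (`fake_decode`) and a hypothetical helper `decode_in_chunks`; it only illustrates the chunking pattern, not the node's actual code.

```python
def decode_in_chunks(latents, decode_fn, decode_chunk_size):
    """Decode latents in slices so only one chunk is processed at a time."""
    frames = []
    for start in range(0, len(latents), decode_chunk_size):
        chunk = latents[start:start + decode_chunk_size]
        frames.extend(decode_fn(chunk))
    return frames


chunk_sizes = []


def fake_decode(chunk):
    # Stand-in for a real VAE decoder: records the chunk size and
    # returns one placeholder "image" per latent frame.
    chunk_sizes.append(len(chunk))
    return [f"frame({x})" for x in chunk]


frames = decode_in_chunks(list(range(10)), fake_decode, decode_chunk_size=4)
print(len(frames), chunk_sizes)
```

With 10 latent frames and a chunk size of 4, the decoder is called three times (4, 4, and 2 frames), so peak memory scales with the chunk size rather than the full video length.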

Step 5: Visualizing Poses with MimicMotionGetPoses

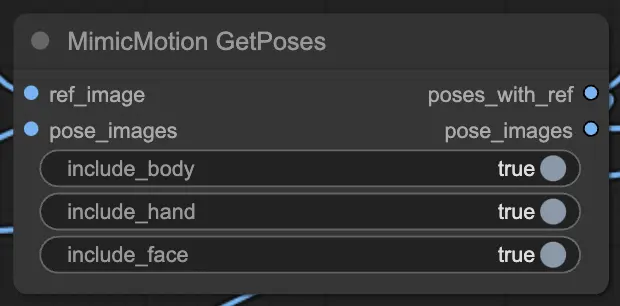

If you want to visualize the extracted poses alongside your reference image, you can use the "MimicMotionGetPoses" node:

- Connect the "ref_image" and "pose_images" to the "MimicMotionGetPoses" node.

- Set the "include_body", "include_hand", and "include_face" parameters to control which pose keypoints are displayed.

The output will include the reference image with the extracted pose and the individual pose images.

Tips and Best Practices

Here are some tips to help you get the most out of ComfyUI MimicMotion:

- Experiment with different reference images and pose sequences to create a variety of animations.

- Adjust the sampling settings to balance quality and processing time based on your needs.

- Use high-quality, consistent pose images for the best results. Avoid drastic changes in perspective or lighting between poses.

- Monitor your GPU memory usage, especially when working with high-resolution images or long animations.

- Take advantage of the "DiffusersScheduler" node to customize the noise scheduling for unique effects.

ComfyUI MimicMotion makes pose-driven animation approachable: once you understand the workflow and its main parameters, you can animate a subject from a single reference image and a pose sequence. Experiment with the settings, iterate on your inputs, and have fun bringing your creative ideas to life.