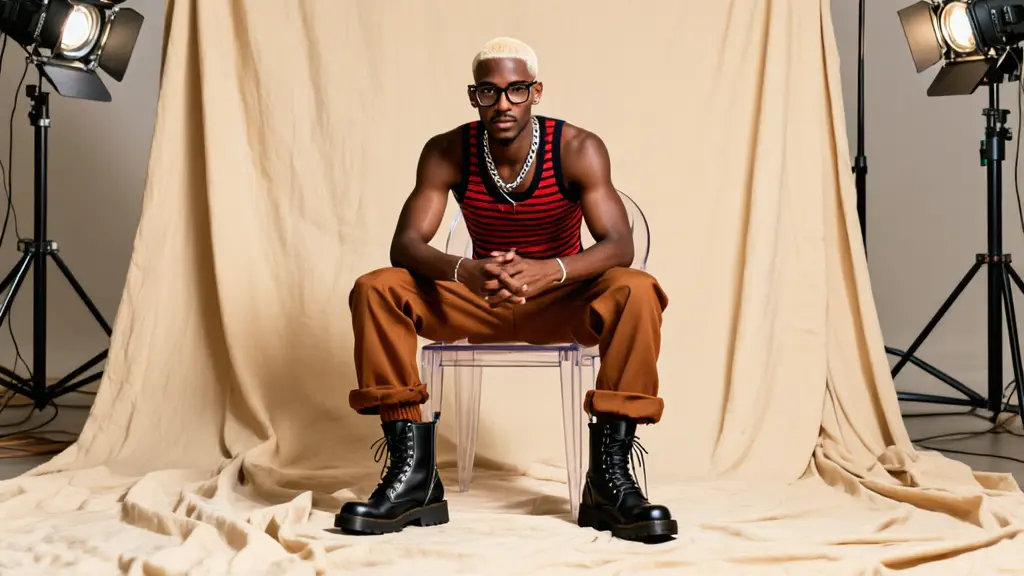

Generate studio-grade visuals with 4K clarity, creative control, and smart adaptive lighting

Generate photorealistic images from text with Google Imagen 4 Ultra.

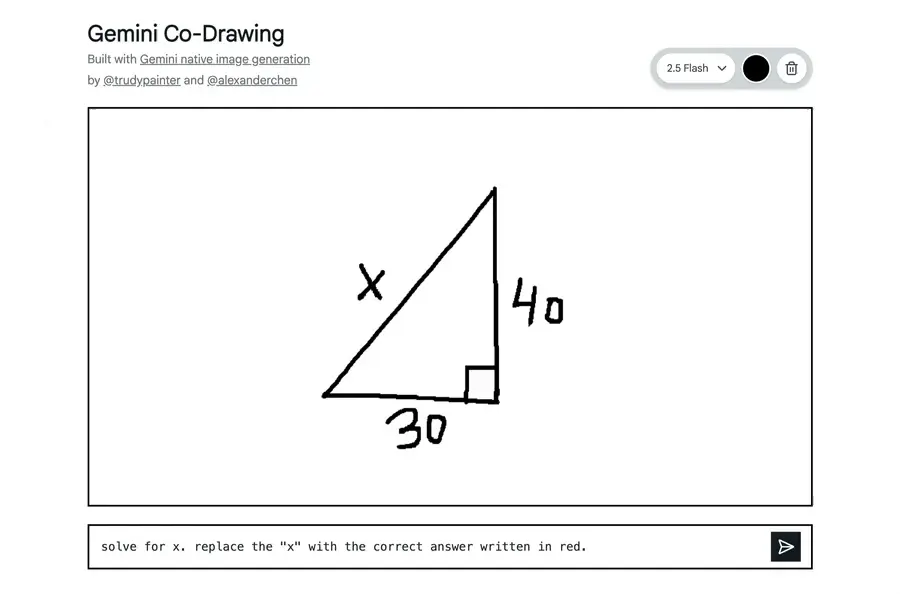

Edit and blend images with prompts using Google Nano Banana.

Refine images with adaptive style control, LoRA merging, and high-res rendering for consistent design output.

Advanced relighting and multi-image fusion tool with fast ControlNet support for detailed, consistent design results.

Create photorealistic, text-accurate visuals with precise prompt control.

Longcat Image, a text-to-image model developed by Meituan, is distributed under the Open RAIL license. Commercial use is therefore permitted only when it complies with the license conditions set by the model's creators. Accessing Longcat Image through RunComfy does not override or bypass those terms: you must still follow the commercial-use and attribution requirements listed on longcatai.org.

Longcat Image currently supports output resolutions up to approximately 4 megapixels (e.g., 2048×2048). Aspect ratios can vary but are constrained to a 1:2 to 2:1 range, and prompts are limited to 512 tokens per text-to-image job. Control references (such as ControlNet or IP-Adapter inputs) are capped at two simultaneous sources per generation to preserve GPU memory efficiency.
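The limits above can be checked on the client before a job is submitted, so that an out-of-range request fails fast instead of consuming a round trip. The following is a minimal Python sketch. The constants restate the figures in this section (treating "approximately 4 megapixels" as 2048×2048 and the 1:2 to 2:1 range as width/height between 0.5 and 2.0). `validate_request` and `approx_token_count` are hypothetical helpers, not part of any RunComfy SDK, and the whitespace-based token count is only a rough stand-in for the model's real tokenizer.

```python
# Limits restated from the documentation text; treat them as values that may change.
MAX_PIXELS = 2048 * 2048           # "approximately 4 megapixels", e.g. 2048x2048
MIN_ASPECT, MAX_ASPECT = 0.5, 2.0  # width/height between 1:2 and 2:1
MAX_PROMPT_TOKENS = 512
MAX_CONTROL_REFS = 2               # e.g. ControlNet or IP-Adapter inputs


def approx_token_count(prompt: str) -> int:
    """Rough token estimate by whitespace splitting.

    ASSUMPTION: the model's actual tokenizer is not documented here. Whitespace
    splitting tends to undercount subword tokens and treats unspaced Chinese text
    as a single token, so use this only as a lower bound.
    """
    return len(prompt.split())


def validate_request(width, height, prompt, control_refs=()):
    """Return a list of problems; an empty list means the request looks valid."""
    problems = []
    if width <= 0 or height <= 0:
        problems.append("width and height must be positive")
        return problems
    if width * height > MAX_PIXELS:
        problems.append(f"{width}x{height} exceeds ~{MAX_PIXELS / 1e6:.1f} MP")
    ratio = width / height
    if not (MIN_ASPECT <= ratio <= MAX_ASPECT):
        problems.append(f"aspect ratio {ratio:.2f} is outside 1:2 to 2:1")
    if approx_token_count(prompt) > MAX_PROMPT_TOKENS:
        problems.append(f"prompt likely exceeds {MAX_PROMPT_TOKENS} tokens")
    if len(control_refs) > MAX_CONTROL_REFS:
        problems.append(
            f"{len(control_refs)} control references; the maximum is {MAX_CONTROL_REFS}"
        )
    return problems
```

For example, a 2048×1024 request sits exactly on the 2:1 edge and passes, while 3000×1000 stays under the pixel cap but is rejected for its 3:1 aspect ratio.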

Once you are satisfied with your text-to-image experiments in the RunComfy Playground, you can export your setup as Python or Node.js code snippets directly from the interface. The Longcat Image API exposes the same parameters and generation pipeline as the Playground. For production use, you will need your RunComfy API key, a way to monitor your credit (usd) balance, and error handling for reliable operation.
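The exported snippets remain the authoritative starting point; what follows is only a minimal Python sketch of the error-handling layer, assuming the actual request is wrapped in a callable. `call_with_retry`, `TransientError`, and the `RUNCOMFY_API_KEY` environment variable name are hypothetical, and which responses count as retryable (for example HTTP 429 or 5xx) is an assumption to confirm against the API's real behavior.

```python
import os
import random
import time


class TransientError(Exception):
    """Hypothetical error a request wrapper raises for retryable failures (e.g. 429/5xx)."""


def load_api_key(env_var="RUNCOMFY_API_KEY"):
    # Hypothetical variable name; the point is to keep the key out of source code.
    key = os.environ.get(env_var)
    if not key:
        raise RuntimeError(f"set {env_var} to your RunComfy API key")
    return key


def call_with_retry(send, payload, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call send(payload), retrying TransientError with exponential backoff and jitter.

    Any other exception propagates immediately, since retrying a malformed
    request would only waste time and credits.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return send(payload)
        except TransientError:
            if attempt == max_attempts:
                raise
            # Delay doubles each attempt (base, 2*base, 4*base, ...) plus up to 10% jitter.
            delay = base_delay * (2 ** (attempt - 1))
            sleep(delay + random.uniform(0, delay * 0.1))
```

Passing `send` and `sleep` in as arguments keeps the retry policy testable without network access; in practice `send` would be the request function from the exported snippet, raising `TransientError` on retryable responses.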

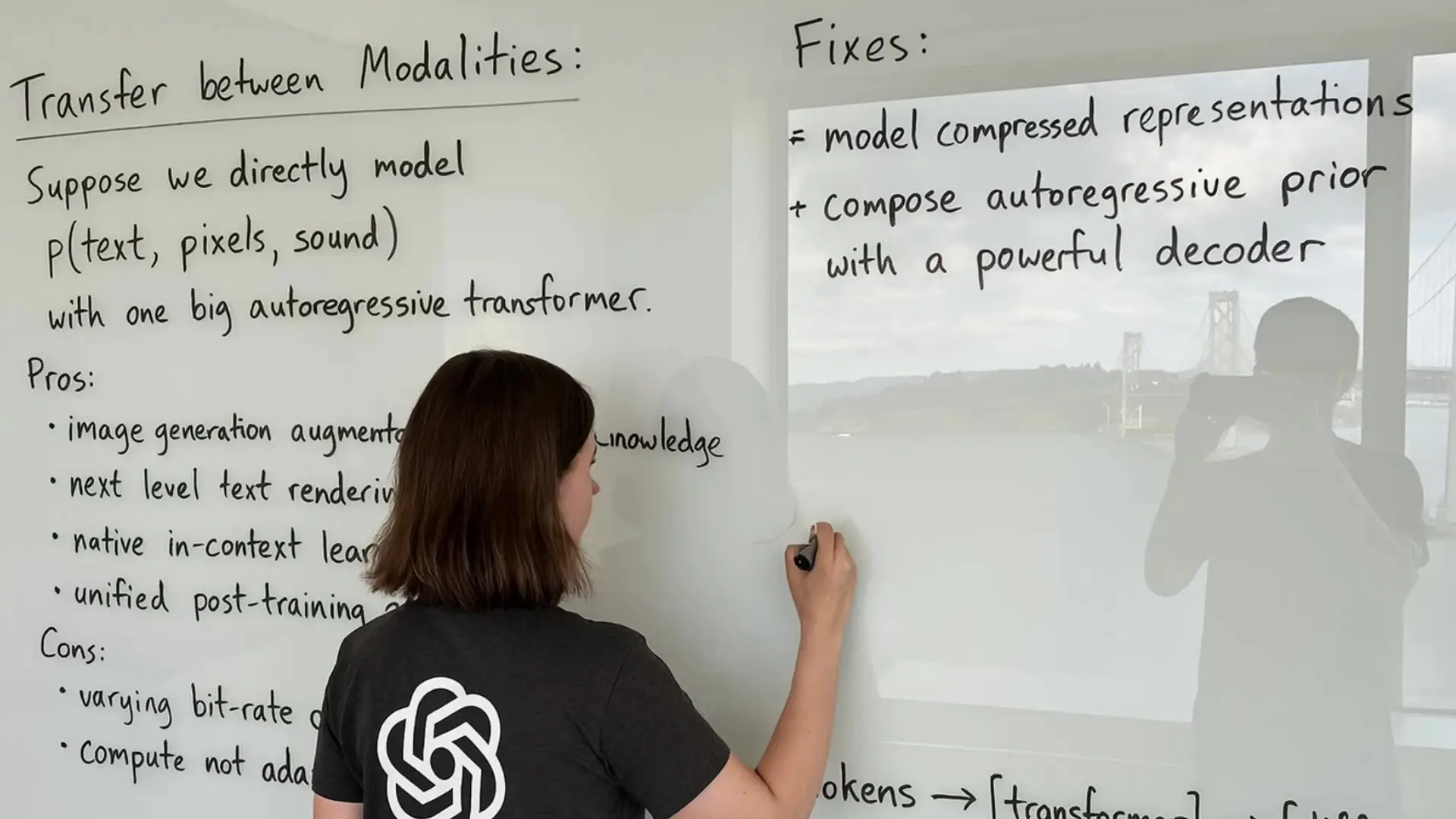

Longcat Image combines a hybrid DiT (Diffusion Transformer) architecture with a VLM encoder, which improves its text-to-image precision, especially for complex multilingual prompts and Chinese typography. It also integrates generation and editing in a single workflow, producing studio-quality results with consistent lighting and textures across multiple editing rounds.

RunComfy uses a credit-based billing system denominated in usd. New users receive free trial credits to explore Longcat Image's text-to-image features; after that, additional usd can be purchased from the Generation section of your dashboard. Both the API and the Playground consume credits in proportion to output resolution and job complexity.
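Because cost scales with resolution and complexity, a rough budget check can be done before launching a batch. The Python sketch below is illustrative only: it reads "proportional" as a linear model, `rate_per_megapixel` and `complexity` are hypothetical inputs you would calibrate from the charges shown in your dashboard, and no actual RunComfy prices are assumed.

```python
def estimate_cost(width, height, rate_per_megapixel, complexity=1.0):
    """Estimate the usd cost of one image under a simple linear model.

    ASSUMPTION: "proportional to resolution and complexity" is modelled as
    rate * megapixels * complexity. Both rate_per_megapixel and complexity are
    hypothetical inputs, not published RunComfy values.
    """
    megapixels = width * height / 1_000_000
    return rate_per_megapixel * megapixels * complexity


def affordable_images(balance, width, height, rate_per_megapixel, complexity=1.0):
    """Number of whole images the balance covers under the same linear model."""
    cost = estimate_cost(width, height, rate_per_megapixel, complexity)
    if cost <= 0:
        raise ValueError("estimated cost must be positive; check the rate and size")
    return int(balance // cost)
```

A linear model is simply the most direct reading of the description; if observed charges do not scale this way, replace `estimate_cost` with a lookup of actual per-job prices.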

Longer processing times for Longcat Image text-to-image requests may be caused by periods of high concurrency. RunComfy automatically queues jobs and scales instances, but for high-volume or low-latency production workloads you can upgrade to a dedicated GPU plan. Contact hi@runcomfy.com for infrastructure-level assistance or to reserve faster GPU tiers.
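Since jobs can wait in the queue during busy periods, a client usually polls for completion with a timeout rather than blocking indefinitely. This Python sketch assumes a hypothetical `get_status` callable that returns a dict with a `"status"` key; the state names `"completed"` and `"failed"` are placeholders, so check the exported snippet for how the real API reports job state.

```python
import time


def wait_for_result(get_status, request_id, timeout=300.0, initial_interval=2.0,
                    max_interval=15.0, clock=time.monotonic, sleep=time.sleep):
    """Poll get_status(request_id) until a terminal state or until timeout seconds pass.

    ASSUMPTION: get_status is hypothetical and returns a dict such as
    {"status": "queued"}; the state names checked below are placeholders.
    """
    deadline = clock() + timeout
    interval = initial_interval
    while True:
        info = get_status(request_id)
        state = info.get("status")
        if state == "completed":
            return info
        if state == "failed":
            raise RuntimeError(f"request {request_id} failed: {info}")
        remaining = deadline - clock()
        if remaining <= 0:
            raise TimeoutError(f"request {request_id} still {state!r} after {timeout}s")
        # Grow the interval gradually (capped) so a long queue does not
        # turn into a flood of status calls; never sleep past the deadline.
        sleep(min(interval, remaining))
        interval = min(interval * 1.5, max_interval)
```

Using `time.monotonic` for the deadline avoids surprises if the system clock is adjusted while a job is waiting, and the injectable `clock` and `sleep` let the loop be tested without real delays.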

The Longcat Image text-to-image API runs the same inference graph and sampling parameters as the Playground, so visual outputs stay consistent when you move from prototyping to automated production.